MolDQN: Revolutionizing Molecule Optimization with Deep Q-Networks for Drug Discovery

This article provides a comprehensive examination of MolDQN (Molecule Deep Q-Network), a pioneering reinforcement learning framework for de novo molecule optimization.

Abstract

This article provides a comprehensive examination of MolDQN (Molecule Deep Q-Network), a pioneering reinforcement learning framework for de novo molecule optimization. Tailored for researchers and drug development professionals, the content explores the foundational principles of combining deep Q-learning with molecular property prediction, details the methodological pipeline for scaffold-based modification, addresses common implementation and optimization challenges, and validates its performance against traditional and state-of-the-art computational chemistry methods. The analysis highlights MolDQN's potential to accelerate hit-to-lead optimization and generate novel chemical entities with desirable pharmacodynamic and pharmacokinetic profiles.

MolDQN Demystified: The Core Concepts of Reinforcement Learning for Molecule Design

Application Notes: MolDQN Framework for De Novo Molecular Design

The traditional drug discovery pipeline is hindered by high costs, long timelines, and high attrition rates, particularly in the early-stage identification of viable lead compounds. AI-driven de novo design, specifically using deep reinforcement learning (RL) models like MolDQN, directly addresses this bottleneck by generating novel, optimized molecular structures in silico.

Core Mechanism of MolDQN: MolDQN frames molecular generation as a Markov Decision Process (MDP). An agent iteratively modifies a molecular graph through defined actions (e.g., adding or removing atoms/bonds) to maximize a reward function based on quantitative structure-activity relationship (QSAR) predictions and chemical property goals.

Key Performance Metrics from Recent Studies: Table 1: Comparative Performance of AI-Driven Molecular Generation Models

| Model / Framework | Primary Method | Success Rate (% of molecules meeting target) | Novelty (Tanimoto Similarity < 0.4) | Key Optimized Property | Reference/Study Year |

|---|---|---|---|---|---|

| MolDQN (Basic) | Deep Q-Network (DQN) | ~80% | >99% | QED, Penalized LogP | Zhou et al., 2019 |

| MolDQN with SMILES | DQN on String Representation | ~76% | >98% | Penalized LogP | Recent Benchmark (2023) |

| Graph-Based GM | Graph Neural Network (GNN) | ~85% | ~95% | DRD2 Activity, Solubility | Industry White Paper, 2024 |

| Fragment-Based RL | Actor-Critic Framework | ~89% | ~92% | Binding Affinity (pIC50) | Recent Conference Proceeding |

Experimental Protocols

Protocol 1: Training a MolDQN Agent for LogP Optimization

- Objective: Train an RL agent to generate molecules with high penalized octanol-water partition coefficient (LogP), a proxy for lipophilicity.

- Materials: Python 3.8+, PyTorch/TensorFlow, RDKit, OpenAI Gym environment configured for molecular graphs.

- Procedure:

- Environment Setup: Define the state space (molecular graph representation), action space (e.g., add carbon, add nitrogen, add bond, remove bond), and reward function: R = logP(molecule) - SA(molecule) - cycle_penalty(molecule).

- Network Initialization: Initialize a Double DQN with a Graph Convolutional Network (GCN) as the Q-value estimator.

- Training Loop: a. Initialize a starting molecule (e.g., benzene). b. For each step, the agent selects an action (ε-greedy policy), applies it to the current molecule, and receives a new state and reward. c. Store transition (s, a, r, s') in replay buffer. d. Sample random mini-batch from buffer to update DQN weights via gradient descent, minimizing the temporal difference error. e. Repeat for 1,000-5,000 episodes, with each episode having a max of 40 steps.

- Evaluation: Deploy the trained agent from multiple starting points. Collect generated molecules, filter invalid structures, and compute property distributions.
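The reward in the Environment Setup step can be sketched in a few lines. This is a minimal illustration that assumes logP and SA values have already been computed elsewhere (for example with RDKit) and that the cycle penalty follows the common penalized-logP convention of counting ring atoms beyond six in the largest ring. The function names and the numeric inputs are hypothetical.

```python
from typing import Sequence


def cycle_penalty(ring_sizes: Sequence[int], max_ring: int = 6) -> int:
    """Penalty for the largest ring exceeding max_ring atoms (0 if acyclic).

    Assumption: penalty = max(0, largest_ring_size - 6), a common convention
    for penalized logP; the protocol itself does not spell this out.
    """
    largest = max(ring_sizes, default=0)
    return max(0, largest - max_ring)


def penalized_logp(logp: float, sa_score: float, ring_sizes: Sequence[int]) -> float:
    """R = logP(molecule) - SA(molecule) - cycle_penalty(molecule)."""
    return logp - sa_score - cycle_penalty(ring_sizes)


# Illustrative inputs only, not measured values.
reward_small_ring = penalized_logp(logp=2.0, sa_score=1.5, ring_sizes=[6])
reward_macrocycle = penalized_logp(logp=2.0, sa_score=1.5, ring_sizes=[6, 8])
```

With identical logP and SA inputs, the molecule containing an eight-membered ring scores two units lower, which is the pressure the penalty is meant to apply.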

Protocol 2: Validating Generated Molecules with In Silico Docking

- Objective: Assess the binding potential of MolDQN-generated molecules against a target protein.

- Materials: Generated molecule library (SDF format), target protein structure (PDB format), AutoDock Vina or Glide software, high-performance computing cluster.

- Procedure:

- Preparation: Prepare protein structure (remove water, add hydrogens, assign charges) and ligand structures (generate 3D conformers, optimize geometry) using RDKit or Maestro.

- Docking Grid Definition: Define the active site binding pocket coordinates based on a co-crystallized native ligand.

- Virtual Screening: Execute batch docking for all generated molecules using the predefined grid. Set exhaustiveness to at least 20 for accuracy.

- Analysis: Rank compounds by predicted binding affinity (kcal/mol). Select top candidates (e.g., top 1%) for further in vitro analysis.
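The Analysis step amounts to a sort and a cut-off. The sketch below assumes docking scores have already been parsed into a dictionary keyed by a ligand identifier; the helper name `select_top_fraction` and the example scores are hypothetical. More negative predicted affinities (kcal/mol) are treated as better.

```python
import math
from typing import Dict, List


def select_top_fraction(scores: Dict[str, float], fraction: float = 0.01) -> List[str]:
    """Return ligand IDs with the most negative predicted binding affinity.

    At least one ligand is always returned when the input is non-empty.
    """
    if not scores:
        return []
    ranked = sorted(scores, key=scores.get)  # ascending: most negative first
    n_keep = max(1, math.ceil(len(ranked) * fraction))
    return ranked[:n_keep]


# Illustrative scores only.
docking_scores = {"mol_a": -9.4, "mol_b": -6.1, "mol_c": -10.2, "mol_d": -7.8}
top_half = select_top_fraction(docking_scores, fraction=0.5)
```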

Visualization Diagrams

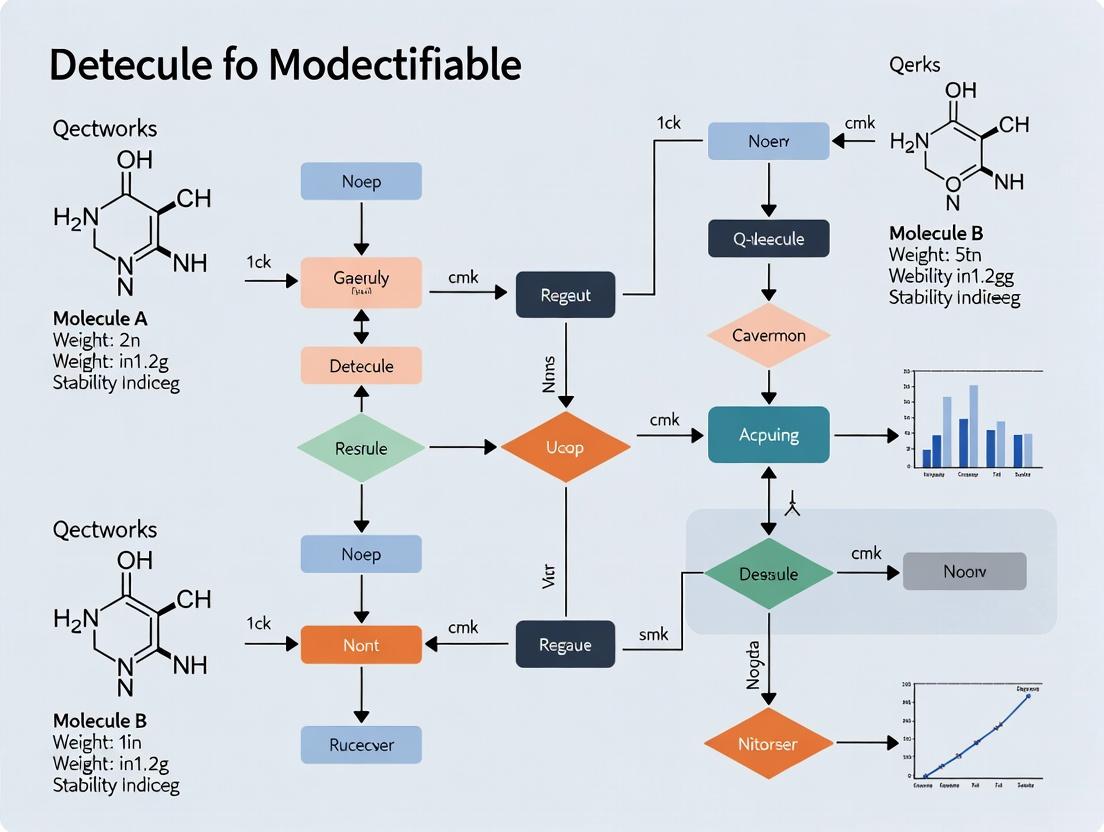

MolDQN Reinforcement Learning Training Cycle

AI-Driven Workflow Bypassing Traditional Screening Bottleneck

The Scientist's Toolkit: Research Reagent & Software Solutions

Table 2: Essential Resources for AI-Driven Molecular Design Experiments

| Item / Resource | Type | Primary Function in Context | Example Vendor/Platform |

|---|---|---|---|

| RDKit | Open-Source Cheminformatics Library | Fundamental for manipulating molecular structures, calculating descriptors (LogP, QED, SA), and handling SMILES/Graph representations. | rdkit.org |

| PyTorch / TensorFlow | Deep Learning Framework | Provides the foundational infrastructure for building, training, and deploying the Deep Q-Networks (DQNs) and GNNs used in MolDQN. | pytorch.org, tensorflow.org |

| OpenAI Gym | Reinforcement Learning Toolkit | Offers a standardized API to create custom environments for the molecular MDP, defining state, action, and reward. | gym.openai.com (community maintained) |

| AutoDock Vina | Molecular Docking Software | Critical for in silico validation, predicting the binding pose and affinity of generated molecules against a protein target. | vina.scripps.edu |

| ZINC or ChEMBL | Compound Database | Provides initial real-world molecular structures for pre-training or as starting points for the RL agent. | zinc.docking.org, ebi.ac.uk/chembl |

| High-Performance Computing (HPC) Cluster | Computational Hardware | Essential for training complex RL models and running large-scale virtual docking screens within a feasible timeframe. | Local institutional or cloud-based (AWS, GCP) |

What is MolDQN? Defining the Deep Q-Network Framework for Molecular Graphs

Within the broader thesis on the application of deep reinforcement learning (DRL) to de novo molecular design and optimization, MolDQN represents a seminal framework. This thesis argues that MolDQN establishes a foundational paradigm for treating molecule modification as a sequential decision-making process, directly optimizing chemical properties via interactive exploration of the vast chemical space. By integrating a Deep Q-Network (DQN) with molecular graph representations, it moves beyond traditional generative models, enabling goal-directed generation with explicit reward signals tied to pharmacological objectives.

Core Framework Definition

MolDQN (Molecular Deep Q-Network) is a reinforcement learning (RL) framework that formulates the task of molecular optimization as a Markov Decision Process (MDP). An agent learns to perform chemical modifications on a molecule to maximize a predicted reward, typically a quantitative estimate of a desired molecular property (e.g., drug-likeness, synthetic accessibility, binding affinity).

Key Components

- State (s): The current molecular graph.

- Action (a): A valid modification to the molecular graph (e.g., adding or removing a bond, adding an atom or functional group).

- Policy (π): The strategy that defines the agent's behavior (selecting actions given states). This is learned by the DQN.

- Reward (r): A scalar signal received after taking an action, often a function of the property of the new molecule (e.g., the change in the penalized LogP score or QED).

- Q-Network (Q(s,a;θ)): A neural network that approximates the expected cumulative future reward (Q-value) of taking action a in state s. The parameters θ are learned during training.

MolDQN Process Flow

Diagram 1: MolDQN Reinforcement Learning Cycle

Table 1: Benchmark Performance of MolDQN on Penalized LogP Optimization (Source: Zhou et al., Scientific Reports 2019 and subsequent studies)

| Metric / Method | MolDQN | VAE (Baseline) | JT-VAE (Baseline) |

|---|---|---|---|

| Improvement over Start | +4.50 | +2.94 | +3.45 |

| Top-3 Molecule Score | 8.98 | 4.56 | 7.98 |

| Success Rate (%) | 82% | 60% | 76% |

| Sample Efficiency | ~3k episodes | ~10k samples | ~5k samples |

Table 2: Optimization Results for Different Target Properties

| Target Property | Metric | Initial Avg. | MolDQN Optimized Avg. |

|---|---|---|---|

| QED | Score (0 to 1) | 0.67 | 0.92 |

| Synthetic Accessibility (SA) | Score (1 to 10) | 4.12 | 2.87 (more synthesizable) |

| Multi-Objective (QED+SA) | Combined Reward | - | +31% vs. single-objective |

Experimental Protocols

Protocol 4.1: Standard MolDQN Training for Penalized LogP Optimization

Objective: Train a MolDQN agent to maximize the penalized LogP of a molecule through sequential single-bond additions/removals.

Materials:

- Software: RDKit, PyTorch/TensorFlow, OpenAI Gym-style environment.

- Data: ZINC250k dataset (pre-processed SMILES strings).

- Hardware: GPU (e.g., NVIDIA V100) recommended.

Procedure:

- Environment Setup:

- Define the state space as all valid molecular graphs under a maximum atom constraint (e.g., 38 atoms).

- Define the action space as a set of feasible graph modifications (e.g., "add a single bond between atom i and j," "remove a bond," "change bond type").

- Implement a reward function `R(m) = logP(m) - SA(m) - cycle_penalty(m)`, calculated using RDKit.

- Network Initialization:

- Initialize a policy Q-network and a target Q-network with identical architecture (typically a Graph Neural Network or fingerprint-based MLP).

- Initialize a replay buffer `D` with capacity `N` (e.g., 1M transitions).

- Training Loop (for M episodes):

a. Initialize a random starting molecule `s_t` from the dataset.

b. For each step `t` in the episode (max `T` steps):

i. With probability `ε`, select a random valid action `a_t`; otherwise, select `a_t = argmax_a Q(s_t, a; θ)`.

ii. Execute `a_t` in the environment to get the new molecule `s_{t+1}` and reward `r_t`.

iii. Store transition `(s_t, a_t, r_t, s_{t+1})` in replay buffer `D`.

iv. Sample a random mini-batch of transitions from `D`.

v. Compute target Q-values: `y = r + γ * max_a' Q(s_{t+1}, a'; θ_target)`.

vi. Update policy network parameters `θ` by minimizing MSE loss: `L = (y - Q(s_t, a_t; θ))^2`.

vii. Every `C` steps, update target network: `θ_target <- τ*θ + (1-τ)*θ_target`.

viii. `s_t <- s_{t+1}`.

c. Decay exploration rate `ε`.
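The target computation and the soft target-network update from the training loop can be sketched without any deep learning framework. The example below is a simplified stand-in: the Q-function is a plain callable over a lookup table, parameters are a flat list of floats, and returning the bare reward when no next actions exist is an added assumption for terminal states that the protocol does not state. All names are hypothetical.

```python
from typing import Callable, List, Sequence


def td_target(
    reward: float,
    next_state: str,
    next_actions: Sequence[str],
    q_target: Callable[[str, str], float],
    gamma: float = 0.9,
) -> float:
    """y = r + gamma * max_a' Q_target(s', a').

    Assumption: if no actions are available in s', the state is treated
    as terminal and the target is just the reward.
    """
    if not next_actions:
        return reward
    return reward + gamma * max(q_target(next_state, a) for a in next_actions)


def soft_update(theta: List[float], theta_target: List[float], tau: float = 0.01) -> List[float]:
    """theta_target <- tau * theta + (1 - tau) * theta_target, element-wise."""
    return [tau * w + (1.0 - tau) * wt for w, wt in zip(theta, theta_target)]


# Toy target Q-function backed by a lookup table (illustrative values).
q_table = {("CC", "add_C"): 1.0, ("CC", "add_N"): 2.0}
y = td_target(0.5, "CC", ["add_C", "add_N"], lambda s, a: q_table.get((s, a), 0.0))
new_target = soft_update([1.0, 2.0], [0.0, 0.0], tau=0.1)
```

In a real implementation the same two operations would be applied to tensors and network weights, but the arithmetic is unchanged.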

Validation:

- Every K episodes, run a validation episode from a fixed set of initial molecules.

- Track the maximum reward achieved and the properties of the top-5 generated molecules.

Protocol 4.2: Multi-Objective Optimization with Constrained Rewards

Objective: Optimize a primary property (e.g., QED) while constraining a secondary property (e.g., Molecular Weight < 500).

Procedure:

- Modify the reward function: `R(m) = QED(m) + λ * penalty`, where `penalty = max(0, MW(m) - 500)` and `λ` is a negative scaling factor.

- Implement an action masking layer in the Q-network that invalidates actions leading to molecules that immediately violate the hard constraint (e.g., MW > 550).

- Follow Protocol 4.1, but monitor both objectives separately during validation.
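A minimal sketch of the soft penalty and the hard-constraint mask, assuming QED and molecular weight have been computed beforehand. The value `lam=-0.01`, the function names, and the candidate weights are illustrative assumptions, not values from the protocol.

```python
from typing import Dict, List


def constrained_reward(qed: float, mol_weight: float, lam: float = -0.01,
                       soft_limit: float = 500.0) -> float:
    """R(m) = QED(m) + lam * max(0, MW(m) - soft_limit), with lam negative."""
    penalty = max(0.0, mol_weight - soft_limit)
    return qed + lam * penalty


def mask_actions(candidate_weights: Dict[str, float], hard_limit: float = 550.0) -> List[str]:
    """Keep only actions whose resulting molecule respects the hard MW limit."""
    return [action for action, mw in candidate_weights.items() if mw <= hard_limit]


# Molecular weight each candidate action would produce (illustrative).
candidates = {"add_C": 512.0, "add_Br": 579.9}
allowed = mask_actions(candidates)
reward_ok = constrained_reward(qed=0.8, mol_weight=450.0)
reward_heavy = constrained_reward(qed=0.8, mol_weight=520.0)
```

Between 500 and 550 the agent is only discouraged; above 550 the action is never offered, which keeps the two mechanisms from competing.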

Diagram 2: MolDQN Network with Action Masking

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials for Implementing and Testing MolDQN

| Item / Reagent | Function / Role in Experiment | Example / Specification |

|---|---|---|

| Molecular Dataset | Provides initial states and a training distribution for the agent. | ZINC250k, ChEMBL, GuacaMol benchmark sets. |

| Cheminformatics Library | Enables molecular representation, manipulation, and property calculation. | RDKit (open-source) or OEChem. |

| Deep Learning Framework | Provides the infrastructure to build, train, and validate the DQN models. | PyTorch, TensorFlow (with GPU support). |

| Reinforcement Learning Env. | Defines the MDP (state/action space, transition dynamics, reward function). | Custom OpenAI Gym environment. |

| Graph Neural Network Library | (Optional but recommended) Facilitates direct learning on molecular graph representations. | PyTorch Geometric (PyG), DGL-LifeSci. |

| Property Calculation Tools | Computes the reward signals that guide the optimization. | RDKit descriptors, external QSAR models, docking software (e.g., AutoDock Vina) for advanced tasks. |

| High-Performance Compute | Accelerates the intensive training process, which involves thousands of simulation episodes. | GPU cluster (NVIDIA Tesla series). |

| Chemical Validation Suite | Assesses the synthetic feasibility and novelty of generated molecules post-optimization. | SAscore, RAscore, FCFP-based similarity search. |

Within the broader thesis on MolDQN (Molecular Deep Q-Network) for de novo molecular design and optimization, the framework is conceptualized as a Markov Decision Process (MDP). This MDP formalizes the iterative process of modifying a molecule to improve its properties. The four key components—Agent, Action Space, State Space, and Reward Function—form the computational engine that enables autonomous, goal-directed molecule generation. This document provides detailed application notes and protocols for implementing and experimenting with these components in a drug discovery research setting.

Detailed Component Analysis & Protocols

The Agent

The Agent is the decision-making algorithm, typically a Deep Q-Network (DQN) or its variants (e.g., Double DQN, Dueling DQN). It learns a policy π that maps molecular states to modification actions to maximize cumulative reward.

Core Protocol: MolDQN Agent Training

- Objective: Train a DQN to propose optimal molecular modifications.

- Materials: Python 3.8+, PyTorch/TensorFlow, RDKit, CUDA-capable GPU (recommended).

- Procedure:

- Initialize: Create a DQN with two networks (online Q-network, target Q-network). Initialize replay buffer D to capacity N.

- Episode Loop: For each episode, start with a valid, initial molecule state s_t.

- Step Loop: For each step t in episode:

a. Action Selection: With probability ε, select a random valid action from A(s_t). Otherwise, select a = argmax_a Q(s_t, a; θ), where θ are the online network parameters.

b. Execute Action: Apply action a to state s_t to get new molecule s_{t+1}. Use RDKit to ensure chemical validity.

c. Compute Reward: Calculate reward r_t using the predefined reward function.

d. Store Transition: Store (s_t, a, r_t, s_{t+1}) in replay buffer D.

e. Sample & Learn: Sample a random minibatch of transitions from D. Compute target y = r + γ * max_a' Q(s_{t+1}, a'; θ_target). Perform a gradient descent step on (y - Q(s_t, a; θ))^2 with respect to θ.

f. Update Target Network: Every C steps, soft or hard update θ_target with θ.

g. Terminate: If s_{t+1} is terminal (e.g., max steps reached, perfect property achieved), end episode.
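The replay buffer D used in the Store Transition and Sample & Learn steps can be sketched with the standard library. This is one possible layout, not the original implementation: molecules are stored as SMILES strings, and the `done` flag is an added assumption that makes terminal handling explicit.

```python
import random
from collections import deque
from typing import List, NamedTuple, Optional


class Transition(NamedTuple):
    state: str        # SMILES of s_t
    action: str       # label of the applied modification
    reward: float     # r_t
    next_state: str   # SMILES of s_{t+1}
    done: bool        # assumption: flag marking terminal transitions


class ReplayBuffer:
    """Fixed-capacity buffer; the oldest transitions are evicted first."""

    def __init__(self, capacity: int, seed: Optional[int] = None) -> None:
        self._buffer = deque(maxlen=capacity)
        self._rng = random.Random(seed)

    def push(self, transition: Transition) -> None:
        self._buffer.append(transition)

    def sample(self, batch_size: int) -> List[Transition]:
        """Uniform sample without replacement, capped at the buffer size."""
        k = min(batch_size, len(self._buffer))
        return self._rng.sample(list(self._buffer), k)

    def __len__(self) -> int:
        return len(self._buffer)


buffer = ReplayBuffer(capacity=3, seed=0)
for i in range(5):
    buffer.push(Transition(f"s{i}", "add_C", 0.1 * i, f"s{i + 1}", False))
batch = buffer.sample(2)
```

Uniform sampling breaks the correlation between consecutive steps of one episode, which is the reason the protocol samples from D instead of learning from the latest transition.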

- Key Parameters (Typical Ranges):

- Discount factor (γ): 0.9 - 0.99

- Replay buffer size (N): 50,000 - 1,000,000

- Minibatch size (k): 32 - 128

- Target update frequency (C): 100 - 10,000 steps

- ε-greedy decay: 1.0 to 0.01 over 1,000,000 steps
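The ε-greedy schedule and selection rule listed above can be written as two small functions. The sketch assumes a linear decay, which the parameter list does not specify, and represents Q-values as a dictionary from action label to value; both function names are hypothetical.

```python
import random
from typing import Dict


def linear_epsilon(step: int, eps_start: float = 1.0, eps_end: float = 0.01,
                   decay_steps: int = 1_000_000) -> float:
    """Linearly anneal epsilon from eps_start to eps_end, then hold eps_end."""
    fraction = min(max(step, 0) / decay_steps, 1.0)
    return eps_start + fraction * (eps_end - eps_start)


def epsilon_greedy(q_values: Dict[str, float], epsilon: float, rng: random.Random) -> str:
    """Pick a random valid action with probability epsilon, else the greedy one."""
    if rng.random() < epsilon:
        return rng.choice(sorted(q_values))
    return max(q_values, key=q_values.get)


rng = random.Random(0)
q_values = {"add_C": 1.0, "add_N": 2.0}
greedy_action = epsilon_greedy(q_values, epsilon=0.0, rng=rng)
eps_midway = linear_epsilon(500_000)
```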

Action Space (Molecular Modifications)

The Action Space defines the set of permissible chemical modifications the agent can perform on the current molecule. It is typically a discrete set of graph-based transformations.

Table 1: Common Discrete Actions in MolDQN-like Frameworks

| Action Category | Specific Action | Chemical Implementation (via RDKit, with `rwmol = Chem.RWMol(mol)`) | Validity Check Required |

|---|---|---|---|

| Atom Addition | Add a carbon atom (with single bond) | `new_idx = rwmol.AddAtom(Chem.Atom('C'))`, then `rwmol.AddBond(i, new_idx, Chem.BondType.SINGLE)` | Yes - check valency |

| Atom Addition | Add a nitrogen atom (with double bond) | `new_idx = rwmol.AddAtom(Chem.Atom('N'))`, then `rwmol.AddBond(i, new_idx, Chem.BondType.DOUBLE)` | Yes - check valency & aromaticity |

| Bond Addition | Add a single bond between two atoms | `rwmol.AddBond(i, j, Chem.BondType.SINGLE)` | Yes - reject already-bonded pairs and disallowed cycles |

| Bond Addition | Increase bond order (Single -> Double) | `rwmol.GetBondBetweenAtoms(i, j).SetBondType(Chem.BondType.DOUBLE)` | Yes - check valency & ring strain |

| Bond Removal | Remove a bond (if the molecule has more than one bond) | `rwmol.RemoveBond(i, j)` | Yes - prevent molecule dissociation |

| Functional Group Addition | Add a hydroxyl (-OH) group | Use SMILES [OH] and merge fragments | Yes - check for clashes |

| Terminal Action | Stop modification (output final molecule) | N/A | N/A |

Protocol: Defining and Validating the Action Space

- Define Action List: Enumerate all graph modification actions as in Table 1.

- Implement Validity Function: For a given state s, create a function `get_valid_actions(s)` that returns a subset of actions. This function must use chemical sanity checks (e.g., valency, reasonable ring size, sanitization success in RDKit) to filter out actions that would lead to invalid or unstable molecules.

- Action Masking: During DQN training, apply an action mask to the output of the final Q-value layer, setting the Q-values of invalid actions to -∞ so that the greedy argmax can only select valid actions.
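The masking step can be illustrated on plain Python lists. The sketch assumes the network emits one Q-value per action index and that a boolean mask of the same length has been derived from `get_valid_actions(s)`; `masked_argmax` is a hypothetical helper name.

```python
import math
from typing import Sequence


def masked_argmax(q_values: Sequence[float], valid_mask: Sequence[bool]) -> int:
    """Return the index of the best valid action.

    Invalid actions are replaced by -inf before the argmax, so they can
    never be selected. Raises ValueError if no action is valid.
    """
    if len(q_values) != len(valid_mask):
        raise ValueError("q_values and valid_mask must have the same length")
    masked = [q if ok else -math.inf for q, ok in zip(q_values, valid_mask)]
    best = max(range(len(masked)), key=masked.__getitem__)
    if masked[best] == -math.inf:
        raise ValueError("no valid action available")
    return best


# Action 1 has the highest raw Q-value but is chemically invalid here.
chosen = masked_argmax([0.2, 0.9, 0.5], [True, False, True])
```

Raising when every action is masked makes a dead-end state visible, so the caller can end the episode instead of silently applying an invalid modification.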

State Space (Molecular Representation)

The State Space is a numerical representation (fingerprint or graph) of the current molecule s_t.

Table 2: Common Molecular Representations for RL State Space

| Representation | Dimension | Description | Pros | Cons |

|---|---|---|---|---|

| Extended Connectivity Fingerprint (ECFP) | 1024 - 4096 bits | Circular topological fingerprint capturing atomic neighborhoods. | Fixed-length, fast computation, good for similarity. | Loss of structural details, predefined length. |

| Molecular Graph | Variable | Direct representation of atoms (nodes) and bonds (edges). | Maximally expressive, captures topology exactly. | Requires Graph Neural Network (GNN), more complex. |

| MACCS Keys | 166 bits | Predefined structural key fingerprint. | Interpretable, very fast. | Low resolution, limited descriptive power. |

| Physicochemical Descriptor Vector | 200 - 5000 | Vector of computed properties (LogP, TPSA, etc.). | Directly relevant to reward. | Not unique, may not guide structure generation well. |

Protocol: State Representation Processing Workflow

- Input: SMILES string of current molecule.

- Sanitization: Use RDKit's `Chem.MolFromSmiles()` with sanitization flags. Reject invalid molecules (reset episode).

- Representation Choice:

- For ECFP: Use `AllChem.GetMorganFingerprintAsBitVect(mol, radius=2, nBits=2048)`.

- For Graph: Represent atoms as nodes (features: atom type, degree, etc.) and bonds as edges (features: bond type). Normalize features.

- State Output: Deliver a fixed-size vector (for fingerprints) or a graph object to the DQN or GNN agent.

Diagram Title: Molecular State Processing Workflow

Reward Function

The Reward Function R(s, a, s') provides the learning signal. It is a combination of property-based (e.g., drug-likeness, binding affinity prediction) and step penalties.

Typical Reward Components:

- Property Score (R_prop): Scaled value from a predictive model (e.g., QED for drug-likeness, predicted pIC50 for binding, where higher is better). Example: `R_qed = (QED(mol) - 0.5) * 10`.

- Improvement Reward (R_imp): Bonus for improving the property beyond the previous step: `R_imp = max(0, QED(s') - QED(s)) * 5`.

- Step Penalty (R_step): Small negative reward (e.g., -0.1) per step to encourage efficiency.

- Validity & Uniqueness Bonus (R_val): Positive reward for generating a novel, valid molecule.

- Constraint Penalty (R_pen): Large negative reward for violating hard constraints (e.g., synthesizability score below threshold).

Protocol: Designing a Multi-Objective Reward Function

- Define Objectives: List target properties (e.g., QED > 0.6, pIC50 > 7.0, SA_Score < 4.0).

- Normalize Scores: Scale each property to a common range (e.g., 0 to 1) using sigmoid or min-max scaling based on known distributions.

- Weight Components: Assign weights w_i to each objective based on priority: `R_total = w1*R_qed + w2*R_binding + w3*R_sa + R_step`.

- Implement Clipping: Clip final reward to a stable range (e.g., [-10, 10]) to prevent exploding gradients.

- Test Sensitivity: Run short training bursts with different weight combinations to observe learning dynamics before full-scale training.
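The normalize, weight, and clip steps of this protocol can be combined into a single function. The sketch below makes several assumptions that are not fixed by the protocol: QED is used unscaled because it already lies in [0, 1], pIC50 is squashed with a sigmoid centered on the 7.0 target, SA is min-max scaled over its 1 to 10 range and inverted so that easier synthesis scores higher, and the default weights are arbitrary.

```python
import math
from typing import Tuple


def sigmoid_scale(value: float, midpoint: float, steepness: float = 1.0) -> float:
    """Map a raw property value to (0, 1), with 0.5 at the midpoint."""
    return 1.0 / (1.0 + math.exp(-steepness * (value - midpoint)))


def total_reward(
    qed: float,
    pic50: float,
    sa_score: float,
    weights: Tuple[float, float, float] = (1.0, 1.0, 0.5),
    step_penalty: float = -0.1,
    clip: Tuple[float, float] = (-10.0, 10.0),
) -> float:
    """R_total = w1*R_qed + w2*R_binding + w3*R_sa + R_step, then clipped."""
    r_qed = qed                                  # already in [0, 1]
    r_binding = sigmoid_scale(pic50, midpoint=7.0)
    r_sa = 1.0 - (sa_score - 1.0) / 9.0          # SA 1 (easy) -> 1.0, SA 10 (hard) -> 0.0
    w1, w2, w3 = weights
    raw = w1 * r_qed + w2 * r_binding + w3 * r_sa + step_penalty
    return max(clip[0], min(clip[1], raw))


example = total_reward(qed=0.5, pic50=7.0, sa_score=1.0,
                       weights=(1.0, 1.0, 1.0), step_penalty=0.0)
```

Because every component is on a comparable scale before weighting, the weights can be read directly as relative priorities during the sensitivity runs.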

Table 3: Example Reward Function for Lead Optimization

| Component | Calculation | Weight | Purpose |

|---|---|---|---|

| Drug-likeness (QED) | 10 * (QED(s') - 0.7) | 1.0 | Reward QED above a 0.7 baseline and penalize values below it. |

| Synthetic Accessibility | -2 * SA_Score(s') | 0.8 | Penalize complex, hard-to-synthesize structures. |

| Step Penalty | -0.05 | Fixed | Encourage shorter modification pathways. |

| Invalid Action Penalty | -1.0 | Fixed | Strongly discourage invalid chemistry. |

| Cliff Reward | +5.0 if pIC50_pred > 8.0 | -- | Large bonus for achieving primary activity goal. |

Diagram Title: Multi-Objective Reward Calculation Flow

The Scientist's Toolkit: Research Reagent Solutions

Table 4: Essential Materials & Tools for MolDQN Research

| Item / Reagent | Supplier / Source | Function in Experiment |

|---|---|---|

| RDKit | Open-source (rdkit.org) | Core cheminformatics toolkit for molecule manipulation, fingerprinting, and validity checks. |

| PyTorch / TensorFlow | Open-source (pytorch.org, tensorflow.org) | Deep learning frameworks for building and training the DQN Agent. |

| GPU Computing Resource | NVIDIA (e.g., V100, A100) | Accelerates deep Q-network training, essential for large-scale experiments. |

| ZINC Database | Irwin & Shoichet Lab, UCSF | Source of initial, purchasable molecules for training and as starting points. |

| OpenAI Gym / ChemGym | OpenAI / Custom | Environment interfaces for standardizing the RL MDP for molecules. |

| Pre-trained Property Predictors | e.g., ChemProp, DeepChem | Provide fast, in-silico reward signals for properties like solubility or toxicity. |

| Synthetic Accessibility (SA) Score Calculator | RDKit or Ertl & Schuffenhauer algorithm | Computes SA_Score as a key component of the reward function to ensure practicality. |

| Molecular Dataset (e.g., ChEMBL) | EMBL-EBI | Used for pre-training predictive models or benchmarking generated molecules. |

| Jupyter Notebook / Lab | Open-source | Interactive environment for prototyping and analyzing RL runs. |

This document details the application notes and protocols for implementing core Reinforcement Learning (RL) principles within the MolDQN framework. MolDQN represents a pioneering application of deep Q-networks to the problem of de novo molecule generation and optimization, framing chemical design as a Markov Decision Process (MDP). Within the context of a broader thesis on molecule modification research, understanding these principles is critical for advancing autonomous, goal-directed molecular discovery.

Core RL Principles in MolDQN: Theoretical Framework

Q-Learning and the Deep Q-Network (DQN)

In MolDQN, the agent learns to modify a molecule through a series of atom or bond additions/removals. The Q-function, $Q(s, a)$, estimates the expected cumulative reward of taking action $a$ (e.g., adding a nitrogen atom) in molecular state $s$ (the current molecule). The DQN approximates this complex function.

Key Update Rule (Temporal Difference): $Q_{\text{new}}(s_t, a_t) = Q(s_t, a_t) + \alpha [r_t + \gamma \max_{a} Q(s_{t+1}, a) - Q(s_t, a_t)]$ Where:

- $\alpha$: Learning rate

- $r_t$: Immediate reward (e.g., change in a property like QED)

- $\gamma$: Discount factor
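A single application of the update rule with concrete numbers may help. The values below are illustrative only; in particular, a learning rate of 0.1 is chosen to make the arithmetic visible and is far larger than the optimizer step sizes used when training a network.

```python
def q_update(q_sa: float, reward: float, max_q_next: float,
             alpha: float = 0.1, gamma: float = 0.9) -> float:
    """One temporal-difference update of a single Q(s_t, a_t) entry."""
    td_error = reward + gamma * max_q_next - q_sa
    return q_sa + alpha * td_error


# Q(s_t, a_t) = 1.0, r_t = 0.5, max_a Q(s_{t+1}, a) = 2.0
# TD error = 0.5 + 0.9 * 2.0 - 1.0 = 1.3, so the estimate moves to 1.13.
updated = q_update(q_sa=1.0, reward=0.5, max_q_next=2.0)
```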

Table 1: MolDQN Q-Learning Parameters and Typical Values

| Parameter | Symbol | Typical Range in MolDQN | Description |

|---|---|---|---|

| Discount Factor | $\gamma$ | 0.7 - 0.9 | Determines agent's foresight; higher values prioritize long-term reward. |

| Learning Rate | $\alpha$ | 0.0001 - 0.001 | Step size for neural network optimizer (Adam). |

| Replay Buffer Size | $N$ | 1,000,000 - 5,000,000 | Stores past experiences (s, a, r, s') for stable training. |

| Target Network Update Freq. | $C$ | Every 100 - 1000 steps | How often the target Q-network parameters are synchronized. |

| Batch Size | $B$ | 64 - 256 | Number of experiences sampled from replay buffer per update. |

Policy Derivation from Q-Values

MolDQN typically employs a deterministic greedy policy derived from the learned Q-network: $\pi(s) = \arg\max_{a \in \mathcal{A}} Q(s, a; \theta)$ where $\theta$ are the DQN parameters. The action space $\mathcal{A}$ consists of feasible chemical modifications.

Exploration vs. Exploitation

Balancing the trial of novel modifications (exploration) with the use of known successful ones (exploitation) is paramount.

- $\epsilon$-Greedy Strategy: With probability $\epsilon$, choose a random valid action; otherwise, choose the action with the highest Q-value.

- Annealing: $\epsilon$ decays from a high value (e.g., 1.0) to a low value (e.g., 0.01) over training, shifting from exploration to exploitation.

- Reward Shaping: Designing the reward function $r_t$ is a form of implicit guidance. A common approach is $r_t = \text{property}(s_{t+1}) - \text{property}(s_t) + \text{penalty}$.

Table 2: Exploration Strategies and Their Impact

| Strategy | Implementation in MolDQN | Effect on Molecular Exploration |

|---|---|---|

| $\epsilon$-Greedy | Linear decay of $\epsilon$ over 1M steps. | Broad initial search of chemical space, gradually focusing on promising regions. |

| Boltzmann (Softmax) | Sample action based on $p(a \mid s) \propto \exp(Q(s, a)/\tau)$, where $\tau$ here is a temperature (not the soft-update rate). | Probabilistic exploration that considers relative Q-value confidence. |

| Noise in Action Representation | Adding noise to the fingerprint or latent vector of state $s$. | Encourages small perturbations in chemical structure, leading to local exploration. |
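The Boltzmann strategy in the table can be sketched as a numerically stable softmax over Q-values followed by a weighted draw. The function names are hypothetical and the temperature values are illustrative.

```python
import math
import random
from typing import List, Sequence


def boltzmann_probs(q_values: Sequence[float], temperature: float = 1.0) -> List[float]:
    """p(a | s) proportional to exp(Q(s, a) / temperature)."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    q_max = max(q_values)  # subtract the max for numerical stability
    exps = [math.exp((q - q_max) / temperature) for q in q_values]
    total = sum(exps)
    return [e / total for e in exps]


def sample_action(q_values: Sequence[float], temperature: float, rng: random.Random) -> int:
    """Draw one action index according to the Boltzmann distribution."""
    probs = boltzmann_probs(q_values, temperature)
    return rng.choices(range(len(q_values)), weights=probs, k=1)[0]


uniform = boltzmann_probs([1.0, 1.0])
nearly_greedy = boltzmann_probs([0.0, 1.0], temperature=0.01)
action = sample_action([0.1, 2.0, -1.0], temperature=0.5, rng=random.Random(0))
```

A high temperature flattens the distribution toward uniform exploration, while a temperature near zero approaches the greedy policy.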

Experimental Protocols

Protocol 1: Training a MolDQN Agent for Penalized LogP Optimization

Objective: Train a MolDQN agent to sequentially modify molecules to maximize the penalized LogP score, a measure of lipophilicity and synthetic accessibility.

Materials & Reagents: See The Scientist's Toolkit below.

Procedure:

- Environment Initialization:

- Initialize the molecular MDP environment (e.g., using `RDKit` and an `OpenAI Gym` interface).

- Define the state representation: 2048-bit Morgan fingerprint (radius 3).

- Define the action space: A set of valid chemical transformations (e.g., append atom, change bond, remove atom).

- Set the reward function: $r_t = \text{penalized LogP}(s_{t+1}) - \text{penalized LogP}(s_t)$.

Agent Initialization:

- Initialize the Q-network: a multi-layer perceptron (MLP) with layers [2048, 512, 128, n_actions].

- Initialize the target network as an identical copy.

- Initialize the experience replay buffer with capacity $N = 2,000,000$.

- Set hyperparameters: $\gamma=0.8$, $\alpha=0.0005$, batch size $B=128$, $\epsilon_{\text{start}}=1.0$, $\epsilon_{\text{end}}=0.01$, decay steps=1,000,000.

Training Loop (for 2,000,000 steps):

a. State Acquisition: Receive initial state $s_t$ (a starting molecule).

b. Action Selection: With probability $\epsilon$, select a random valid action. Otherwise, select $a_t = \arg\max_{a} Q(s_t, a; \theta)$.

c. Step Execution: Execute $a_t$ in the environment. Observe reward $r_t$ and next state $s_{t+1}$.

d. Storage: Store transition $(s_t, a_t, r_t, s_{t+1})$ in the replay buffer.

e. Sampling: Sample a random minibatch of $B$ transitions from the buffer.

f. Loss Calculation: Compute Mean Squared Error (MSE) loss: $L = \frac{1}{B} \sum [ (r + \gamma \max_{a'} Q(s', a'; \theta^{-}) - Q(s, a; \theta) )^2 ]$ where $\theta^{-}$ are the target network parameters.

g. Network Update: Perform a gradient descent step on $L$ w.r.t. $\theta$ using the Adam optimizer.

h. Target Update: Every 500 steps, softly update target network: $\theta^{-} \leftarrow \tau \theta + (1-\tau) \theta^{-}$ ($\tau=0.01$).

i. $\epsilon$ Decay: Linearly decay $\epsilon$.

j. Termination: If $s_{t+1}$ is terminal (e.g., invalid molecule or max steps reached), reset the environment.

Evaluation:

- Run the trained agent with $\epsilon=0.0$ (greedy policy) on a test set of starting molecules.

- Record the final penalized LogP scores and the structural pathways of optimization.

Protocol 2: Assessing Exploration Efficiency via Chemical Space Coverage

Objective: Quantify the diversity of molecules generated during training under different exploration strategies.

Procedure:

- Train two MolDQN agents for 500,000 steps: Agent A with $\epsilon$-greedy, Agent B with Boltzmann exploration.

- At intervals of 50,000 steps, save a snapshot of the agent's policy and run it from a fixed set of 100 seed molecules for 10 steps each.

- For each collected set of 100 final molecules (one per seed), calculate:

- Average Pairwise Tanimoto Similarity: Using Morgan fingerprints.

- Unique Scaffold Ratio: Number of unique Bemis-Murcko scaffolds / total molecules.

- Plot these metrics vs. training steps to visualize how exploration strategy affects chemical space coverage over time.
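Both diversity metrics reduce to simple set arithmetic once fingerprints and scaffolds are available. The sketch below assumes each fingerprint is given as the set of its on-bit indices and each Bemis-Murcko scaffold as a canonical SMILES string, both computed beforehand (for example with RDKit). Treating two empty fingerprints as identical is a choice made for this sketch.

```python
from itertools import combinations
from typing import List, Sequence, Set


def tanimoto(a: Set[int], b: Set[int]) -> float:
    """|A & B| / |A | B| on sets of on-bit indices."""
    union = len(a | b)
    return len(a & b) / union if union else 1.0  # assumption: empty vs. empty = 1.0


def mean_pairwise_tanimoto(fingerprints: Sequence[Set[int]]) -> float:
    """Average Tanimoto similarity over all unordered pairs."""
    pairs = list(combinations(fingerprints, 2))
    if not pairs:
        raise ValueError("need at least two fingerprints")
    return sum(tanimoto(a, b) for a, b in pairs) / len(pairs)


def unique_scaffold_ratio(scaffolds: List[str]) -> float:
    """Number of distinct scaffolds divided by the number of molecules."""
    return len(set(scaffolds)) / len(scaffolds) if scaffolds else 0.0


fps = [{1, 2}, {1, 2}, {3}]
avg_similarity = mean_pairwise_tanimoto(fps)
scaffold_ratio = unique_scaffold_ratio(["c1ccccc1", "c1ccccc1", "C1CCCCC1", "c1ccncc1"])
```

A lower average similarity together with a higher scaffold ratio indicates broader coverage of chemical space at that training snapshot.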

Visualizations

Title: MolDQN Training Loop Architecture

Title: Exploration vs. Exploitation Decision in MolDQN

The Scientist's Toolkit

Table 3: Essential Research Reagents & Software for MolDQN Experiments

| Item Name | Type/Category | Function in MolDQN Research |

|---|---|---|

| RDKit | Open-Source Cheminformatics Library | Core environment for molecule manipulation, fingerprint generation (state representation), and validity checking after each action. |

| OpenAI Gym | API & Toolkit | Provides a standardized interface (env.step(), env.reset()) for defining the molecular MDP, enabling modular agent development. |

| PyTorch / TensorFlow | Deep Learning Framework | Used to construct, train, and evaluate the Deep Q-Network (DQN) and target network models. |

| ZINC Database | Chemical Compound Library | Source of valid, purchasable starting molecules for training and evaluation episodes. |

| Redis / deque | Data Structure | Implementation of the experience replay buffer for storing and sampling transitions (s, a, r, s'). |

| QM Calculation Software (e.g., DFT) | Computational Chemistry | For calculating precise quantum mechanical properties (e.g., dipole moment, HOMO-LUMO gap) as reward signals for target-oriented optimization. |

| Molecular Property Predictors | Pre-trained ML Models (e.g., on QM9) | Provides fast, approximate reward signals (e.g., predicted LogP, SAScore, QED) during training for scalability. |

| TensorBoard / Weights & Biases | Experiment Tracking Tool | Logs training metrics (loss, average reward, epsilon), hyperparameters, and generated molecule structures for analysis. |

Article

The 2019 paper "Optimization of Molecules via Deep Reinforcement Learning" by Zhou et al. introduced MolDQN, a foundational framework for molecule optimization using deep Q-networks (DQN). Within the broader thesis on MolDQN for molecule modification research, this work established the paradigm of treating molecular optimization as a Markov Decision Process (MDP), where an agent sequentially modifies a molecule through discrete, chemically valid actions to maximize a specified reward function.

1. Core Methodological Breakdown & Application Notes

Key MDP Formulation:

- State (s_t): The current molecule represented as a SMILES string.

- Action (a_t): A valid chemical modification from a defined set (e.g., adding or removing a specific atom or bond).

- Reward (r_t): A scalar score combining stepwise penalty (e.g., -0.1 per step) and a final property score (e.g., QED, logP, or a custom docking score) upon reaching a terminal state or exceeding a step limit.

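- Policy (π): Derived from the DQN, which predicts the Q-value (expected cumulative reward) for each possible action given the current state. The agent picks the highest-valued action, with ε-greedy exploration during training.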
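
The reward shape described above can be illustrated with a short sketch. It is a minimal example under stated assumptions, not the reference implementation: the function names `step_reward` and `episode_return` are hypothetical, and the final property score (0.8) is a made-up value standing in for a QED or logP calculation.

```python
from typing import Sequence


def step_reward(property_score: float, is_terminal: bool, step_penalty: float = 0.1) -> float:
    """Reward for one transition: a penalty on every step, plus the property score at the end."""
    reward = -step_penalty
    if is_terminal:
        reward += property_score
    return reward


def episode_return(rewards: Sequence[float], gamma: float = 1.0) -> float:
    """Discounted sum of rewards, the quantity a Q-value is meant to estimate."""
    return sum((gamma ** t) * r for t, r in enumerate(rewards))


# Three-step episode ending in a molecule with an assumed property score of 0.8.
rewards = [step_reward(0.8, is_terminal=(t == 2)) for t in range(3)]
total = episode_return(rewards)
```

With no discounting, the return is the final score minus three step penalties, so shorter paths to the same molecule score higher.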

Experimental Protocols from Zhou et al. (Summarized)

Protocol 1: Benchmarking on Penalized logP Optimization

- Objective: Maximize the penalized octanol-water partition coefficient (logP), a measure of lipophilicity, while applying synthetic accessibility penalties.

- Dataset: ZINC250k (250,000 drug-like molecules).

- Agent Training: The DQN was trained using experience replay and a target network. The state (molecule) was encoded using a fingerprint or a graph neural network.

- Evaluation: Started from 800 randomly selected ZINC molecules. Allowed a maximum of 40 steps. Compared against baseline algorithms (e.g., REINVENT, hill climb).

- Key Metric: Improvement in penalized logP from the starting molecule.

Protocol 2: Targeting a Specific QED Range

- Objective: Modify molecules to achieve a Quantitative Estimate of Drug-likeness (QED) value within a narrow target range (0.85-0.9).

- Reward Function: Defined as the negative absolute difference between the molecule's QED and the target range midpoint (0.875).

- Procedure: Similar training setup as Protocol 1. Performance measured by success rate (percentage of runs reaching the target range) and step efficiency.
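
A minimal sketch of the range-targeting reward from Protocol 2 follows. The QED value is passed in as a plain float; in practice it would come from a cheminformatics toolkit. The function names are illustrative assumptions.

```python
TARGET_LOW, TARGET_HIGH = 0.85, 0.90
TARGET_MID = (TARGET_LOW + TARGET_HIGH) / 2  # midpoint of the target range, 0.875


def qed_target_reward(qed: float) -> float:
    """Negative absolute distance to the midpoint: 0 is best, more negative is worse."""
    return -abs(qed - TARGET_MID)


def in_target_range(qed: float) -> bool:
    """True when the molecule counts as a success for this task."""
    return TARGET_LOW <= qed <= TARGET_HIGH
```

Note that the reward keeps increasing inside the range as QED approaches 0.875, while the success criterion only checks membership in the range.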

Table 1: Key Quantitative Results from Zhou et al.

| Benchmark Task | Start Molecule Avg. Score | MolDQN Optimized Avg. Score | % Improvement | Key Comparative Result |

|---|---|---|---|---|

| Penalized logP (ZINC Test) | ~2.5 | ~7.9 | ~216% | Outperformed REINVENT (5.9) and Hill Climb (5.2). |

| QED Targeting Success Rate | N/A | 75.6% | N/A | Significantly higher than rule-based & other RL baselines. |

2. Visualization of the MolDQN Framework

Title: MolDQN Reinforcement Learning Cycle for Molecule Optimization

3. The Scientist's Toolkit: Key Research Reagent Solutions

Table 2: Essential Components for MolDQN-Based Research

| Component / "Reagent" | Function / Purpose | Example/Note |

|---|---|---|

| Chemical Action Set | Defines the permissible, chemically valid modifications the agent can perform. | E.g., {Add a single/double bond between atoms X & Y, Add a carbon atom, Change atom type}. |

| Molecular Representation | Encodes the molecule (state) for input to the neural network. | Extended-Connectivity Fingerprints (ECFP), Graph Neural Network (GNN) embeddings. |

| Reward Function | The objective the agent learns to maximize. Critically defines research goals. | Combined score: Property (e.g., docking score, QED) + Step penalty + Validity penalty. |

| Property Prediction Model | Often used as a fast surrogate for expensive computational or experimental assays. | Pre-trained models for logP, solubility, binding affinity (e.g., Random Forest, CNN on graphs). |

| Experience Replay Buffer | Stores past (state, action, reward, next state) tuples. Stabilizes DQN training. | Random sampling from this buffer breaks temporal correlations in updates. |

| Chemical Checker & Validator | Ensures every intermediate molecule is chemically plausible and valid. | RDKit library's sanitization functions are integral to the environment. |

| Benchmark Molecule Set | Standardized starting points for fair evaluation and comparison of algorithms. | ZINC250k, Guacamol benchmark datasets. |
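
The experience replay buffer listed in the table can be sketched with the standard library alone. This is an illustrative version: the `Transition` tuple, the `done` flag, and the class name are assumptions added for the example rather than details taken from the paper.

```python
import random
from collections import deque, namedtuple

Transition = namedtuple("Transition", ["state", "action", "reward", "next_state", "done"])


class ReplayBuffer:
    """Fixed-capacity store of transitions with uniform random sampling."""

    def __init__(self, capacity=100_000, seed=None):
        self._buffer = deque(maxlen=capacity)  # oldest transitions are evicted first
        self._rng = random.Random(seed)

    def push(self, state, action, reward, next_state, done=False):
        self._buffer.append(Transition(state, action, reward, next_state, done))

    def sample(self, batch_size):
        # Uniform sampling without replacement breaks the temporal ordering of an episode.
        return self._rng.sample(list(self._buffer), batch_size)

    def __len__(self):
        return len(self._buffer)


buffer = ReplayBuffer(capacity=3, seed=0)
for i in range(5):
    buffer.push(f"s{i}", "add_C", 0.0, f"s{i + 1}")
batch = buffer.sample(2)
```

After five pushes into a capacity-3 buffer only the three most recent transitions remain, which is the eviction behavior a bounded buffer is expected to have.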

4. Impact & Evolution in Molecular Design

The impact of Zhou et al. is profound. It demonstrated that RL could drive efficient exploration of chemical space de novo without requiring pre-enumerated libraries. This directly enabled subsequent research in:

- Multi-objective optimization: Simultaneously optimizing for potency, selectivity, and ADMET properties.

- Incorporating sophisticated predictors: Using fine-tuned GNNs or docking simulations as part of the reward function.

- Template-based drug design: Constraining actions within specific scaffold frameworks.

The core protocols and MDP formulation remain standard, though modern implementations often replace the DQN with more advanced actors (e.g., Policy Gradient methods) and use more powerful GNNs for state representation. The paper's true legacy is providing a robust, scalable, and flexible computational framework for goal-directed molecular generation, now a cornerstone of AI-driven drug discovery.

Why MolDQN? Advantages Over Traditional Virtual Screening and Generative Models

Within the broader thesis on MolDQN (Molecular Deep Q-Network) for molecule modification research, this document provides application notes and protocols. MolDQN is a reinforcement learning (RL) framework that formulates molecular optimization as a Markov Decision Process (MDP), where an agent iteratively modifies a molecule to maximize a reward function (e.g., quantitative estimate of drug-likeness, binding affinity). It represents a paradigm shift from traditional methods by enabling goal-directed, sequential discovery.

Table 1: Comparative Analysis of Molecular Discovery Approaches

| Feature | Traditional Virtual Screening (VS) | Generative Models (e.g., VAEs, GANs) | MolDQN (RL Framework) |

|---|---|---|---|

| Core Principle | Selection from a static, pre-enumerated library. | Learning data distribution & sampling novel structures. | Sequential, goal-oriented decision-making. |

| Exploration Capability | Limited to library diversity. | High novelty, but often unguided. | Directed exploration towards a specified reward. |

| Optimization Strategy | One-step ranking/filtering. | Latent space interpolation/arithmetic. | Multi-step, iterative optimization of a lead. |

| Objective Incorporation | Post-hoc scoring; objectives not learned. | Implicit via training data; hard to steer explicitly. | Explicit, flexible reward function (multi-objective possible). |

| Sample Efficiency | High (evaluates existing compounds). | Moderate (requires large datasets). | High for optimization (focuses on promising regions). |

| Interpretability of Path | None. | Low (black-box generation). | Provides optimization trajectory (action sequence). |

| Key Limitation | Cannot propose novel scaffolds outside library. | May generate unrealistic or non-optimizable compounds. | Sparse reward design; action space definition. |

Table 2: Benchmark Performance on DRD2 Activity Optimization (ZINC Starting Set)

| Method | % Valid Molecules | % Novel (vs. ZINC) | Success Rate* | Avg. Improvement in Reward |

|---|---|---|---|---|

| MolDQN (Original) | 99.8% | 100% | 0.91 | +0.49 |

| SMILES-based VAE | 95.2% | 100% | 0.04 | +0.05 |

| Graph-based GA | 100% | 100% | 0.31 | +0.20 |

*Success: achieving a reward > 0.5 (active) within a limited number of steps.

Detailed Experimental Protocols

Protocol 3.1: Implementing a MolDQN Agent for QED Optimization

Objective: To optimize the Quantitative Estimate of Drug-likeness (QED) of a starting molecule using a MolDQN agent.

Materials & Software:

- Python (≥3.8)

- RDKit, PyTorch, OpenAI Gym, DeepChem

- Pre-trained proxy model for reward prediction (optional)

- Dataset of molecules for initial state (e.g., ZINC)

Procedure:

- Define the MDP:

- State (s): Molecular graph representation (e.g., Morgan fingerprint or atom/bond matrix).

- Action (a): Define a set of permissible chemical modifications (e.g., add/remove a bond, change atom type, add a small fragment). Example action space size: ~10-20 valid actions.

- Reward (r): `R(s) = QED(s) - QED(s_initial)` for the terminal step, else 0. A penalty for invalid actions can be included.

- Transition: Apply action `a` to state `s` deterministically to get the new molecule `s'`.

- Initialize Networks:

- Create a Q-network `Q(s,a; θ)` with 3-5 fully connected layers. Input is a concatenated vector of state and action features.

- Initialize a target network `Q'(s,a; θ')` with identical architecture.

- Use an experience replay buffer (capacity ~10⁵-10⁶ transitions).

- Training Loop (for N episodes):

a. Initialize: Start with a random molecule `s0` from the dataset.

b. For each step t (max T steps):

i. With probability ε (decaying), select a random action `a_t`. Otherwise, select `a_t = argmax_a Q(s_t, a; θ)`.

ii. Apply `a_t` to `s_t` to obtain `s_{t+1}`. Calculate reward `r_t`.

iii. Store transition `(s_t, a_t, r_t, s_{t+1})` in the replay buffer.

iv. Sample a random minibatch of transitions from the buffer.

v. Compute target: `y_j = r_j + γ * max_{a'} Q'(s_{j+1}, a'; θ')`.

vi. Update θ by minimizing the loss: `L(θ) = Σ_j (y_j - Q(s_j, a_j; θ))^2`.

vii. Every C steps, update the target network: `θ' ← τθ + (1-τ)θ'`.

viii. If `s_{t+1}` is terminal (or T is reached), end the episode.

- Evaluation: Run the trained policy greedily (ε=0) on a test set of starting molecules and record the final QED values and trajectories.
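
The target and loss computations in the training loop can be written out in a few lines. The sketch below uses plain Python callables in place of neural networks; the toy `q_target` function, the action names, and the `done` flag (which drops the bootstrap term on terminal transitions, a common DQN convention) are assumptions made for illustration.

```python
def td_targets(batch, q_target, actions, gamma=0.99):
    """y_j = r_j + gamma * max_a' Q'(s_{j+1}, a'), or just r_j on terminal transitions."""
    targets = []
    for state, action, reward, next_state, done in batch:
        if done:
            targets.append(reward)
        else:
            best_next = max(q_target(next_state, a) for a in actions)
            targets.append(reward + gamma * best_next)
    return targets


def squared_error_loss(batch, targets, q_online):
    """Sum over the minibatch of (y_j - Q(s_j, a_j))^2."""
    return sum((y - q_online(s, a)) ** 2 for (s, a, *_), y in zip(batch, targets))


# Toy stand-ins: states are SMILES strings, Q-functions are hand-written callables.
actions = ("add_C", "add_O")
q_target = lambda s, a: 0.1 * len(s) + (0.5 if a == "add_C" else 0.0)
q_online = lambda s, a: 0.0
batch = [("C", "add_C", 0.0, "CC", False), ("CC", "add_O", 0.7, "CCO", True)]

targets = td_targets(batch, q_target, actions, gamma=0.9)
loss = squared_error_loss(batch, targets, q_online)
```

In a real agent `q_online` and `q_target` would be network forward passes, and the loss would be minimized by a gradient-based optimizer rather than merely evaluated.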

Protocol 3.2: Benchmarking vs. Generative Model (SMILES VAE)

Objective: To compare the optimization efficiency of MolDQN against a generative model baseline.

Procedure:

- Train a SMILES VAE:

- Train a Variational Autoencoder (VAE) on a corpus of drug-like SMILES strings.

- Learn a smooth latent space `z`.

- Latent Space Optimization:

- Encode a start molecule `s0` to `z0`.

- Use a Bayesian Optimizer (BO) to propose new latent points `z'` predicted to increase the reward (QED).

- Decode `z'` to a molecule `s'`, compute reward.

- Iterate for N_BO steps.

- Comparison Metrics:

- Run MolDQN (Protocol 3.1) and VAE+BO for identical number of total reward function calls.

- Plot the best reward achieved vs. number of calls (sample efficiency curve).

- Record the validity rate of proposed molecules and their novelty.
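
The sample efficiency curve in the comparison metrics is a running maximum over the sequence of reward evaluations. A minimal sketch follows, with made-up reward values and hypothetical helper names.

```python
from itertools import accumulate


def best_so_far(rewards):
    """Best reward seen after each reward-function call (the y-values of the curve)."""
    return list(accumulate(rewards, max))


def calls_to_reach(rewards, threshold):
    """Number of calls needed before the best reward first reaches the threshold, or None."""
    for n_calls, best in enumerate(best_so_far(rewards), start=1):
        if best >= threshold:
            return n_calls
    return None


# Illustrative reward sequence from one optimization run (values are invented).
run_rewards = [0.3, 0.5, 0.4, 0.7, 0.6]
curve = best_so_far(run_rewards)
```

Plotting `curve` against the call index for each method, with the same total call budget, gives a like-for-like comparison.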

Visualization

Diagram 1: MolDQN Framework MDP Workflow

Diagram 2: MolDQN vs. Virtual Screening & Generative Models

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Components for a MolDQN Research Pipeline

| Item / Solution | Function in Experiment | Notes / Specification |

|---|---|---|

| RDKit | Core cheminformatics toolkit for molecule manipulation, fingerprint generation, and QED/SA calculation. | Open-source. Used for state representation, action validation, and reward computation. |

| PyTorch / TensorFlow | Deep learning framework for constructing and training the Q-Network and target networks. | Enables automatic differentiation and GPU acceleration. |

| OpenAI Gym Environment | Customizable framework to define the molecular MDP (states, actions, rewards). | Provides standardized API for agent-environment interaction. |

| DeepChem | Library for molecular ML. Provides featurizers (e.g., GraphConv) and potential pre-trained reward models. | Useful for complex reward functions like predicted binding affinity. |

| Experience Replay Buffer | Data structure storing past transitions (s, a, r, s') to decorrelate training samples. | Implement with fixed capacity (e.g., 100k transitions) and random sampling. |

| ε-Greedy Scheduler | Balances exploration (random action) and exploitation (best predicted action). | ε typically decays from 1.0 to ~0.01 over training. |

| Molecular Action Set | Pre-defined, chemically plausible modifications (e.g., from literature). | Critical for ensuring validity. Example: "Add a carbonyl group," "Remove a methyl." |

| Reward Function Proxy | (Optional) A pre-trained predictive model (e.g., for solubility, activity) used as a reward signal. | Allows optimization for properties without expensive simulation at every step. |
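
The ε-greedy scheduler row can be made concrete with a short sketch. A linear decay is assumed here for simplicity (exponential schedules are also common), and the function names are illustrative.

```python
import random


def linear_epsilon(step, total_steps, eps_start=1.0, eps_end=0.01):
    """Linearly interpolate epsilon from eps_start to eps_end over total_steps."""
    frac = min(max(step / total_steps, 0.0), 1.0)
    return eps_start + frac * (eps_end - eps_start)


def epsilon_greedy(q_values, epsilon, rng=random):
    """Random action index with probability epsilon, otherwise the highest-valued one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=q_values.__getitem__)


action = epsilon_greedy([0.1, 0.9, 0.3], epsilon=0.0)
```

Setting `epsilon=0.0` recovers the purely greedy policy used at evaluation time.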

Building and Applying MolDQN: A Step-by-Step Guide to Optimizing Molecules

This protocol details the operational pipeline for MolDQN, a deep Q-network (DQN) framework for de novo molecular design and optimization. Within the broader thesis on "Reinforcement Learning for Rational Molecule Design," MolDQN represents a pivotal methodology that formulates molecular modification as a Markov Decision Process (MDP). The agent learns to perform chemically valid actions (e.g., adding or removing atoms/bonds) to optimize a given reward function, typically a quantitative estimate of a drug-relevant property. This document provides application notes and step-by-step protocols for implementing the MolDQN pipeline, from initial configuration to candidate generation.

Core Pipeline Architecture & Workflow

The MolDQN pipeline integrates molecular representation, reinforcement learning, and chemical validity checks into a cohesive workflow.

Diagram Title: MolDQN Reinforcement Learning Cycle

Detailed Stage Protocols

Protocol 2.1.1: State Representation Generation

- Objective: Convert a SMILES string into a fixed-length numerical vector for DQN input.

- Materials: RDKit (v2023.x.x or later), NumPy.

- Procedure:

- Sanitize the input SMILES string using `rdkit.Chem.MolFromSmiles()` with `sanitize=True`.

- Generate a Morgan circular fingerprint using `rdkit.Chem.AllChem.GetMorganFingerprintAsBitVect()`.

- Key Parameters: Radius=3, nBits=2048. These values balance specificity and computational efficiency.

- Convert the bit vector to a NumPy array of dtype `float32`. This array is the state `s_t`.

Protocol 2.1.2: Action Space Definition

- Objective: Define a set of chemically valid modifications the agent can perform.

- Materials: RDKit, predefined action dictionary.

- Procedure:

- The action space is typically discretized. A common set includes:

- Atom Addition: Append a new atom (C, N, O, F, etc.) with a single bond to an existing atom.

- Bond Addition: Increase bond order (single->double, double->triple) between two existing atoms, respecting valency.

- Bond Removal: Decrease bond order or remove a bond entirely.

- Each action is coupled with a validity check using RDKit's `SanitizeMol` to ensure the resulting molecule is chemically plausible. Invalid actions are masked by setting their Q-value to -∞.
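
Masking invalid actions with -∞ can be sketched as follows. The validity mask is passed in as a list of booleans; in a real pipeline it would be produced by the chemistry validity check. The function names are hypothetical.

```python
import math


def mask_invalid(q_values, valid_mask):
    """Replace the Q-value of every invalid action with -inf so it can never be the argmax."""
    return [q if ok else -math.inf for q, ok in zip(q_values, valid_mask)]


def greedy_valid_action(q_values, valid_mask):
    """Index of the best action among the valid ones."""
    if not any(valid_mask):
        raise ValueError("no valid action available for this state")
    masked = mask_invalid(q_values, valid_mask)
    return max(range(len(masked)), key=masked.__getitem__)


# Action 1 has the highest raw Q-value but is chemically invalid, so action 0 is chosen.
chosen = greedy_valid_action([2.0, 5.0, 1.0], [True, False, True])
```

The explicit check for an all-invalid mask avoids silently returning an action whose value is -∞.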

Protocol 2.1.3: Reward Function Computation

- Objective: Calculate a scalar reward `r_t` that guides the agent toward desired molecular properties.

- Materials: Property calculation scripts (e.g., for QED, SAScore, Docking), NumPy.

- Procedure:

- For the new state molecule `s_{t+1}`, compute one or more objective metrics.

- Combine metrics into a single reward. A common multi-objective reward is: `r_t = w1 * QED(s_{t+1}) + w2 * [ -SAScore(s_{t+1}) ] + w3 * pIC50_prediction(s_{t+1})`

- Penalization: Apply a small step penalty (e.g., -0.05 per step) to encourage shorter synthetic paths. Assign a large negative reward (e.g., -1) for invalid actions or molecules.
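
The reward combination above can be sketched with precomputed property values. The weights `(1.0, 0.1, 0.1)` are placeholder assumptions chosen only to put the three terms on comparable scales; the function name is hypothetical.

```python
def multi_objective_reward(qed, sa_score, pic50_pred, is_valid=True,
                           weights=(1.0, 0.1, 0.1), step_penalty=0.05,
                           invalid_reward=-1.0):
    """Weighted sum of QED, negated SA score, and predicted pIC50, minus a step penalty."""
    if not is_valid:
        return invalid_reward  # large negative reward for invalid actions or molecules
    w1, w2, w3 = weights
    return w1 * qed + w2 * (-sa_score) + w3 * pic50_pred - step_penalty


r = multi_objective_reward(qed=0.8, sa_score=3.0, pic50_pred=7.0)
```

Because QED lies in [0, 1] while SA score and pIC50 span roughly 1 to 10, unweighted sums would be dominated by the latter two, which is why the weights matter.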

Experimental Training Protocol

Protocol 3.1: MolDQN Agent Training

- Objective: Train the DQN to learn an optimal policy for molecule optimization.

- Materials: PyTorch or TensorFlow, RDKit, Replay Buffer memory structure.

- Network Architecture: A standard architecture comprises 3-4 fully connected layers with ReLU activation. Input layer size matches fingerprint length (2048). Output layer size matches the number of defined actions.

- Training Loop:

- Initialize Q-network (`Q_online`) and target network (`Q_target`). Set `Q_target = Q_online`.

- For each episode, start with a random valid molecule.

- For each step `t` in the episode:

- Select action `a_t` using an epsilon-greedy policy based on `Q_online(s_t)`.

- Apply action, get new state `s_{t+1}` and reward `r_t`.

- Store transition `(s_t, a_t, r_t, s_{t+1})` in the replay buffer.

- Sample a random minibatch (size=128) from the buffer.

- Compute target Q-values: `y_j = r_j + γ * max_a' Q_target(s_{j+1}, a')`.

- Update `Q_online` by minimizing the Mean Squared Error (MSE) loss between `Q_online(s_j, a_j)` and `y_j`.

- Every C steps (e.g., 100), update `Q_target = Q_online`.

- Decay epsilon from 1.0 to 0.01 over the course of training.
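
To show how the steps of this loop fit together, here is a deliberately small, self-contained sketch. It makes strong simplifying assumptions: the "molecule" is an integer state, the two actions move it down or up, and a lookup table stands in for the neural network, so the MSE gradient step becomes a tabular update. All names and constants other than the hard-update period C=100 are hypothetical (the minibatch is 16 rather than 128 to suit the tiny problem).

```python
import random
from collections import deque

N_STATES, ACTIONS = 6, (0, 1)   # toy stand-in: integer states, actions 0 = down, 1 = up
GAMMA, LR, BATCH, SYNC_EVERY, MAX_STEPS = 0.9, 0.1, 16, 100, 5


def env_step(state, action):
    """Deterministic toy transition; reward grows with the new state, minus a step penalty."""
    next_state = min(N_STATES - 1, state + 1) if action == 1 else max(0, state - 1)
    return next_state, next_state / (N_STATES - 1) - 0.05


def train(episodes=300, seed=0):
    rng = random.Random(seed)
    q_online = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    q_target = dict(q_online)                      # Q_target = Q_online
    buffer = deque(maxlen=10_000)
    total_steps = 0
    for episode in range(episodes):
        epsilon = max(0.01, 1.0 - episode / (0.8 * episodes))  # decay from 1.0 to 0.01
        state = rng.randrange(N_STATES)            # random starting "molecule"
        for _ in range(MAX_STEPS):
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q_online[(state, a)])
            next_state, reward = env_step(state, action)
            buffer.append((state, action, reward, next_state))
            for s, a, r, s2 in rng.sample(list(buffer), min(BATCH, len(buffer))):
                y = r + GAMMA * max(q_target[(s2, b)] for b in ACTIONS)
                # Tabular analogue of one gradient step on the squared error (y - Q)^2.
                q_online[(s, a)] += LR * (y - q_online[(s, a)])
            total_steps += 1
            if total_steps % SYNC_EVERY == 0:
                q_target = dict(q_online)          # hard update every C steps
            state = next_state
    return q_online


q = train()
greedy_policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES)]
```

In this toy problem the learned greedy policy should prefer the "up" action in every state, since higher states always pay more. A real MolDQN agent replaces the table with a network over fingerprints and the integer state with a molecule.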

Quantitative Performance Benchmarks

Table 1: Benchmarking MolDQN Against Other Molecular Optimization Methods. Performance metrics are averaged over benchmark tasks such as penalized LogP optimization and QED improvement.

| Method | Avg. Improvement (Penalized LogP) | Success Rate (% reaching target) | Computational Cost (GPU-hr) | Chemical Validity (%) |

|---|---|---|---|---|

| MolDQN | 4.32 ± 0.15 | 95.2% | 48 | 100% |

| REINVENT | 3.95 ± 0.21 | 89.7% | 52 | 100% |

| GraphGA | 4.05 ± 0.18 | 78.3% | 12 | 100% |

| JT-VAE | 2.94 ± 0.23 | 65.1% | 36 | 100% |

| SMILES LSTM | 3.12 ± 0.29 | 71.4% | 24 | 98.5% |

Table 2: Typical Optimization Results for Drug-like Properties (10-epoch run). Runs start from a common scaffold (e.g., benzene).

| Target Property | Initial Value | Optimized Value (Mean) | Best Candidate in Run | Key Structural Change Observed |

|---|---|---|---|---|

| QED | 0.47 | 0.92 ± 0.04 | 0.95 | Addition of saturated ring, amine group |

| Penalized LogP | 1.22 | 5.18 ± 0.31 | 5.87 | Addition of long aliphatic chain, halogen |

| Synthetic Accessibility (SA) | 2.9 | 2.1 ± 0.3 | 1.8 | Simplification, reduction of stereocenters |

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Software & Libraries for MolDQN Implementation

| Item Name | Version/Example | Function in the Pipeline |

|---|---|---|

| RDKit | 2023.09.5 | Core cheminformatics: SMILES parsing, fingerprinting, substructure search, validity checks. |

| PyTorch / TensorFlow | 2.0+ | Deep learning framework for building, training, and deploying the DQN agent. |

| OpenAI Gym | 0.26.2 | (Optional) Provides a standardized environment API for defining the molecular MDP. |

| NumPy & Pandas | 1.24+ / 2.0+ | Numerical computation and data handling for fingerprints, rewards, and results logging. |

| Molecular Docking Suite (e.g., AutoDock Vina) | 1.2.x | For advanced reward functions based on predicted binding affinity to a protein target. |

| Property Calculation Tools (e.g., mordred) | 1.2.0 | Calculate >1800 molecular descriptors for complex, multi-parameter reward functions. |

Candidate Optimization & Validation Workflow

This final protocol describes the end-to-end process from initiating a run to validating the output.

Diagram Title: End-to-End MolDQN Optimization and Validation

Protocol 6.1: Post-Generation Filtering & Validation

- Objective: Apply drug-like filters and advanced validation to generated candidates.

- Materials: RDKit, PAINS filter definitions, ADMET prediction models (e.g., ADMETlab), docking software.

- Procedure:

- Filtering: Pass all generated candidates through standard filters:

- Synthetic Accessibility (SA) Score < 6.

- Pan-Assay Interference Compounds (PAINS) filter.

- Lipinski's Rule of Five (with appropriate thresholds for the target).

- Cluster: Cluster remaining molecules by structural fingerprint (Tanimoto similarity) to ensure diversity.

- In-silico Validation: Perform molecular docking against the target protein for the top representatives from each cluster. Rank final candidates by a composite score of the original reward and docking score.

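
The clustering step can be illustrated with fingerprints represented as sets of on-bit indices. The sketch below uses greedy leader clustering, a simple technique swapped in for illustration (the protocol does not name a specific algorithm). The fingerprints and the 0.5 threshold are invented for the example.

```python
def tanimoto(bits_a, bits_b):
    """Tanimoto similarity between two fingerprints given as sets of on-bit indices."""
    union = len(bits_a | bits_b)
    return len(bits_a & bits_b) / union if union else 1.0


def leader_cluster(fingerprints, threshold=0.5):
    """Assign each molecule to the first cluster whose leader is at least `threshold` similar."""
    clusters = []  # each cluster is a list of indices; the first index is the leader
    for i, fp in enumerate(fingerprints):
        for cluster in clusters:
            if tanimoto(fp, fingerprints[cluster[0]]) >= threshold:
                cluster.append(i)
                break
        else:
            clusters.append([i])  # no similar leader found: start a new cluster
    return clusters


fps = [{1, 2, 3, 4}, {1, 2, 3, 5}, {10, 11, 12}, {10, 11, 13}]
clusters = leader_cluster(fps, threshold=0.5)
representatives = [c[0] for c in clusters]
```

Taking one representative per cluster for docking keeps the validated set structurally diverse. The result of leader clustering depends on input order, so candidates are usually sorted by reward first.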

Within the broader thesis on MolDQN (Molecule Deep Q-Network) for de novo molecular design and optimization, representation and featurization are the foundational steps. MolDQN, a reinforcement learning framework, iteratively modifies molecular structures to optimize desired properties. The choice of molecular encoding directly impacts the network's ability to learn valid chemical transformations, explore the chemical space efficiently, and generate synthetically accessible candidates. This document details the prevalent encoding schemes, their application within MolDQN-like pipelines, and associated experimental protocols.

Molecular Representation Schemes: A Quantitative Comparison

Table 1: Comparison of Primary Molecular Encoding Methods

| Method | Representation | Dimensionality | Information Captured | Suitability for MolDQN | Key Advantages | Key Limitations |

|---|---|---|---|---|---|---|

| SMILES | Linear string (e.g., CC(=O)O for acetic acid) | Variable length (1D) | Atom identity, bond order, basic branching/rings. | Moderate. Simple for RNN-based agents, but validity can be an issue. | Human-readable, compact, vast existing corpora. | Non-unique, fragile (small changes can break syntax), poor capture of 3D/topological similarity. |

| Molecular Graph | Graph G=(V, E) where V=atoms, E=bonds. | Node features: natoms x f, Edge features: nbonds x g. | Full topology, atom/ bond features, functional groups. | High. Natural for graph neural network (GNN) agents to predict bond/node edits. | Directly encodes structure, invariant to permutation, rich featurization. | Computationally heavier, variable-sized input. |

| Molecular Fingerprint | Fixed-length bit/ integer vector (e.g., 1024-bit). | Fixed (e.g., 2048). | Presence of predefined or learned substructures/ paths. | High for policy/value networks. Used as state descriptor in original MolDQN. | Fixed dimension, fast similarity search, well-established. | Information loss, dependent on design (e.g., radius for ECFP). |

| 3D Conformer | Atomic coordinates & types (Point Cloud/Grid). | n_atoms x 3 (coordinates) + features. | Stereochemistry, conformational shape, electrostatic fields. | Low for dynamic modification; high for property prediction within pipeline. | Critical for binding affinity prediction. | Multiple conformers per molecule, alignment sensitivity, high computational cost. |

Experimental Protocols for Featurization

Protocol 3.1: Generating Extended-Connectivity Fingerprints (ECFPs) for MolDQN State Representation

Objective: Convert a molecule into a fixed-length ECFP4 bit vector for use as the state input to the Deep Q-Network.

Reagents & Software: RDKit (Python), NumPy.

Procedure:

- Input: A sanitized molecule object `mol` (e.g., from SMILES).

- Parameter Definition: Set fingerprint length (`nBits=2048`), radius for atom environments (`radius=2`), and use features (`useFeatures=False` for ECFP, `True` for FCFP).

- Fingerprint Generation: Use `rdkit.Chem.AllChem.GetMorganFingerprintAsBitVect(mol, radius=radius, nBits=nBits)`.

- Output: A 2048-bit vector (e.g., as a NumPy array) representing the molecule. In MolDQN, this vector is the state `s_t`.

Protocol 3.2: Graph Construction for a Graph Neural Network (GNN)-Based Agent

Objective: Represent a molecule as a featurized graph for a GNN-based policy network.

Reagents & Software: RDKit, PyTorch Geometric (PyG) or DGL.

Procedure:

- Node (Atom) Featurization: For each atom, create a feature vector including:

- Atomic number (one-hot: H, C, N, O, F, etc.)

- Degree (one-hot: 0-5)

- Formal charge (integer)

- Hybridization (one-hot: SP, SP2, SP3)

- Aromaticity (binary)

- (Optional) Number of attached hydrogens.

- Edge (Bond) Featurization: For each bond, create a feature vector including:

- Bond type (one-hot: single, double, triple, aromatic)

- Conjugation (binary)

- (Optional) Stereochemistry.

- Adjacency Matrix: Construct a sparse adjacency matrix (or edge index list) representing connectivity.

- Output: A `Data` object (in PyG) containing `x` (node features), `edge_index`, and `edge_attr`.
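
The featurization steps above can be sketched without any chemistry library by describing the molecule by hand. The example builds the three arrays as plain Python lists for the heavy atoms of acetic acid. The reduced feature set (element and degree only), the `build_graph` name, and the dictionary output are simplifying assumptions; a real pipeline would read atoms and bonds from RDKit and wrap the arrays in tensors.

```python
ATOM_TYPES = ["H", "C", "N", "O", "F"]
BOND_TYPES = ["single", "double", "triple", "aromatic"]
MAX_DEGREE = 5


def one_hot(value, choices):
    """One-hot encode `value` against an ordered collection of choices."""
    return [1.0 if value == c else 0.0 for c in choices]


def build_graph(atoms, bonds):
    """atoms: list of element symbols; bonds: list of (i, j, bond_type) tuples."""
    degree = [0] * len(atoms)
    for i, j, _ in bonds:
        degree[i] += 1
        degree[j] += 1
    # Node features: element one-hot followed by degree one-hot (0..MAX_DEGREE).
    x = [one_hot(sym, ATOM_TYPES) + one_hot(deg, range(MAX_DEGREE + 1))
         for sym, deg in zip(atoms, degree)]
    src, dst, edge_attr = [], [], []
    for i, j, bond_type in bonds:
        for a, b in ((i, j), (j, i)):  # each undirected bond is stored in both directions
            src.append(a)
            dst.append(b)
            edge_attr.append(one_hot(bond_type, BOND_TYPES))
    return {"x": x, "edge_index": [src, dst], "edge_attr": edge_attr}


# Acetic acid, CC(=O)O, heavy atoms only; degree counts heavy-atom neighbors.
graph = build_graph(["C", "C", "O", "O"],
                    [(0, 1, "single"), (1, 2, "double"), (1, 3, "single")])
```

The `edge_index` layout, two parallel lists of source and destination indices, follows the coordinate format that graph libraries such as PyG use for sparse connectivity.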

Protocol 3.3: SMILES Enumeration and Canonicalization for Dataset Preparation

Objective: Prepare a standardized set of SMILES strings for training a SMILES-based RNN agent or a molecular property predictor.

Reagents & Software: RDKit.

Procedure:

- Input: A list of raw SMILES strings (may be non-canonical or have varying tautomers).

- Parsing & Sanitization: Use `rdkit.Chem.MolFromSmiles()` with `sanitize=True`. Discard molecules that fail parsing.

- Canonicalization: For each valid molecule, generate the canonical SMILES using `rdkit.Chem.MolToSmiles(mol, isomericSmiles=True, canonical=True)`.

- Optional Augmentation: For data augmentation, generate randomized SMILES equivalents using `rdkit.Chem.MolToSmiles(mol, doRandom=True, isomericSmiles=True)`.

- Output: A list of canonical SMILES strings for reliable model training.

Visualization of Encoding Workflows in MolDQN

Title: MolDQN Molecular Encoding and Modification Loop

Title: MolDQN State-Action Flow with Fingerprint Encoding

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Tools for Molecular Featurization in Deep Learning

| Item / Software | Category | Primary Function in Encoding | Typical Use Case |

|---|---|---|---|

| RDKit | Open-Source Cheminformatics Library | Core toolkit for parsing SMILES, generating fingerprints, graph construction, and molecular operations. | Protocol 3.1, 3.2, 3.3. Universal preprocessing. |

| PyTorch Geometric (PyG) | Deep Learning Library | Efficient implementation of Graph Neural Networks (GNNs) for processing molecular graphs in batch. | Building GNN-based agents for MolDQN. |

| Deep Graph Library (DGL) | Deep Learning Library | Alternative to PyG for building and training GNNs on molecular graphs. | GNN-based property prediction and RL. |

| OEChem (OpenEye) | Commercial Cheminformatics Toolkit | High-performance molecular toolkits, often with superior fingerprint and shape-based methods. | High-throughput production featurization. |

| NumPy/SciPy | Scientific Computing | Handling numerical arrays, sparse matrices, and performing linear algebra operations on feature vectors. | Manipulating fingerprint vectors and model inputs. |

| Pandas | Data Analysis | Managing datasets of molecules, their features, and associated properties in tabular format. | Organizing training/validation datasets. |

| Standardizer (e.g., ChEMBL) | Tautomer/Charge Tool | Standardizes molecules to a consistent representation (tautomer, charge model), crucial for reliable encoding. | Dataset curation before featurization. |

| 3D Conformer Generator (e.g., OMEGA, RDKit ETKDG) | Conformational Sampling | Generates realistic 3D conformations for molecules required for 3D-based featurization methods. | Creating inputs for 3D-CNN or structure-based models. |

Within the thesis on MolDQN (Molecular Deep Q-Network) for de novo molecular design and optimization, the Q-network architecture is the central engine. This protocol details the design principles, data flow, and experimental validation for constructing a Q-network that predicts the expected cumulative reward of modifying a molecule with a specific action, guiding an agent toward molecules with optimized properties.

Core Q-Network Architecture & Data Flow

The Q-network in MolDQN maps a representation of the current molecular state (S) and a possible modification action (A) to a Q-value, estimating the long-term desirability of that action.

Architectural Components

Input Representation:

- Molecular Graph (State S): Represented as an adjacency tensor (A) and a node feature matrix (X). A ∈ {0, 1}^{n x n x b}, where n is the number of atoms and b is the bond type count. X ∈ R^{n x d}, where d is the number of atom features (e.g., atomic number, degree, hybridization).

- Action (A): A tuple defining a graph modification. For example: (`action_type`, `atom_id_1`, `atom_id_2`, `new_bond_type`). This is typically one-hot encoded and concatenated to graph-derived features.

Core Neural Network Layers:

- Graph Encoder (e.g., MPNN, GCN): Processes the molecular graph to generate a set of atom-level embeddings and a global graph-level embedding.

- Action Integrator: The action encoding is combined with relevant atom embeddings (e.g., embeddings of the two atoms involved in bond addition).

- State-Action Fusion: The fused representation is passed through fully connected (FC) layers to produce the scalar Q-value.

Output:

- A single scalar Q(S, A), representing the predicted future reward.

Architectural Diagram

Diagram Title: Q-Network Architecture for Molecular State-Action Valuation

Experimental Protocols for Q-Network Training & Evaluation

Protocol 2.1: Off-Policy Training with Experience Replay

Objective: To train the Q-network parameters (θ) by minimizing the Temporal Difference (TD) error using a replay buffer.

Materials: Pre-trained Q-network, replay buffer D populated with transitions (S_t, A_t, R_t, S_{t+1}), target network (θ_target), optimizer (Adam).

Procedure:

- Sample Batch: Randomly sample a mini-batch of `N` transitions from replay buffer `D`.

- Compute Target:

- For each transition, if `S_{t+1}` is terminal: `y_i = R_t`.

- Else: `y_i = R_t + γ * max_{A'} Q_target(S_{t+1}, A'; θ_target)`.

- Compute Loss: Calculate Mean Squared Error (MSE): `L(θ) = 1/N Σ_i (y_i - Q(S_t, A_t; θ))^2`.

- Update Network: Perform backpropagation to update parameters `θ` to minimize `L(θ)`.

- Update Target: Periodically soft-update target network: `θ_target ← τθ + (1-τ)θ_target`.
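
The soft update in the last step is an exponential moving average of the online parameters. A minimal sketch on flat lists of floats follows; real networks apply the same rule tensor by tensor. The function name is illustrative.

```python
def soft_update(theta_target, theta_online, tau=0.01):
    """theta_target <- tau * theta_online + (1 - tau) * theta_target, element-wise."""
    return [tau * w + (1.0 - tau) * w_t for w, w_t in zip(theta_online, theta_target)]


theta_online = [1.0, 1.0]
theta_target = [0.0, 1.0]
updated = soft_update(theta_target, theta_online, tau=0.1)

# With a fixed online network, the gap shrinks by a factor (1 - tau) per update.
gap = 1.0
for _ in range(10):
    theta_target = soft_update(theta_target, theta_online, tau=0.1)
    gap *= 0.9
```

A small τ makes the target network lag the online network, which keeps the regression targets from shifting too quickly between updates.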

Protocol 2.2: Benchmarking on Guacamol/ZINC250k Tasks

Objective: To evaluate the performance of the MolDQN agent powered by the trained Q-network on standard molecular optimization benchmarks.

Materials: Trained MolDQN agent, Guacamol or ZINC250k benchmark suite, RDKit.

Procedure:

- Initialize: Start from a set of defined starting molecules (or random SMILES).

- Run Episode: For each task (e.g., optimize LogP, similarity to Celecoxib), let the agent interact with the environment for a set number of steps (T), using an ε-greedy policy based on the Q-network.

- Record Results: At the end of each episode, record the best molecule found and its property score.

- Calculate Metrics: Compute the score (task-specific property, normalized to [0,1]) and the success rate (fraction of runs achieving a score > threshold).

- Compare: Aggregate results across multiple runs and compare to baseline algorithms (e.g., SMILES GA, REINVENT).
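
The aggregation in the last two steps can be sketched with the standard library. The scores below are invented placeholders, and `summarize_runs` is a hypothetical helper name.

```python
from statistics import mean, stdev


def summarize_runs(scores, threshold):
    """Mean, sample standard deviation, and success rate over repeated runs of one task."""
    return {
        "mean": mean(scores),
        "sd": stdev(scores) if len(scores) > 1 else 0.0,
        "success_rate": sum(s > threshold for s in scores) / len(scores),
    }


# Best score per run for one task (made-up values), with a success threshold of 0.90.
summary = summarize_runs([0.95, 0.85, 0.92, 0.91], threshold=0.90)
```

Reporting the success rate alongside the mean guards against a few very good runs masking many failed ones.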

Table 1: Benchmark Performance of MolDQN vs. Baseline Methods

| Benchmark Task (Guacamol) | MolDQN Score (Mean ± SD) | SMILES GA Score (Mean ± SD) | Best Score Threshold | MolDQN Success Rate |

|---|---|---|---|---|

| Celecoxib Rediscovery | 0.92 ± 0.05 | 0.78 ± 0.12 | 0.90 | 85% |

| Osimertinib MPO | 0.86 ± 0.07 | 0.72 ± 0.10 | 0.80 | 90% |

| Median Molecule 1 | 0.73 ± 0.09 | 0.65 ± 0.11 | 0.70 | 65% |

Table 2: Q-Network Training Hyperparameters

| Hyperparameter | Typical Value/Range | Description |

|---|---|---|

| Graph Hidden Dim | 128 | Dimensionality of atom embeddings. |

| FC Layer Sizes | [512, 256, 128] | Dimensions of post-fusion layers. |

| Learning Rate (α) | 1e-4 to 1e-3 | Adam optimizer learning rate. |

| Discount Factor (γ) | 0.90 to 0.99 | Future reward discount. |

| Replay Buffer Size | 1e5 to 1e6 | Max number of stored transitions. |

| Target Update (τ) | 0.01 to 0.05 | Soft update coefficient for target net. |

The Scientist's Toolkit: Essential Research Reagents & Materials

Table 3: Key Reagent Solutions for MolDQN Implementation

| Item Name / Tool | Function & Purpose in Experiment |

|---|---|

| RDKit (Chemoinformatics) | Core library for molecule manipulation, SMILES parsing, fingerprint generation, and property calculation (e.g., LogP). |

| PyTorch Geometric (PyG) | Provides pre-implemented Graph Neural Network layers (GCN, GIN, MPNN) crucial for building the graph encoder. |

| Guacamol Benchmark Suite | Provides standardized tasks and scoring functions to objectively evaluate molecular design algorithms. |

| ZINC250k Dataset | Curated set of ~250k purchasable molecules; common source for initial states and for pre-training property predictors. |

| DeepChem Library | May offer utilities for molecule featurization (e.g., ConvMolFeaturizer) and dataset splitting. |

| OpenAI Gym / Custom Env | Framework for defining the molecular modification environment, including state transition and reward logic. |

| Weights & Biases (W&B) | Platform for tracking Q-network training metrics, hyperparameters, and generated molecule structures. |

MolDQN Agent-Environment Interaction Workflow

Diagram Title: MolDQN Agent Training and Action Cycle

Within the thesis on "MolDQN deep Q-networks for de novo molecular design and optimization," the central challenge is formulating a scalar reward signal from competing, often conflicting, physicochemical objectives. This document provides application notes and protocols for constructing and tuning multi-objective reward functions for optimizing drug-like molecules, focusing on balancing potency (pIC50), aqueous solubility (LogS), and synthesizability (SAscore).

Core Quantitative Objectives & Benchmarks

The following table summarizes the target ranges and transformation functions used to normalize each objective into a component reward (r_obj) between 0 and 1.

Table 1: Multi-Objective Targets, Metrics, and Reward Transformations

| Objective | Primary Metric | Target Range | Reward Function (Typical) | Data Source / Validation |

|---|---|---|---|---|

| Potency | pIC50 (or pKi) | > 8.0 (High), > 6.0 (Acceptable) | r_pot = sigmoid( (pIC50 - 6.0) / 2 ) | Experimental binding assays; public sources like ChEMBL. |

| Solubility | Predicted LogS (log mol/L) | > -4.0 (Soluble) | r_sol = 1.0 if LogS > -4.0, else decreasing linearly to 0.0 at LogS = -6.0 | ESOL or SILICOS-IT models; measured solubility databases. |

| Synthesizability | SAscore (1-10) | < 4.5 (Easy to synthesize) | r_syn = 1.0 - (SAscore / 10) | RDKit implementation of Synthetic Accessibility score. |

| Composite Reward | Weighted sum of component rewards | Weights (wᵢ) sum to 1.0. Default: w₁=0.5, w₂=0.3, w₃=0.2 | R = w₁·r_pot + w₂·r_sol + w₃·r_syn | Tuned via ablation studies in MolDQN training. |
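The transformations in Table 1 can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function names are hypothetical, and the solubility penalty is assumed to ramp linearly from 1.0 at LogS = -4.0 down to 0.0 at LogS = -6.0.

```python
import math


def potency_reward(pic50: float) -> float:
    """r_pot = sigmoid((pIC50 - 6.0) / 2), as listed in Table 1."""
    return 1.0 / (1.0 + math.exp(-(pic50 - 6.0) / 2.0))


def solubility_reward(log_s: float) -> float:
    """1.0 above LogS = -4.0; ASSUMED linear ramp to 0.0 at LogS = -6.0."""
    if log_s > -4.0:
        return 1.0
    return max(0.0, (log_s + 6.0) / 2.0)


def synthesizability_reward(sa_score: float) -> float:
    """r_syn = 1.0 - SAscore / 10, as listed in Table 1."""
    return 1.0 - sa_score / 10.0


def composite_reward(pic50, log_s, sa_score, weights=(0.5, 0.3, 0.2)):
    """Weighted sum R = w1*r_pot + w2*r_sol + w3*r_syn with weights summing to 1."""
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1.0")
    w_pot, w_sol, w_syn = weights
    return (
        w_pot * potency_reward(pic50)
        + w_sol * solubility_reward(log_s)
        + w_syn * synthesizability_reward(sa_score)
    )
```

Note that with SAscore on its 1 to 10 scale, r_syn as defined spans 0.0 to 0.9, so the composite reward cannot quite reach 1.0 under this formula.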

Experimental Protocols

Protocol 3.1: Iterative Reward Function Tuning for MolDQN

Purpose: To empirically determine the optimal weighting scheme for a multi-objective reward function.

Materials: Pre-trained MolDQN agent, molecular starting scaffold, objective calculation scripts (RDKit, prediction models), training environment.

Procedure:

- Initialize: Set baseline weights (e.g., 0.5, 0.3, 0.2 for potency, solubility, synthesizability). Initialize MolDQN network.

- Training Cycle: For each weight combination in the search grid: a. Run MolDQN for 1000 episodes, each starting from the defined scaffold. b. At each modification step, compute the composite reward R = Σ wᵢ * rᵢ. c. Store the top 10 molecules generated per run based on R.

- Post-Run Analysis: a. For each top-10 set, calculate the Pareto front using the raw objective values (not rewards). b. Compute the hypervolume indicator relative to a fixed worst-case reference point (e.g., pIC50 = 5, LogS = -6, SAscore = 6). c. Select the weight set yielding the largest hypervolume.

- Validation: Execute a final, extended MolDQN run (5000 episodes) with the optimal weights. Evaluate the top 20 molecules with more rigorous (e.g., FEP, MD) solubility and potency predictions.
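Two pieces of the procedure above lend themselves to a short sketch: building the weight search grid and extracting the Pareto front from raw objective values. The helper names below are hypothetical, and each molecule is assumed to be summarized as a (pIC50, LogS, SAscore) tuple, where the first two are maximized and the third is minimized. The hypervolume computation itself is left to a dedicated library such as pymoo or pygmo.

```python
from itertools import product


def weight_grid(step=0.1):
    """All (w_pot, w_sol, w_syn) triples on a simplex grid that sum to 1."""
    n = round(1.0 / step)
    return [
        (i / n, j / n, (n - i - j) / n)
        for i, j in product(range(n + 1), repeat=2)
        if i + j <= n
    ]


def dominates(a, b):
    """True if a is no worse than b on every objective and better on one.

    Points are (pIC50, LogS, SAscore); SAscore is negated so that all
    three objectives are treated as 'higher is better'.
    """
    a_max = (a[0], a[1], -a[2])
    b_max = (b[0], b[1], -b[2])
    no_worse = all(x >= y for x, y in zip(a_max, b_max))
    better = any(x > y for x, y in zip(a_max, b_max))
    return no_worse and better


def pareto_front(points):
    """Keep only the points that no other point dominates."""
    return [p for p in points if not any(dominates(q, p) for q in points)]
```

A step of 0.1 yields 66 weight combinations, which at 1000 episodes each is a substantial compute budget; a coarser grid may be a reasonable first pass.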

Protocol 3.2: Objective-Specific Reward Shaping

Purpose: To implement non-linear transformations that guide learning more effectively than simple linear scaling.

Materials: Historical project data defining "success" thresholds, curve-fitting software.

Procedure for Potency Reward:

- Gather pIC50 data for known actives and inactives in the target class.

- Define a success threshold (e.g., pIC50 ≥ 7.0) and a minimum threshold (e.g., pIC50 ≥ 5.0).

- Fit a smooth, monotonic function (e.g., a sigmoid; a piecewise-linear ramp also works if differentiability at the thresholds is not required) where:

- r_pot ≈ 0.0 for pIC50 ≤ 5.0

- r_pot rises monotonically between 5.0 and 7.0

- r_pot ≈ 1.0 for pIC50 ≥ 7.0

- Implement this custom function within the reward calculation pipeline.
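As one possible realization of the potency shaping described above, the sketch below calibrates a sigmoid so that it passes through chosen tail values at the two thresholds, and offers a piecewise-linear ramp as an alternative. The function names and the tail value of 0.02 are illustrative assumptions, not values taken from project data.

```python
import math


def make_sigmoid_reward(lower=5.0, upper=7.0, tail=0.02):
    """Return r(x) with r(lower) = tail and r(upper) = 1 - tail."""
    midpoint = 0.5 * (lower + upper)
    # Solve 1 / (1 + exp(-k * (upper - midpoint))) = 1 - tail for k.
    steepness = math.log((1.0 - tail) / tail) / (upper - midpoint)

    def reward(pic50):
        return 1.0 / (1.0 + math.exp(-steepness * (pic50 - midpoint)))

    return reward


def ramp_reward(pic50, lower=5.0, upper=7.0):
    """Piecewise-linear alternative: exactly 0 below lower, 1 above upper."""
    return min(1.0, max(0.0, (pic50 - lower) / (upper - lower)))


r_pot = make_sigmoid_reward()
print(round(r_pot(5.0), 3), round(r_pot(6.0), 3), round(r_pot(7.0), 3))
```

The sigmoid never reaches exactly 0 or 1, so it keeps a small gradient of reward even outside the threshold window; the ramp is flat there, which can make early exploration from weak starting scaffolds less informative.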

Visualizing the Multi-Objective Optimization Framework

Title: MolDQN Multi-Objective Reward Feedback Loop

Title: Pareto Trade-off Between Key Molecular Objectives

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Computational Tools & Materials for Reward Function Development

| Item / Reagent | Supplier / Source | Primary Function in Experiment |

|---|---|---|

| RDKit | Open-Source Cheminformatics | Core library for molecule manipulation, SAscore calculation, and descriptor generation. |

| DeepChem | MIT/LF Project | Provides standardized molecular property prediction models (e.g., for LogS, pIC50). |

| MolDQN Framework | Custom Thesis Code | Deep Q-Network implementation for molecule optimization via fragment-based actions. |

| ChEMBL Database | EMBL-EBI | Public source of experimental bioactivity data (pIC50) for target proteins and reward function validation. |

| OpenChem | Open-Source (GitHub) | May provide reference implementations of deep learning models for molecular property prediction. |

| Pareto Front Library (pygmo, pymoo) | Open-Source | Computes multi-objective optimization fronts and hypervolume metrics for reward weight tuning. |

| Chemical Simulation Software (Schrödinger, OpenMM) | Commercial/Open | Used in Protocol 3.1, Step 4 for high-fidelity validation of predicted solubility and binding affinity. |

Within the broader thesis on MolDQN (Deep Q-Network) frameworks for de novo molecular design and optimization, the definition of the action space is the fundamental operational layer. It translates the agent's decisions into tangible, chemically valid molecular transformations. This document details the permissible chemical modifications—atom addition/deletion, bond addition/deletion/alteration—that constitute the action space for a reinforcement learning (RL) agent in molecule modification research, providing application notes and protocols for implementation.

Defining the Permissible Action Space

The action space must be discrete, finite, and chemically grounded to ensure the RL agent explores synthetically feasible chemical space. Based on current literature and cheminformatics toolkits (e.g., RDKit), the following core modifications are defined.

Table 1: Core Permissible Chemical Modifications

| Modification Type | Specific Action | Valence & Chemical Rule Constraints | Common Examples in Lead Optimization |

|---|---|---|---|

| Atom Addition | Add a single atom to a specified existing atom. | New atom valency must not be exceeded. Added atom type is typically from a restricted set (e.g., C, N, O, F, Cl, S). | Adding a methyl group (-CH3), hydroxyl (-OH), or fluorine atom. |

| Atom Deletion | Remove a terminal atom (and its connected bonds). | Atom must have only one bond (terminal). Cannot break ring systems or create radicals arbitrarily. | Removing a chlorine atom or a methoxy group. |

| Bond Addition | Add a bond between two existing non-bonded atoms. | Must respect maximum valence of both atoms. Cannot create 5-membered rings or smaller unless part of pre-defined scaffold. Typically limited to single, double, or triple bonds. | Forming a ring closure (macrocycle), or adding a double bond in a conjugated system. |

| Bond Deletion | Remove an existing bond. | Must not create disconnected fragments (in most implementations). Breaking a ring may be allowed if it results in a valid, connected chain. | Cleaving a rotatable single bond in a linker. |

| Bond Alteration | Change the bond order between two already-bonded atoms. | Must respect valence rules for both atoms (e.g., increasing bond order only if valency permits). Common changes: single→double, double→single. | Aromatic ring modification, or altering conjugation. |

Application Notes for MolDQN Integration

- State Representation: The molecular graph (or its fingerprint) is the state s_t.

- Action Formulation: Each combination of modification type, target atom/bond index, and possible new feature (e.g., atom type, bond order) defines a unique action a_t. Because validity depends on the current molecule, the set of available actions (and therefore its size) is state-dependent.

- Validity Check: An essential post-action step. The resulting molecule must pass sanitization checks (e.g., RDKit's SanitizeMol), ensuring proper valences, acceptable rings, and no hypervalency.

- Reward Shaping: The reward r_t is calculated based on the property change (e.g., QED, Synthetic Accessibility Score, binding affinity prediction) between the previous and new molecule.

Experimental Protocol: Implementing and Validating the Action Space

This protocol describes the setup for a MolDQN-style environment using the RDKit cheminformatics toolkit.

Protocol: Action Space Initialization and Step Execution

Materials: Python environment, RDKit, PyTorch (or TensorFlow), Gym-like environment framework.

Procedure:

- Define Baseline Molecule and Allowable Atoms/Bonds:

- Generate All Valid Actions for a Given State (Molecule):

- Execute an Action and Sanitize:

- Train MolDQN Agent (Outline):

- Initialize replay buffer, Q-network, target Q-network.

- For each episode, reset to a starting molecule.

- For each step t, select action a_t from valid actions using an ε-greedy policy.

- Execute the action using the step() function to get s_{t+1} and a validity flag.

- Compute reward r_t using property calculators.

- Store transition (s_t, a_t, r_t, s_{t+1}) in replay buffer.

- Sample minibatch and perform Q-network optimization via gradient descent on the Bellman loss.
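The first three steps of the procedure (allowable atoms and bonds, action enumeration, and a sanitized step) can be sketched with RDKit as follows. This is a minimal illustration covering atom addition only. The element subset, the helper names, and the convention of identifying an action by the canonical SMILES of the molecule it produces are all assumptions made for this example.

```python
from rdkit import Chem

# Illustrative subset of allowed elements mapped to their maximum valence.
ALLOWED_ATOMS = {"C": 4, "N": 3, "O": 2}
BOND_TYPES = {
    1: Chem.BondType.SINGLE,
    2: Chem.BondType.DOUBLE,
    3: Chem.BondType.TRIPLE,
}


def sanitized_smiles(rw_mol):
    """Return canonical SMILES if the edited molecule sanitizes, else None."""
    mol = rw_mol.GetMol()
    try:
        Chem.SanitizeMol(mol)
    except Exception:  # RDKit raises on valence or kekulization failures
        return None
    return Chem.MolToSmiles(mol)


def atom_addition_actions(smiles):
    """Enumerate products of adding one allowed atom to any atom with free valence."""
    mol = Chem.MolFromSmiles(smiles)
    if mol is None:
        raise ValueError(f"could not parse SMILES: {smiles!r}")
    products = set()
    for atom in mol.GetAtoms():
        free_valence = atom.GetNumImplicitHs()  # hydrogens that can be replaced
        for symbol, max_valence in ALLOWED_ATOMS.items():
            max_order = min(free_valence, max_valence, 3)
            for order in range(1, max_order + 1):
                rw_mol = Chem.RWMol(mol)  # editable copy of the current state
                new_idx = rw_mol.AddAtom(Chem.Atom(symbol))
                rw_mol.AddBond(atom.GetIdx(), new_idx, BOND_TYPES[order])
                product = sanitized_smiles(rw_mol)
                if product is not None:
                    products.add(product)
    return products


def step(smiles, action):
    """Apply an action if it is valid; otherwise keep the current state."""
    valid = action in atom_addition_actions(smiles)
    return (action if valid else smiles), valid
```

Bond addition, bond alteration, and deletions would follow the same pattern: copy the state into an RWMol, apply one edit, and keep the result only if sanitization succeeds.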

Visualizing the MolDQN Modification Workflow

Title: MolDQN Action Execution and Training Loop

The Scientist's Toolkit: Key Research Reagents & Solutions

Table 2: Essential Tools for MolDQN Action Space Research

| Item | Function/Description | Example/Provider |

|---|---|---|

| RDKit | Open-source cheminformatics toolkit used for molecule manipulation, sanitization, and fingerprint generation. Core for implementing the chemical action space. | RDKit Documentation |

| OpenAI Gym / Custom Environment | Provides the standardized RL framework (state, action, reward, step) for developing and benchmarking the molecular modification environment. | gym.Env or torchrl.envs |

| Deep Learning Framework | Library for building and training the Deep Q-Networks that parameterize the agent's policy. | PyTorch, TensorFlow, JAX |

| Property Prediction Models | Pre-trained or concurrent models used to calculate the reward signal (e.g., QED, SAscore, pChEMBL predictor). | molsets, chemprop, or custom models |

| Molecular Dataset | Curated sets of drug-like molecules for pre-training, benchmarking, and defining starting scaffolds. | ZINC, ChEMBL, GuacaMol benchmarks |

| High-Performance Computing (HPC) / GPU | Computational resources essential for training deep RL models over large chemical action spaces within a feasible time. | NVIDIA GPUs, Cloud compute (AWS, GCP) |

Within the MolDQN framework for de novo molecule generation and optimization, training stability is paramount for producing valid, high-scoring molecular structures. This document details the core protocols—Experience Replay, Target Networks, and Hyperparameter Tuning—necessary to mitigate correlations and divergence in deep Q-learning, specifically applied to the chemical action space of molecule modification.

Core Stabilization Components: Protocols & Application Notes

Experience Replay Buffer

Protocol ER-01: Implementation and Sampling

- Initialization: Allocate a fixed-capacity replay buffer D (e.g., capacity N = 1,000,000 transitions). A transition is defined as the tuple (s_t, a_t, r_t, s_{t+1}, terminal_flag), where the state s is a molecular graph representation, and action a is a defined chemical modification (e.g., add/remove a bond, change atom type).

- Storage: During agent exploration, each new transition is stored in D. Upon reaching capacity, overwrite the oldest transition.

- Minibatch Sampling: For each training step, sample a random minibatch of B transitions (e.g., B = 128) uniformly from D. This breaks the temporal correlations between consecutive transitions collected within an episode of molecule construction.
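Protocol ER-01 maps onto a small fixed-capacity ring buffer. The sketch below is one way to write it in plain Python; the class and field names are hypothetical, and states are treated as opaque objects (for example SMILES strings or fingerprints).

```python
import random
from collections import namedtuple

Transition = namedtuple(
    "Transition", ["state", "action", "reward", "next_state", "terminal"]
)


class ReplayBuffer:
    """Fixed-capacity buffer that overwrites its oldest transition when full."""

    def __init__(self, capacity=1_000_000, seed=None):
        self.capacity = capacity
        self._storage = []
        self._next = 0  # index of the slot to write next
        self._rng = random.Random(seed)

    def push(self, state, action, reward, next_state, terminal):
        transition = Transition(state, action, reward, next_state, terminal)
        if len(self._storage) < self.capacity:
            self._storage.append(transition)
        else:
            self._storage[self._next] = transition  # overwrite the oldest
        self._next = (self._next + 1) % self.capacity

    def sample(self, batch_size=128):
        """Draw a uniform random minibatch without replacement."""
        return self._rng.sample(self._storage, batch_size)

    def __len__(self):
        return len(self._storage)
```

A list with a write index is used rather than a deque so that random access during sampling stays constant-time at large capacities.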

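Application Note: For MolDQN, prioritized experience replay can be used to replay informative transitions more often, such as those that lead to successful synthesis paths or large positive rewards. The probability of sampling transition i is P(i) = p_i^α / Σ_k p_k^α, where p_i is the priority (e.g., the absolute TD error |δ_i| plus a small positive constant) and the exponent α controls the degree of prioritization (α = 0 recovers uniform sampling). This α is unrelated to the learning rate α.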
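The sampling rule in this note can be checked numerically with a short sketch. The function name, the exponent value of 0.6, and the small constant added to each priority are illustrative assumptions.

```python
def sampling_probabilities(td_errors, alpha=0.6, eps=1e-6):
    """P(i) = p_i**alpha / sum_k p_k**alpha with p_i = |delta_i| + eps."""
    priorities = [(abs(delta) + eps) ** alpha for delta in td_errors]
    total = sum(priorities)
    return [p / total for p in priorities]


probs = sampling_probabilities([0.1, 1.0, 2.0])
uniform = sampling_probabilities([0.1, 1.0, 2.0], alpha=0.0)
print(probs, uniform)
```

Because prioritized sampling changes the distribution of training data, implementations usually pair it with importance-sampling weights on the loss to correct the resulting bias.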

Target Network

Protocol TN-01: Periodic Update Schedule

- Dual Network Instantiation: Initialize two identical Q-networks: the online network Q(s,a;θ) and the target network Q(s,a;θ⁻).

- Q-Target Calculation: Compute the target for the Q-learning update using the target network: y = r + γ * max_{a'} Q(s', a'; θ⁻), where γ is the discount factor (typically 0.9 for molecule optimization). For transitions whose terminal_flag is set, drop the bootstrap term so that y = r.

- Periodic Hard Update: Every C training steps (e.g., C = 1000), copy the parameters of the online network to the target network (θ⁻ ← θ).
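The target calculation and hard update in Protocol TN-01 reduce to two small functions. The sketch below is framework-agnostic: parameters are represented as a plain dictionary and the helper names are hypothetical. In PyTorch or TensorFlow the same copy would be done with the framework's own state-loading mechanism.

```python
import copy


def td_target(reward, next_q_values, gamma=0.9, terminal=False):
    """y = r + gamma * max_a' Q(s', a'; theta_minus), or y = r at terminal states.

    next_q_values holds the target network's Q-values for the valid actions
    in s'. An empty list (no valid actions) is treated as terminal here,
    which is an assumption of this sketch.
    """
    if terminal or not next_q_values:
        return reward
    return reward + gamma * max(next_q_values)


def maybe_sync_target(step, online_params, target_params, update_every=1000):
    """Hard update: return a copy of the online parameters every C steps."""
    if step % update_every == 0:
        return copy.deepcopy(online_params)
    return target_params


print(td_target(1.0, [0.5, 2.0]))
```

Returning a deep copy keeps the target parameters frozen between updates even if the online parameters are later modified in place.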