From Bytes to Breakthroughs: Demystifying AI-Driven de novo Drug Design for Modern Researchers


Abstract

This article provides a comprehensive overview of artificial intelligence (AI) principles in de novo drug design, tailored for researchers, scientists, and development professionals. It begins by establishing the fundamental concepts and motivation behind AI-driven molecular generation, contrasting it with traditional methods. We then detail core methodological approaches—including generative models, reinforcement learning, and genetic algorithms—and their practical application in hit identification and lead optimization. The guide addresses common challenges such as synthesizability, novelty, and objective function design, offering optimization strategies. Finally, we present rigorous validation frameworks and comparative analyses of state-of-the-art tools, culminating in a synthesis of current capabilities, persistent gaps, and the transformative future implications for accelerating biomedical discovery and clinical pipeline development.

AI in Drug Discovery: The Paradigm Shift from Screening to Generative Design

De novo drug design is a computational strategy for generating novel molecular structures with desired pharmacological properties from scratch, without relying on pre-existing templates. Framed within a broader thesis on AI-driven principles, this whitepaper details the core paradigms, historical evolution, and technical methodologies that define the field.

Historical Context and Evolution

The history of de novo drug design is marked by a transition from manual, intuition-driven discovery to increasingly automated, algorithm-driven generation.

Table 1: Historical Milestones in De Novo Drug Design

| Era | Period | Key Paradigm | Representative Technology | Limitation |
|---|---|---|---|---|
| Conceptual | Late 1980s-early 1990s | Structure-based design, molecular building blocks. | LUDI, GROW. | Limited computational power, simplistic scoring. |
| Evolutionary | 1990s-2000s | Genetic algorithms, fragment linking/assembly. | LEGEND, SPROUT. | Chemical novelty but poor synthesizability. |
| AI-Driven | 2010s-Present | Deep generative models, reinforcement learning. | Variational Autoencoders (VAEs), Generative Adversarial Networks (GANs), Reinforcement Learning (RL). | Early challenges in objective function design, model interpretability. |
| Generative AI | 2020s-Present | Transformer architectures, geometric deep learning, diffusion models. | Pocket2Mol, 3D pocket-conditioned diffusion models; DiffDock (diffusion-based docking). | Ensuring that generated lead-like molecules are synthetically accessible, geometrically realistic in 3D, and diverse. |

Core Technical Principles

The Generative Cycle

The core workflow involves an iterative loop: (1) Generation of candidate molecular structures, (2) Evaluation via predictive models (e.g., for binding affinity, ADMET), and (3) Optimization using feedback to refine the generative model.
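The generate-evaluate-optimize loop can be illustrated with a deliberately tiny sketch. It assumes a hypothetical fragment vocabulary, treats a "molecule" as a tuple of fragment names, substitutes a toy scoring rule for real predictive models, and uses a simple cross-entropy-method update in place of the RL or fine-tuning a production system would use.

```python
import random
from collections import Counter

# Hypothetical fragment vocabulary; a "molecule" here is only a tuple of fragment names.
VOCAB = ["benzene", "pyridine", "amide", "methyl", "hydroxyl"]


def score(candidate):
    """Toy stand-in for predictive models (affinity, ADMET): rewards pyridine and amide."""
    return candidate.count("pyridine") + 0.5 * candidate.count("amide")


def run_cycle(n_rounds=10, batch=200, length=4, elite_frac=0.1, lr=0.5, seed=0):
    rng = random.Random(seed)
    probs = {frag: 1.0 / len(VOCAB) for frag in VOCAB}  # start from a uniform generator
    for _ in range(n_rounds):
        # (1) Generation: sample a batch of candidates from the current generator.
        weights = [probs[f] for f in VOCAB]
        candidates = [tuple(rng.choices(VOCAB, weights=weights, k=length)) for _ in range(batch)]
        # (2) Evaluation: score every candidate and keep the top fraction ("elite").
        elite = sorted(candidates, key=score, reverse=True)[: max(1, int(elite_frac * batch))]
        # (3) Optimization: shift fragment probabilities toward those seen in the elite set.
        counts = Counter(frag for mol in elite for frag in mol)
        total = sum(counts.values())
        probs = {f: (1 - lr) * probs[f] + lr * counts[f] / total for f in VOCAB}
    return probs


if __name__ == "__main__":
    for frag, p in sorted(run_cycle().items(), key=lambda kv: -kv[1]):
        print(f"{frag:10s} {p:.3f}")
```

After a few rounds the generator concentrates on the fragments the scoring function favors, which is the essence of feedback-driven optimization; it also shows why a poorly specified score is reproduced faithfully by the generator.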

Molecular Representation

The choice of molecular representation directly influences the generative model's capabilities.

Table 2: Molecular Representations in AI-Driven De Novo Design

| Representation | Format | AI Model Suitability | Advantage | Disadvantage |
|---|---|---|---|---|
| String-Based | SMILES, SELFIES | RNN, Transformer | Simple, sequential, large corpora available. | SMILES generation can produce invalid strings (SELFIES is designed to avoid this); 1D representation loses spatial data. |
| Graph-Based | Molecular Graph (Atoms as nodes, bonds as edges) | Graph Neural Network (GNN) | Naturally represents topology, invariant to atom ordering. | Complex generation requires autoregressive or one-shot methods. |
| 3D Coordinate | Atomic Point Cloud / 3D Grid | Geometric GNN, Diffusion Model | Encodes steric and electrostatic complementarity to target. | Computationally intensive; requires defined binding pocket. |

Optimization Strategies

- Goal-Directed Generation: Models are trained to directly optimize a multi-parametric objective function (e.g., QED, SA, binding score).

- Reinforcement Learning (RL): The generative model acts as an agent, receiving rewards from a scoring function and adjusting its policy (generation rules) to maximize reward.

- Bayesian Optimization: Used in latent space models to navigate towards regions of high desirability.

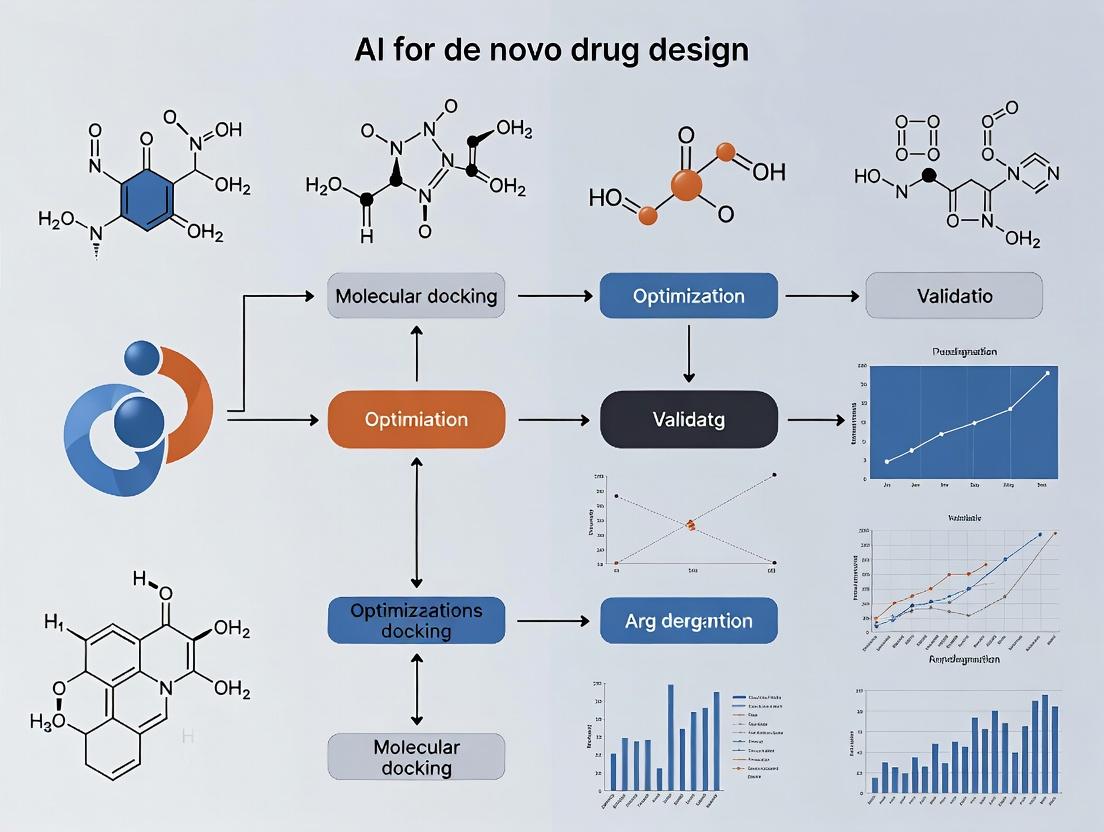

De Novo Design AI Optimization Workflow

Experimental Protocol: A Standard In Silico Validation

This protocol outlines a standard validation experiment for an AI-based de novo design model targeting a specific protein.

Aim: To generate novel, synthetically accessible inhibitors for Target Protein X.

Methodology

Data Curation:

- Source: Public databases (PDBbind, BindingDB).

- Content: Crystal structures of Target X with ligands (for structure-based models) or known active/inactive SMILES strings (for ligand-based models).

- Preprocessing: Ligands are extracted from the complexes, and proteins and ligands are protonated. Structures are aligned to a common reference frame. Pockets are defined (e.g., using fpocket).

Model Training & Configuration:

- Model: A 3D pocket-conditioned diffusion model (e.g., TargetDiff or DiffSBDD). Autoregressive 3D generators such as Pocket2Mol are a common alternative.

- Conditioning: The model is conditioned on the 3D atomic point cloud of the defined binding pocket.

- Training: Model is trained to denoise atomic coordinates and types within the pocket context.

Candidate Generation:

- 10,000 unique molecules are generated in silico by sampling from the trained model.

In Silico Evaluation Funnel:

- Step 1 - Syntactic Filter: Remove molecules with invalid valences or unstable rings.

- Step 2 - Drug-Likeness: Filter by Quantitative Estimate of Drug-likeness (QED > 0.6) and Synthetic Accessibility (SAscore < 4.5).

- Step 3 - Docking: Remaining molecules are docked into Target X's pocket using Glide SP or AutoDock Vina. Top 500 by docking score are retained.

- Step 4 - MM/GBSA: Re-score the top 100 docked poses using Molecular Mechanics/Generalized Born Surface Area (MM/GBSA), a more physics-based, though still approximate, estimate of binding free energy than docking scores.

- Step 5 - ADMET Prediction: Predict key ADMET properties (e.g., CYP inhibition, hERG liability, Caco-2 permeability) using models like ADMETlab 2.0.
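The funnel above can be sketched as a sequence of filters and ranked cuts. This is a minimal illustration assuming that validity flags, QED, SAscore, docking, and MM/GBSA values have already been computed by the respective tools (RDKit, Glide or Vina, an MD package); the `Candidate` fields and the `funnel` helper are hypothetical names, and the ADMET step is left as a comment.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Candidate:
    name: str
    valid: bool                      # result of a chemistry sanity check (valence, ring strain)
    qed: float                       # 0..1, higher is more drug-like
    sa_score: float                  # 1 (easy) .. 10 (hard)
    docking: float                   # docking score, more negative = stronger predicted binding
    mmgbsa: Optional[float] = None   # MM/GBSA estimate in kcal/mol, more negative = better


def funnel(cands: List[Candidate], qed_min=0.6, sa_max=4.5, top_dock=500, top_mmgbsa=100
           ) -> Tuple[List[Candidate], Dict[str, int]]:
    valid = [c for c in cands if c.valid]                                          # Step 1
    drug_like = [c for c in valid if c.qed > qed_min and c.sa_score < sa_max]      # Step 2
    docked = sorted(drug_like, key=lambda c: c.docking)[:top_dock]                 # Step 3
    # Step 4: only the best-docked subset is re-scored; MM/GBSA values assumed precomputed.
    rescored = sorted(docked[:top_mmgbsa],
                      key=lambda c: c.mmgbsa if c.mmgbsa is not None else float("inf"))
    counts = {"generated": len(cands), "valid": len(valid), "drug_like": len(drug_like),
              "docked_kept": len(docked), "mmgbsa_ranked": len(rescored)}
    return rescored, counts  # Step 5 (ADMET prediction) would be applied to `rescored`.
```

Returning per-stage counts alongside the survivors makes attrition at each step visible, which helps diagnose whether the generator or a single filter is the bottleneck.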

Output: A ranked list of 20-50 novel candidate molecules with associated scores and predicted properties for in vitro validation.

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Tools for AI-Driven De Novo Drug Design Research

| Tool Category | Specific Solution / Software | Primary Function | Key Application in Workflow |
|---|---|---|---|
| Generative AI Platform | PyTorch, TensorFlow, JAX | Deep learning framework for building and training custom generative models. | Model development and training. |
| Chemistry & Generation | RDKit, DeepChem | Open-source cheminformatics toolkit for molecule manipulation, descriptor calculation, and model integration. | SMILES parsing, fingerprinting, filter application, basic property calculation. |
| Docking & Scoring | AutoDock Vina, Glide (Schrödinger), GNINA | Predicts the binding pose and affinity of a generated molecule to a protein target. | Primary in silico validation of generated molecules' target engagement. |
| Free Energy Calculation | AMBER, GROMACS, OpenMM | Molecular dynamics simulation and more accurate (MM/PBSA, MM/GBSA) binding free energy estimation. | Refined scoring and stability assessment of top candidates. |
| ADMET Prediction | ADMETlab 2.0, pkCSM, StarDrop | Predicts pharmacokinetic, toxicity, and metabolic profiles from molecular structure. | Early-stage elimination of candidates with poor predicted developability. |
| Synthesis Planning | AiZynthFinder, ASKCOS, RetroSyn | Retrosynthetic analysis tool to evaluate and plan the synthetic route for a generated molecule. | Assesses and improves the synthetic accessibility of AI-generated designs. |

Thesis Context: AI Principles and Historical Trajectory

Quantitative Benchmarking

Table 4: Benchmark Performance of Modern De Novo Design Methods (Hypothetical Summary)

| Model (Year) | Generation Method | Target | Key Metric: Vina Score (kcal/mol; lower is better) | Key Metric: Novelty (% with Tanimoto < 0.3 to nearest training molecule) | Key Metric: Synthetic Accessibility (SAscore; lower is easier) |
|---|---|---|---|---|---|
| Ligand-Based VAE (2018) | SMILES VAE + RL | DRD2 | -9.2 ± 0.5 | 85% | 3.8 ± 0.6 |
| Graph-based (2020) | GNN + Policy Gradient | JAK2 | -10.5 ± 0.7 | 92% | 3.5 ± 0.7 |
| 3D Diffusion (2023) | Pocket-Conditioned Diffusion | SARS-CoV-2 Mpro | -11.8 ± 0.4 | 99% | 2.9 ± 0.4 |

Note: Data is illustrative, compiled from recent literature trends. Actual values vary by study setup.

De novo drug design has evolved from a conceptual framework to a practical, AI-driven engine for molecular invention. Its core principles—generation conditioned on structural or property constraints, followed by iterative multi-parametric optimization—are now powered by deep generative models. Within the context of AI principles research, the field is moving towards integrated, "closing-the-loop" systems that directly connect generative AI with automated synthesis and biological testing, promising to accelerate the discovery of novel therapeutic agents.

The traditional drug discovery pipeline is a monument to high expenditure and high failure. Despite advances in genomics and combinatorial chemistry, the fundamental process remains slow, costly, and inefficient. The core thesis framing this discussion is that AI, particularly for de novo drug design, is not merely a tool for acceleration but a foundational shift in molecular discovery principles. It moves the paradigm from iterative screening to predictive generation and multi-parameter optimization.

The Quantitative Burden: A Data-Driven Case

Recent analyses underscore the unsustainable economics of traditional discovery. The following table summarizes key performance indicators.

Table 1: Traditional vs. AI-Augmented Drug Discovery Metrics

| Metric | Traditional Discovery (Avg.) | AI-Augmented Discovery (Projected/Reported) | Data Source (2023-2024) |
|---|---|---|---|
| R&D Cost per Approved Drug | ~$2.3B (Incl. failures) | Target: 30-50% reduction | (Evaluate Pharma, 2023; BCG Analysis) |
| Timeline from Target to Preclinical Candidate | 3-6 years | 12-24 months | (Nature Reviews Drug Discovery, 2024) |
| Clinical Trial Success Rate (Phase I to Approval) | ~7.9% | Early data suggests potential to double | (Biostatistics, 2024) |
| Number of Compounds Screened per Approved Drug | 10,000+ | Designed in silico, < 1000 synthesized | (ACS Medicinal Chemistry Letters, 2023) |
| Primary Cause of Preclinical Failure | Poor PK/PD & Toxicity (~60%) | AI models predict ADMET properties prior to synthesis | (Journal of Chemical Information and Modeling, 2024) |

Core AI Methodologies: From Prediction to Generation

Predictive ADMET & Target Affinity Modeling

Experimental Protocol (In Silico Prediction):

- Data Curation: Assemble a structured database of molecules with experimentally determined properties (e.g., solubility, hepatic microsomal stability, hERG inhibition).

- Featurization: Convert molecular structures into numerical descriptors (e.g., ECFP fingerprints, molecular weight, logP) or graph representations.

- Model Training: Employ supervised learning algorithms (e.g., Gradient Boosting Machines, Graph Neural Networks) to correlate features with experimental outcomes.

- Validation: Use temporal split or scaffold split validation to assess model generalizability to novel chemical space.

- Prospective Screening: Apply the trained model to filter virtual compound libraries, prioritizing molecules with favorable predicted properties for synthesis.
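The temporal split mentioned in the validation step can be sketched in a few lines. This assumes each record carries a hypothetical `assay_date` field; the model is trained on older measurements and tested on newer ones, mimicking prospective use. A scaffold split is the usual complementary check.

```python
from datetime import date


def temporal_split(records, cutoff):
    """Train on measurements dated before `cutoff`; test on those dated on or after it."""
    train = [r for r in records if r["assay_date"] < cutoff]
    test = [r for r in records if r["assay_date"] >= cutoff]
    return train, test


def cutoff_for_fraction(records, train_frac=0.8):
    """Pick the cutoff date so that roughly `train_frac` of records fall before it."""
    dates = sorted(r["assay_date"] for r in records)
    return dates[min(int(train_frac * len(dates)), len(dates) - 1)]


if __name__ == "__main__":
    data = [{"id": i, "assay_date": date(2015 + i, 1, 1)} for i in range(5)]
    tr, te = temporal_split(data, cutoff_for_fraction(data))
    print(len(tr), len(te))
```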

De Novo Molecular Design with Generative AI

Experimental Protocol (Reinforcement Learning-Based Design):

- Agent Definition: The AI agent (e.g., a Recurrent Neural Network or Transformer) acts as a "generator" of molecular strings (SMILES).

- Environment & Reward: The "environment" is defined by multiple scoring functions (e.g., predicted target affinity, synthetic accessibility, similarity to known actives). The agent receives a composite reward signal.

- Training Loop: a. The agent generates a batch of molecules. b. Each molecule is evaluated by the reward functions. c. The agent's parameters are updated via policy gradient methods to maximize expected reward.

- Output: The optimized agent produces novel molecules whose predicted properties match the desired multi-property profile, including synthetic accessibility when it is part of the reward.
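The training loop above can be illustrated with REINFORCE on a toy policy. This is a sketch under strong simplifying assumptions: the "agent" is a single softmax over a hypothetical token set applied independently at each position (not an RNN or Transformer), and the reward is a toy rule standing in for the composite scoring functions. It does show the core update: the gradient of the log-probability of each sampled token, weighted by the baseline-subtracted reward.

```python
import math
import random

TOKENS = ["C", "N", "O", "c1ccccc1"]  # hypothetical SMILES-like tokens


def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    s = sum(exps)
    return [e / s for e in exps]


def reward(seq):
    # Toy composite reward: favors nitrogen, penalizes oxygen.
    return seq.count("N") - 0.5 * seq.count("O")


def reinforce(steps=200, batch=32, length=5, lr=0.1, seed=0):
    rng = random.Random(seed)
    theta = [0.0] * len(TOKENS)  # policy logits, shared across positions
    for _ in range(steps):
        probs = softmax(theta)
        # (a) Generate a batch of token sequences from the current policy.
        samples = []
        for _ in range(batch):
            idx = rng.choices(range(len(TOKENS)), weights=probs, k=length)
            # (b) Score each sequence with the reward function.
            samples.append((idx, reward([TOKENS[i] for i in idx])))
        baseline = sum(r for _, r in samples) / batch  # mean reward reduces gradient variance
        # (c) Policy-gradient step: grad log softmax(theta)[i] = onehot(i) - probs.
        grad = [0.0] * len(TOKENS)
        for idx, r in samples:
            adv = r - baseline
            for i in idx:
                for k in range(len(TOKENS)):
                    grad[k] += adv * ((1.0 if k == i else 0.0) - probs[k])
        theta = [t + lr * g / batch for t, g in zip(theta, grad)]
    return softmax(theta)


if __name__ == "__main__":
    print(dict(zip(TOKENS, (round(p, 3) for p in reinforce()))))
```

In a real system the same update is applied to the parameters of a sequence model through automatic differentiation, and a prior-likelihood or KL term is commonly added to keep the agent from drifting into chemically unreasonable strings.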

Diagram Title: Reinforcement Learning Cycle for De Novo Drug Design

Case Study: AI-Integrated Workflow for Kinase Inhibitor Discovery

The following diagram illustrates a complete, iterative AI-driven workflow, contrasting with linear traditional steps.

Diagram Title: Iterative AI-Driven Drug Discovery Workflow

The Scientist's Toolkit: Key Research Reagents & Solutions

Table 2: Essential Reagents for AI-Guided Experimental Validation

| Item | Function in AI-Driven Workflow | Example Vendor/Product |
|---|---|---|
| Recombinant Human Target Protein | Essential for in vitro binding (SPR, ITC) and enzymatic assays to validate AI-predicted affinities. | Sino Biological, R&D Systems |
| AlphaFold2 Protein Structure Prediction | Provides high-confidence 3D structural models for targets lacking crystal structures, enabling structure-based AI design. | AlphaFold Protein Structure Database (EMBL-EBI), ColabFold |
| High-Throughput Screening Assay Kits | Validate AI-prioritized compound libraries against biological activity (e.g., kinase activity, cell viability). | Promega, Cisbio |
| LC-MS/MS for ADMET Profiling | Generates high-quality in vitro PK/PD data (e.g., microsomal stability, permeability) to ground-truth and refine AI models. | Agilent, Waters |
| Cryo-EM Services | Determine high-resolution structures of lead compounds bound to their target, providing critical feedback for next-generation AI design cycles. | Thermo Fisher Scientific, specialized CROs |
| Chemical Synthesis Services (CRO) | Rapid, parallel synthesis of AI-designed compounds for biological testing, bridging digital design and physical matter. | WuXi AppTec, Sigma-Aldrich Custom Synthesis |

The pursuit of novel therapeutic molecules is a cornerstone of pharmaceutical research, traditionally characterized by high costs, lengthy timelines, and high attrition rates. De novo drug design, the computational generation of novel molecular structures with desired properties, represents a paradigm shift. This whitepaper frames three key AI paradigms (Generative AI, Machine Learning (ML), and Molecular Representations) within the thesis that their integrated application is fundamental to modern, principled research in de novo drug design. These technologies enable the systematic exploration of chemical space, which is commonly estimated to contain on the order of 10⁶⁰ drug-like molecules, far beyond the capacity of traditional screening.

Foundational AI Paradigms

Machine Learning for Predictive Modeling

ML forms the quantitative backbone, learning from existing data to predict the properties of unseen molecules. Supervised learning models map molecular representations to biological activities (e.g., IC₅₀) or physicochemical properties (e.g., solubility, LogP).

- Common Algorithms: Random Forests, Gradient Boosting Machines (GBM), and deep neural networks (DNNs).

- Primary Application: Constructing Quantitative Structure-Activity Relationship (QSAR) models to virtually screen and prioritize generated molecules.

Generative AI for Molecular Invention

Generative AI moves beyond prediction to creation. It learns the underlying probability distribution of known chemical structures and/or their target-binding complexes to propose novel, valid, and optimized molecules.

- Key Architectures:

- Variational Autoencoders (VAEs): Encode molecules into a continuous latent space where interpolation and sampling yield novel structures.

- Generative Adversarial Networks (GANs): A generator creates molecules while a discriminator critiques them, driving iterative improvement.

- Autoregressive Models (e.g., Transformers): Generate molecular sequences (like SMILES) token-by-token, capturing long-range dependencies.

- Flow-Based Models: Learn invertible transformations between the data distribution and a simple base distribution, enabling exact likelihood calculation.

Molecular Representations: The Data Language

The choice of representation dictates what patterns AI models can learn. Three primary paradigms dominate drug design.

1D: Simplified Molecular-Input Line-Entry System (SMILES). A string notation representing a molecule's 2D structure as a sequence of atoms and bonds. It is compact and easy to use with sequence-based models (RNNs, Transformers) but can suffer from syntactic invalidity and lack of explicit spatial information. Example: the serotonin molecule is represented as C1=CC2=C(C=C1O)C(=CN2)CCN.

2D: Molecular Graphs. A graph G(V, E) where atoms are nodes (V) and bonds are edges (E). This representation explicitly encodes connectivity and is naturally processed by Graph Neural Networks (GNNs), which learn through message-passing between connected atoms.

3D: Geometric Representations. Captures the spatial coordinates of atoms (conformation), which are critical for modeling molecular interactions, docking, and binding affinity. Models include E(3)-equivariant neural networks and geometric graph networks, whose outputs are equivariant (for vector quantities such as coordinates or forces) or invariant (for scalar quantities such as energies) under rotations and translations.
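A quick way to see why interatomic distances are a natural invariant feature for 3D models: rotating and translating a conformer changes every raw coordinate but leaves the pairwise distance matrix unchanged. The three-atom coordinates below are arbitrary toy values, not a real molecule.

```python
import math


def pairwise_distances(coords):
    """Full interatomic distance matrix for a list of (x, y, z) tuples."""
    return [[math.dist(a, b) for b in coords] for a in coords]


def rotate_z(coords, angle):
    """Rigid rotation about the z-axis by `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    return [(c * x - s * y, s * x + c * y, z) for x, y, z in coords]


def translate(coords, shift):
    """Rigid translation by the vector `shift`."""
    return [tuple(p + d for p, d in zip(pt, shift)) for pt in coords]


if __name__ == "__main__":
    conf = [(0.0, 0.0, 0.0), (1.5, 0.0, 0.0), (2.0, 1.4, 0.3)]
    moved = translate(rotate_z(conf, 0.7), (1.0, -2.0, 3.0))
    print(moved[1], pairwise_distances(moved)[0][1])
```

A model that consumes raw coordinates would see the moved conformer as a different input; one built on distances (or on equivariant layers) treats both identically.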

Table 1: Comparative Analysis of Molecular Representations

| Representation | Format | Key AI Model | Advantages | Limitations |
|---|---|---|---|---|
| SMILES (1D) | String Sequence | RNN, Transformer | Simple, compact, vast existing datasets. | Non-unique (one molecule, many SMILES unless canonicalized), syntactic invalidity on generation, connectivity only implicit in the string. |
| Molecular Graph (2D) | Graph (Nodes, Edges) | Graph Neural Network (GNN) | Explicitly encodes structure and connectivity, invariant to SMILES permutation. | Does not inherently encode 3D conformation or chirality. |
| 3D Geometric | Coordinates + Features | Equivariant Network, GNN | Directly models quantum-chemical and steric interactions, essential for binding. | Computationally intensive, requires conformation generation or data. |

Experimental Protocols & Workflows

Protocol 1: Benchmarking a GNN for Property Prediction

Objective: Train and validate a GNN model to predict molecular properties (e.g., solubility) from 2D graphs.

- Dataset Curation: Use a standard benchmark like ESOL (water solubility) or FreeSolv (hydration free energy). Split data (e.g., 80/10/10) into training, validation, and test sets using scaffold splitting to assess generalization.

- Graph Featurization: For each molecule, generate a graph where nodes (atoms) are featurized with atomic number, degree, hybridization, etc. Edges (bonds) are featurized with type (single, double, etc.) and conjugation.

- Model Training: Implement a Message-Passing Neural Network (MPNN). The model performs:

- Message Passing (K steps): Aggregate features from neighboring nodes.

- Readout: Pool updated node features into a global graph representation.

- Prediction: Pass the graph vector through fully connected layers to produce a scalar prediction.

- Evaluation: Use Root Mean Squared Error (RMSE) and Mean Absolute Error (MAE) on the held-out test set. Compare against baseline models (Random Forest on Morgan fingerprints).
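The three MPNN stages (message passing, readout, prediction) can be sketched without a deep learning library. This is a forward pass only: the weight matrices are hypothetical fixed values rather than trained parameters, node features are a toy `[is_C, is_O, degree]` encoding, and the graph is the heavy-atom skeleton of ethanol. Libraries such as PyTorch Geometric or DGL provide trainable versions of the same operations.

```python
def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def relu(v):
    return [max(0.0, a) for a in v]


def mpnn_predict(node_feats, edges, W_self, W_msg, w_out, k_steps=2):
    n = len(node_feats)
    neighbors = {i: [] for i in range(n)}
    for a, b in edges:  # undirected bonds
        neighbors[a].append(b)
        neighbors[b].append(a)
    h = [list(f) for f in node_feats]
    for _ in range(k_steps):
        new_h = []
        for v in range(n):
            # Message passing: sum neighbor states (an order-independent aggregation).
            msg = [sum(h[u][d] for u in neighbors[v]) for d in range(len(h[v]))]
            update = [a + b for a, b in zip(matvec(W_self, h[v]), matvec(W_msg, msg))]
            new_h.append(relu(update))
        h = new_h
    # Readout: sum-pool node states into a single graph vector.
    graph_vec = [sum(h[v][d] for v in range(n)) for d in range(len(h[0]))]
    # Prediction head: one linear layer producing a scalar (e.g., solubility).
    return sum(w * g for w, g in zip(w_out, graph_vec))


W_SELF = [[0.5, 0.0, 0.0], [0.0, 0.5, 0.0], [0.0, 0.0, 0.1]]
W_MSG = [[0.2, 0.1, 0.0], [0.1, 0.2, 0.0], [0.0, 0.0, 0.1]]
W_OUT = [1.0, 2.0, 0.5]

if __name__ == "__main__":
    # Ethanol heavy atoms C-C-O with features [is_C, is_O, degree].
    print(mpnn_predict([[1, 0, 1], [1, 0, 2], [0, 1, 1]], [(0, 1), (1, 2)], W_SELF, W_MSG, W_OUT))
```

Because both the neighbor aggregation and the readout are sums, renumbering the atoms does not change the prediction, which is the permutation invariance that motivates graph representations.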

Diagram 1: Workflow for GNN-based property prediction.

Protocol 2: De Novo Molecule Generation with a Conditional VAE

Objective: Generate novel molecules optimized for high predicted activity against a target and favorable drug-likeness.

- Model Architecture: Build a Conditional VAE (CVAE). The encoder (Q) maps a SMILES string to a latent vector z, conditioned on a property vector c (e.g., target activity, LogP). The decoder (P) reconstructs the SMILES from z and c.

- Training: Train on a dataset (e.g., ChEMBL) with associated properties. The loss function combines reconstruction loss (cross-entropy) and the Kullback–Leibler divergence (KL) loss to regularize the latent space.

- Latent Space Navigation: Sample latent vectors z from the prior, N(0, I), and decode them together with the desired property vector c_target to generate novel SMILES.

- Validation & Filtering: Pass generated SMILES through a series of filters: chemical validity (RDKit), synthetic accessibility (SAscore), and a pre-trained activity predictor. Select top candidates for in silico docking or synthesis.
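The CVAE objective in this protocol combines a reconstruction cross-entropy with a KL term; for a diagonal Gaussian encoder, the KL divergence to the standard normal prior has a closed form. The sketch below computes these pieces in plain Python. The function names, the `beta` weight, and the concatenation of z with c as the decoder input are illustrative assumptions; a real implementation would compute the same quantities on tensors in PyTorch or JAX so they can be differentiated.

```python
import math
import random


def reparameterize(mu, log_var, rng):
    # z = mu + sigma * eps, eps ~ N(0, 1); in an autodiff framework this keeps sampling differentiable.
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    # Closed-form KL( N(mu, diag(exp(log_var))) || N(0, I) ).
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, log_var))


def reconstruction_ce(token_probs, target_ids):
    # Cross-entropy between the decoder's per-position token distributions and the true tokens.
    return -sum(math.log(p[t]) for p, t in zip(token_probs, target_ids))


def decoder_input(z, c):
    # Conditioning by concatenating the latent vector with the property vector.
    return list(z) + list(c)


def cvae_loss(token_probs, target_ids, mu, log_var, beta=1.0):
    return reconstruction_ce(token_probs, target_ids) + beta * kl_to_standard_normal(mu, log_var)


if __name__ == "__main__":
    rng = random.Random(0)
    z = reparameterize([0.2, -0.1], [-1.0, -1.0], rng)
    print(decoder_input(z, [1.0]), cvae_loss([[0.9, 0.1]], [0], [0.2, -0.1], [-1.0, -1.0]))
```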

Diagram 2: Conditional generation workflow with a VAE.

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Computational Tools for AI-Driven Drug Design

| Tool / Resource | Category | Primary Function |
|---|---|---|
| RDKit | Cheminformatics Library | Open-source toolkit for molecule I/O (SMILES, SDF), descriptor calculation, fingerprint generation, and substructure search. |
| PyTorch Geometric / DGL | Deep Learning Library | Specialized libraries for building and training Graph Neural Networks (GNNs) on molecular graph data. |
| Open Babel / MDAnalysis | Molecular Conversion & Analysis | Converts between molecular file formats and performs trajectory analysis for 3D molecular dynamics data. |
| AutoDock Vina / GNINA | Molecular Docking Software | Performs in silico docking of generated molecules into target protein pockets to estimate binding pose and affinity. |
| ChEMBL / PubChem | Bioactivity Database | Public repositories of curated bioactivity data (e.g., IC₅₀, Ki) for training predictive ML models. |
| ZINC / Enamine REAL | Compound Library | Commercial or virtual catalogs of purchasable compounds for virtual screening and training generative models on "real" chemical space. |
| SAscore | Synthetic Accessibility | Algorithm to estimate the ease of synthesis for a generated molecule, a critical post-generation filter. |
| OMEGA / CONFORMER | Conformation Generation | Software to generate biologically relevant 3D conformations from 1D/2D representations for downstream 3D modeling. |

Integrated Pipeline & Future Outlook

The convergence of these paradigms creates a powerful, iterative feedback loop for principled drug design: Generative AI proposes novel structures, which are encoded via Molecular Representations (Graphs, 3D) and evaluated by predictive Machine Learning models for multiple parameters (potency, pharmacokinetics, safety). The results of these predictions then inform the next cycle of generation.

Future research directions include the development of unified models that seamlessly operate across 1D, 2D, and 3D representations, the integration of biological sequence data (e.g., for target-aware generation), and the adoption of reinforcement learning frameworks where the generative agent is optimized against a complex, multi-parameter reward function. The overarching thesis remains clear: the deliberate and integrated application of these AI paradigms is transforming de novo drug design from a high-risk art into a principled, engineering discipline.

Within the broader thesis that AI-driven de novo drug design represents a paradigm shift from screening to generative creation, the central promise is the ab initio generation of novel, optimal, and synthetically accessible chemical entities. This whitepaper details the technical core of achieving this promise, moving beyond simple generation to the creation of molecules that satisfy a complex multi-objective optimization landscape encompassing potency, selectivity, ADMET (Absorption, Distribution, Metabolism, Excretion, Toxicity), and synthetic feasibility.

Foundational Architectures & Quantitative Benchmarks

Current state-of-the-art relies on deep generative models trained on vast chemical libraries. Their performance is benchmarked on standard tasks.

Table 1: Performance Benchmarks of Key Generative Architectures (2023-2024)

| Model Architecture | Primary Task | Key Metric | Reported Performance | Dataset |
|---|---|---|---|---|
| GPT-based (ChemGPT) | Next-token prediction (SMILES/SELFIES) | Validity (unconditional) | 97.2% | ZINC15, ChEMBL |
| Variational Autoencoder (VAE) | Latent space representation | Reconstruction Accuracy | 92.5% | MOSES |
| Generative Adversarial Net (GAN) | Distribution learning | Fréchet ChemNet Distance (FCD)↓ | 0.82 | GuacaMol |
| Graph Neural Network (GNN) | Direct graph generation | Uniqueness @ 10k samples | 99.8% | QM9 |
| Reinforcement Learning (RL) | Objective-driven optimization | Success Rate (DRD2 target) | 95.1% | ZINC250k |
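Validity, uniqueness, and novelty in benchmarks of this kind are simple ratios, and it helps to be explicit about their denominators. The sketch below follows the common convention (validity over all samples, uniqueness over valid samples, novelty over unique valid samples) and assumes SMILES are already canonicalized. `is_valid` is a caller-supplied function; in practice it might check that RDKit's `Chem.MolFromSmiles` returns a molecule, while the test uses a toy rule.

```python
def generation_metrics(generated, training_set, is_valid):
    """Validity/uniqueness/novelty ratios for a list of canonical SMILES strings."""
    valid = [s for s in generated if is_valid(s)]
    unique = set(valid)
    novel = unique - set(training_set)
    return {
        "validity": len(valid) / len(generated) if generated else 0.0,
        "uniqueness": len(unique) / len(valid) if valid else 0.0,
        "novelty": len(novel) / len(unique) if unique else 0.0,
    }


if __name__ == "__main__":
    toy_valid = lambda s: "?" not in s  # placeholder validity check for illustration only
    print(generation_metrics(["CCO", "CCO", "c1ccccc1", "C?C", "CCN"], ["CCO"], toy_valid))
```

Because each ratio uses a different denominator, a model can score near 100% on uniqueness and novelty while having low validity, so the three numbers are best reported together.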

Core Experimental Protocol: Iterative AI-Driven Design Cycle

This protocol describes a standard workflow for generating novel chemical entities against a specific biological target.

Protocol Title: Integrated De Novo Design Cycle with Multi-Objective Optimization

Objective: To generate novel, drug-like compounds with predicted high affinity for Target X and favorable ADMET profiles.

Materials & Methods:

Target Profiling & Goal Definition:

- Define the chemical space constraints (e.g., MW < 500, LogP < 5).

- Establish quantitative objectives: pIC50 > 8.0 (from docking/QSAR), high selectivity vs. related targets, and predicted scores for permeability (e.g., Caco-2 > 5e-6 cm/s), metabolic stability (e.g., t1/2 > 30 min), and absence of structural alerts.
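The constraints and objectives in this step can be encoded as explicit bounds so that every generated molecule is checked the same way. This is a minimal sketch assuming the properties (MW, LogP, predicted pIC50, Caco-2 permeability, half-life) and any structural-alert hits have already been predicted upstream; the dictionary keys are hypothetical, and selectivity is omitted for brevity.

```python
def within_bounds(props, bounds):
    """True if every constrained property lies strictly inside its (low, high) bounds."""
    for name, (low, high) in bounds.items():
        value = props[name]
        if low is not None and not value > low:
            return False
        if high is not None and not value < high:
            return False
    return True


# Chemical-space constraints and quantitative objectives from the protocol text.
CHEMICAL_SPACE = {"mw": (None, 500.0), "logp": (None, 5.0)}
OBJECTIVES = {"pic50": (8.0, None), "caco2_cm_s": (5e-6, None), "t_half_min": (30.0, None)}


def meets_goals(props):
    return (within_bounds(props, CHEMICAL_SPACE)
            and within_bounds(props, OBJECTIVES)
            and not props["structural_alerts"])


if __name__ == "__main__":
    mol = {"mw": 420.0, "logp": 3.1, "pic50": 8.4, "caco2_cm_s": 1.2e-5,
           "t_half_min": 45.0, "structural_alerts": []}
    print(meets_goals(mol))
```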

Model Initialization & Conditioning:

- Initialize a pre-trained generative model (e.g., a GNN-based generator).

- Condition the model using a 3D pharmacophore model or a fingerprint of the target's active site, derived from its crystal structure or a homology model.

Generation & Initial Filtering:

- Generate 50,000 novel molecular graphs.

- Apply rule-based filters (e.g., PAINS, REOS) to remove undesirable chemotypes. Expected attrition: ~40%.

In Silico Evaluation & Scoring:

- Docking: Dock the remaining compounds into the target's binding site using Glide SP or AutoDock Vina. Retain top 20% by docking score.

- ADMET Prediction: Pass the compounds through a suite of QSAR models (e.g., ADMETlab 3.0 or proprietary models) for pharmacokinetic and toxicity endpoints.

- Synthetic Accessibility (SA): Score compounds using the SAScore or a retrosynthesis-based model (e.g., AiZynthFinder).

Multi-Objective Optimization & RL Fine-Tuning:

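- Formulate a composite reward function: R = w1*(-DockingScore) + w2*f(LogD) + w3*CYP3A4score + w4*(1/SAScore). The docking score is negated because more negative scores indicate stronger predicted binding, f(LogD) rewards a target LogD range rather than ever-higher lipophilicity, CYP3A4score is oriented so that higher values mean lower predicted CYP3A4 liability, and each term is normalized to a common scale before weighting.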

- Use a policy gradient RL algorithm (e.g., REINFORCE, PPO) to fine-tune the generative model, encouraging it to produce molecules that maximize R.

- Iterate the generation, filtering, evaluation, and fine-tuning stages for 10-20 cycles, generating 10,000 molecules per cycle.
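One way to build a normalized composite reward of this kind is to map each raw prediction onto [0, 1], with 1 always meaning "better", and then take a weighted average. The normalization ranges below (docking scores between -12 and -6, a LogD window of 1 to 3, CYP3A4 expressed as a predicted inhibition probability) are illustrative assumptions rather than recommended values; they should be set per project.

```python
def clip01(x):
    return max(0.0, min(1.0, x))


def normalized_terms(dock, logd, cyp3a4_prob, sa):
    """Map raw predictions to [0, 1] where 1 is best. All ranges are assumptions."""
    return {
        # Docking: more negative is better; map -12..-6 kcal/mol onto 1..0.
        "dock": clip01((-dock - 6.0) / 6.0),
        # LogD: full credit inside an assumed 1..3 window, linear decay outside it.
        "logd": 1.0 if 1.0 <= logd <= 3.0 else clip01(1.0 - min(abs(logd - 1.0), abs(logd - 3.0))),
        # CYP3A4: predicted probability of inhibition, so lower is better.
        "cyp": 1.0 - clip01(cyp3a4_prob),
        # SAscore runs from 1 (easy) to 10 (hard), so 1/SA lies in 0.1..1.
        "sa": 1.0 / sa,
    }


def composite_reward(terms, weights):
    """Weighted average of normalized terms; stays in [0, 1] for non-negative weights."""
    return sum(weights[k] * terms[k] for k in weights) / sum(weights.values())


if __name__ == "__main__":
    w = {"dock": 0.4, "logd": 0.2, "cyp": 0.2, "sa": 0.2}
    print(composite_reward(normalized_terms(-10.5, 2.4, 0.3, 2.8), w))
```

Normalizing before weighting keeps the weights interpretable as relative priorities; without it, the term with the largest raw magnitude (often the docking score) silently dominates the reward.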

Final Selection & In Vitro Validation:

- Cluster the top 200 molecules from the final cycle by scaffold.

- Select 20-30 representative, synthetically tractable candidates for synthesis and in vitro testing.
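The final selection step, which clusters by scaffold and then picks representatives, can be sketched as a round-robin over scaffold groups so that one dominant series does not fill every slot. This assumes each molecule already has a scaffold key (for example, a Bemis-Murcko scaffold SMILES from RDKit's `MurckoScaffold` module) and a final reward; the tuple layout and `pick_diverse` name are hypothetical.

```python
from collections import defaultdict


def pick_diverse(molecules, n_select):
    """molecules: list of (name, scaffold, reward). Round-robin over scaffold clusters."""
    by_scaffold = defaultdict(list)
    for name, scaffold, reward in molecules:
        by_scaffold[scaffold].append((reward, name))
    for members in by_scaffold.values():
        members.sort(reverse=True)  # best-scoring member of each cluster first
    # Visit clusters in order of their best member so the strongest series are sampled first.
    clusters = sorted(by_scaffold.values(), key=lambda m: m[0][0], reverse=True)
    picked, depth = [], 0
    while len(picked) < n_select and any(depth < len(c) for c in clusters):
        for c in clusters:
            if depth < len(c) and len(picked) < n_select:
                picked.append(c[depth][1])
        depth += 1
    return picked


if __name__ == "__main__":
    mols = [("m1", "A", 0.9), ("m2", "A", 0.8), ("m3", "B", 0.85), ("m4", "C", 0.5), ("m5", "B", 0.7)]
    print(pick_diverse(mols, 4))
```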

The Scientist's Toolkit: Key Research Reagent Solutions

| Tool/Reagent | Provider/Example | Function in De Novo Design |
|---|---|---|
| Chemical Databases | ZINC20, ChEMBL35, PubChem | Source of training data for generative models; provides known actives for validation. |
| Generative Model Software | REINVENT, MolecularAI, PyTorch/TensorFlow GNN libs | Core engine for generating novel molecular structures. |
| Docking Suite | Schrödinger Glide, OpenEye FRED, AutoDock-GPU | Predicts binding pose and affinity of generated molecules to the target. |
| ADMET Prediction Platform | ADMETlab 3.0, Schrödinger QikProp, StarDrop | Provides in silico estimates of pharmacokinetic and toxicity properties. |
| Synthetic Accessibility Tool | RDKit (SAScore), AiZynthFinder (ICSYN), ASKCOS | Evaluates the feasibility of synthesizing the AI-generated molecule. |
| High-Throughput Chemistry | Solid-phase synthesis plates, automated liquid handlers, flow reactors | Enables rapid physical synthesis of the top AI-generated candidates for testing. |

Visualizing the Integrated Workflow

AI-Driven De Novo Design Cycle

RL Fine-Tuning of a Generative Model

The central promise is being realized through integrated cycles of generation, multi-faceted in silico validation, and iterative optimization via reinforcement learning. The future trajectory within this thesis framework points toward the direct incorporation of physiological systems-level modeling (e.g., PK/PD simulations) into the generation loop and the use of foundational models trained on broader biochemical data, moving from generating optimal chemical entities to predicting optimal therapeutic outcomes.

Thesis Context: This whitepaper provides a technical foundation for the application of Artificial Intelligence in de novo drug design. The precise definition, quantification, and computational manipulation of these core concepts are critical for training robust AI models capable of generating novel, viable therapeutic candidates.

Quantitative Structure-Activity Relationship (QSAR)

QSAR is a computational modeling method that quantifies the relationship between a molecule's structural properties (descriptors) and its biological activity. In AI-driven de novo design, QSAR models serve as surrogate assays, enabling the rapid in silico prediction of activity for millions of generated structures.

Core Descriptors & Contemporary Data

Modern QSAR utilizes high-dimensional descriptors, often processed via machine learning algorithms.

Table 1: Key Classes of Molecular Descriptors for QSAR in AI Models

| Descriptor Class | Specific Examples | Role in AI/ML Model | Typical Value Range |
|---|---|---|---|
| Physicochemical | LogP (partition coefficient), Molecular Weight, Topological Polar Surface Area (TPSA) | Features for regression/classification; constraints for drug-likeness (e.g., Lipinski's Rule of 5). | LogP: -2 to 5, MW: 150-500 Da, TPSA: 20-130 Ų |
| Topological | Morgan Fingerprints (ECFP4), Daylight Fingerprints | Sparse, high-dimensional input for deep neural networks (DNNs) and gradient boosting. | Binary vectors of length 1024-4096 |
| Quantum Chemical | HOMO/LUMO energy, Partial Atomic Charges, Dipole Moment | Inform target binding and reactivity; used in physics-informed neural networks. | HOMO: -9 to -5 eV |
| 3-Dimensional | Molecular Shape, Steric/Electrostatic Field Maps (CoMFA) | Input for 3D-CNNs; critical for binding affinity prediction. | Grid-based continuous values |
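Binary fingerprints such as ECFP4 are most often compared with the Tanimoto coefficient, the size of the intersection of set bits divided by the size of their union; the same measure underlies novelty thresholds like "Tanimoto < 0.3 to the nearest training molecule". The sketch represents a fingerprint as a set of on-bit indices (toy values here); with RDKit, `DataStructs.TanimotoSimilarity` computes the same quantity on bit vectors.

```python
def tanimoto(bits_a, bits_b):
    """Tanimoto coefficient between two fingerprints given as collections of on-bit indices."""
    a, b = set(bits_a), set(bits_b)
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def max_similarity(query_bits, reference_fps):
    """Similarity to the nearest reference fingerprint (0.0 for an empty reference set)."""
    return max((tanimoto(query_bits, ref) for ref in reference_fps), default=0.0)


if __name__ == "__main__":
    query = {1, 2, 3}
    training = [{7, 8}, {1, 9, 10, 11}]
    print(max_similarity(query, training) < 0.3)  # "novel" under a 0.3 threshold
```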

Protocol: Building a Modern QSAR Model for AI Training

Objective: Develop a robust predictive model to integrate into a generative AI pipeline.

- Dataset Curation: From sources like ChEMBL, extract bioactivity data (e.g., IC50) for a target. Apply a clearly defined activity cutoff (e.g., IC50 < 10 µM for actives). Aim for >2000 compounds.

- Descriptor Calculation: Use RDKit or Dragon to compute 2D/3D descriptors. Generate ECFP4 fingerprints for all compounds.

- Data Preprocessing: Remove near-constant descriptors. Handle missing values (imputation or removal). Scale numerical features (StandardScaler).

- Dataset Splitting: Split into Training (70%), Validation (15%), and Hold-out Test (15%) sets. Use scaffold splitting so that test compounds come from chemical series not seen during training, which gives a more realistic estimate of performance on novel chemotypes than a random split.

- Model Training: Employ an algorithm like Gradient Boosting (XGBoost) or a DNN. Use the Validation set for hyperparameter tuning (e.g., via Bayesian optimization).

- Validation & Metrics: Evaluate on the Test set using: R² (regression), ROC-AUC (classification), and RMSE. Apply Y-randomization to confirm model significance.
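The scaffold split in this protocol can be sketched as a greedy assignment of whole scaffold groups: the largest families fill the training set first, and the remaining, rarer chemotypes spill into validation and test. This is similar in spirit to common library implementations but is not any specific library's code; it assumes scaffold keys (e.g., Bemis-Murcko scaffold SMILES) are computed beforehand.

```python
from collections import defaultdict


def scaffold_split(records, frac_train=0.7, frac_valid=0.15):
    """records: list of (compound_id, scaffold). Each scaffold group lands in exactly one split."""
    groups = defaultdict(list)
    for cid, scaffold in records:
        groups[scaffold].append(cid)
    # Largest scaffold families first, so rare chemotypes tend to end up in valid/test.
    ordered = sorted(groups.values(), key=len, reverse=True)
    n = len(records)
    train, valid, test = [], [], []
    for group in ordered:
        if len(train) + len(group) <= frac_train * n:
            train.extend(group)
        elif len(valid) + len(group) <= frac_valid * n:
            valid.extend(group)
        else:
            test.extend(group)
    return train, valid, test


if __name__ == "__main__":
    recs = [(f"a{i}", "A") for i in range(4)] + [("b0", "B"), ("b1", "B"), ("c0", "C"), ("c1", "C"),
                                                  ("d0", "D"), ("e0", "E")]
    print(scaffold_split(recs))
```

With small datasets the realized fractions can deviate noticeably from 70/15/15 because groups cannot be divided; that is the intended trade-off for keeping each chemical series on one side of the split.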

Diagram Title: QSAR Model Development Workflow for AI

Research Reagent Solutions: QSAR Modeling

Table 2: Essential Tools for QSAR Analysis

| Tool/Reagent | Function | Provider/Example |
|---|---|---|
| RDKit | Open-source cheminformatics library for descriptor/fingerprint calculation. | RDKit Community |
| Dragon | Software for calculating >5000 molecular descriptors. | Talete srl |
| ChEMBL Database | Curated database of bioactive molecules with assay data. | EMBL-EBI |
| scikit-learn / XGBoost | Python libraries for building and validating ML models. | Open Source |
| TensorFlow/PyTorch | Frameworks for building deep neural network QSAR models. | Google / Meta |

Pharmacophore Modeling

A pharmacophore is an abstract model defining the essential steric and electronic functional arrangements necessary for molecular recognition by a biological target. For AI-based generation, pharmacophores act as 3D constraints, guiding the model to produce structures that satisfy key interaction points.

Key Features & Experimental Basis

Pharmacophore features are derived from ligand-receptor interaction analysis.

Table 3: Core Pharmacophore Features and Their Structural Correlates

| Feature | Description | Typical Moiety | Experimental Source |

|---|---|---|---|

| Hydrogen Bond Donor (HBD) | Positively polarized hydrogen atom. | -OH, -NH2, -NH- | Protein-ligand crystal structure (H-bond acceptor on target). |

| Hydrogen Bond Acceptor (HBA) | Lone pair of electrons on electronegative atom. | C=O, -O-, -N | Protein-ligand crystal structure (H-bond donor on target). |

| Hydrophobic | Region of lipophilicity. | Alkyl chains, aromatic rings | Burial in hydrophobic pocket; alanine scanning mutagenesis. |

| Positive/Negative Ionizable | Groups capable of forming ionic bonds. | -NH3+ (basic), -COO- (acidic) | Interaction with oppositely charged residue (Asp, Glu, Arg, Lys). |

| Aromatic Ring | Electron-rich π-system. | Phenyl, pyridine | π-π stacking or cation-π interaction with protein side chains. |

Protocol: Structure-Based Pharmacophore Generation

Objective: Create a pharmacophore query from a protein-ligand complex for virtual screening or generative AI guidance.

- Structure Preparation: Obtain a high-resolution PDB structure (e.g., resolution < 2.5 Å). Use protein preparation tools (Schrödinger Maestro, MOE) to add hydrogens, assign bond orders, and optimize side-chain orientations.

- Ligand Interaction Analysis: Analyze the binding site. Identify key interactions: H-bonds, salt bridges, hydrophobic contacts, π-stacking.

- Feature Mapping: Using software (e.g., LigandScout, Phase), map the observed interactions to pharmacophore features. Exclude features formed by non-essential parts of the ligand.

- Constraint Definition: Define geometric constraints (tolerances, angles) for each feature based on observed distances in the crystal structure. Define excluded volumes based on protein shape to prevent steric clash.

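- Validation: Validate the model by screening a decoy set seeded with known actives and measuring the enrichment factor (EF), i.e., how much more often actives appear among the top-ranked hits than would be expected at random.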
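The enrichment factor from the validation step can be computed as below. This sketch assumes a higher pharmacophore-fit score means a better match and that actives and decoys are labelled with a boolean list; the 1% default cut-off (`fraction`) is an illustrative choice, not a fixed standard.

```python
def enrichment_factor(scores, is_active, fraction=0.01):
    """Enrichment factor at a given fraction of the ranked screening list.

    EF = (actives in top x% / compounds in top x%) / (total actives / total compounds).
    Higher fit scores are assumed to rank first.
    """
    n = len(scores)
    n_top = max(1, int(round(fraction * n)))
    ranked = sorted(range(n), key=lambda i: scores[i], reverse=True)
    hits_top = sum(1 for i in ranked[:n_top] if is_active[i])
    total_actives = sum(1 for a in is_active if a)
    if total_actives == 0:
        raise ValueError("the screening set needs at least one known active")
    return (hits_top / n_top) / (total_actives / n)
```

An EF of 1 means the model ranks actives no better than random; the maximum achievable value at a given cut-off is limited by the ratio of compounds to actives.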

Diagram Title: From Crystal Structure to AI-Usable Pharmacophore

ADMET

ADMET (Absorption, Distribution, Metabolism, Excretion, Toxicity) properties determine the pharmacokinetic and safety profile of a drug candidate. AI models for de novo design should incorporate predictive ADMET filters early in the generation process to prioritize compounds with a higher probability of in vivo success.

Key Parameters & Predictive Endpoints

Table 4: Critical ADMET Properties and Their Impact on Drug Design

| Property | Definition & Measure | Ideal Range/Profile | Common AI Prediction Model |

|---|---|---|---|

| Absorption (Caco-2 Permeability) | In vitro model of intestinal permeability. | Papp > 1 × 10⁻⁶ cm/s (high permeability) | Binary Classifier (High/Low) |

| Hepatocyte Clearance | Intrinsic clearance in human liver cells. | Low clearance (< 50% liver blood flow) | Regression (mL/min/kg) |

| CYP450 Inhibition | Inhibition of major metabolizing enzymes (e.g., CYP3A4). | IC50 > 10 µM (low risk of drug-drug interaction) | Binary Classifier (Inhibitor/Non-Inhibitor) |

| hERG Blockade | Inhibition of potassium channel linked to cardiotoxicity. | IC50 > 10 µM (low risk) | Binary Classifier (Risk/No Risk) |

| Ames Test | Bacterial assay for mutagenicity. | Non-mutagen | Binary Classifier (Mutagen/Non-Mutagen) |

| Volume of Distribution (Vd) | Apparent volume into which a drug distributes. | Vd > 0.15 L/kg (not overly restricted to plasma) | Regression (L/kg) |

Protocol: Integrating ADMET Predictions into an AI Generation Loop

Objective: Implement a multi-parameter ADMET filter within a generative AI pipeline (e.g., a Variational Autoencoder or Reinforcement Learning agent).

- Model Ensemble: For each ADMET endpoint, train or procure a validated predictive model (e.g., using ADMETlab 3.0 or proprietary models).

- Threshold Definition: Set acceptable thresholds for each property based on project goals (e.g., "CYP3A4 inhibition probability < 0.3").

- Pipeline Integration: After the AI generator proposes a new molecular structure (SMILES), parse it into a molecule object (e.g., with RDKit) and compute the relevant descriptors.

- Parallel Prediction: Pass the descriptors through the ensemble of ADMET models to obtain a vector of predictions.

- Scoring & Filtering: Apply a weighted scoring function (or a hard filter) to the predictions. Compounds passing the threshold are retained for further exploration; others are penalized or discarded.

- Iterative Feedback: Use the ADMET score as part of the reinforcement learning reward or as a loss term to steer the generator towards favorable chemical space.
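The scoring and filtering steps can be combined into a single function that either discards a molecule or returns a weighted score usable as a reward term. The endpoint names, thresholds, and weights below are hypothetical placeholders for whatever models (e.g., ADMETlab 3.0 outputs or in-house classifiers) and project goals apply; each model is assumed to return a probability.

```python
# Hypothetical endpoints and limits: a molecule fails if any probability reaches its limit.
HARD_LIMITS = {
    "cyp3a4_inhibitor_prob": 0.3,
    "herg_blocker_prob": 0.5,
    "ames_mutagen_prob": 0.5,
}
# Hypothetical weights for the soft score: reward permeability, penalize liabilities.
WEIGHTS = {
    "caco2_high_prob": 0.4,
    "cyp3a4_inhibitor_prob": -0.3,
    "herg_blocker_prob": -0.3,
}


def admet_score(pred):
    """Return (passes_hard_filter, weighted_score) for one molecule's predictions."""
    passes = all(pred[k] < limit for k, limit in HARD_LIMITS.items())
    score = sum(w * pred[k] for k, w in WEIGHTS.items())
    return passes, score


def rl_reward(pred, penalty=-1.0):
    """ADMET reward term: the weighted score if the hard filter passes, else a fixed penalty."""
    passes, score = admet_score(pred)
    return score if passes else penalty
```

Keeping the hard filter separate from the soft score makes it easy to switch between discarding molecules and merely penalizing them, the two options described in the scoring step.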

Diagram Title: ADMET Prediction Loop in AI-Driven Generation

Research Reagent Solutions: ADMET Prediction

Table 5: Key Resources for ADMET Modeling

| Tool/Reagent | Function | Provider/Example |

|---|---|---|

| ADMETlab 3.0 | Web-based platform for comprehensive ADMET property prediction. | Xundrug Lab |

| Schrödinger QikProp | Software for rapid prediction of physicochemical and ADMET properties. | Schrödinger |

| Liver Microsomes / Hepatocytes | In vitro reagents for experimental metabolic stability assays. | Thermo Fisher, Corning |

| Caco-2 Cell Line | Cell line for in vitro permeability assessment. | ATCC |

| hERG Assay Kits | In vitro kits (binding or functional) for cardiotoxicity screening. | Eurofins, DiscoverX |

The Chemical Space

Chemical space is the multi-dimensional descriptor space encompassing all possible organic molecules. For drug discovery, the relevant region is "drug-like" chemical space. AI for de novo design operates by learning the distribution of known bioactive molecules within this space and generating novel points (molecules) within promising, under-explored regions.

Quantifying & Navigating Chemical Space

Table 6: Metrics for Characterizing Chemical Space in Drug Discovery

| Metric/Tool | Description | Application in AI Design | Typical Scale |

|---|---|---|---|

| Molecular Similarity (Tanimoto) | Jaccard index based on fingerprint overlap. | Assess novelty of AI-generated compounds vs. training set. | 0 (dissimilar) to 1 (identical). Novelty if < 0.4 |

| Scaffold Analysis (Murcko) | Decomposition into core ring systems and linkers. | Analyze diversity of generated compounds; avoid over-representation. | Number of unique Bemis-Murcko scaffolds. |

| Principal Component Analysis (PCA) | Dimensionality reduction to visualize chemical space. | Map training set, generated compounds, and known actives in 2D/3D. | First 3 PCs often explain ~30-50% variance. |

| t-Distributed Stochastic Neighbor Embedding (t-SNE) | Non-linear dimensionality reduction for cluster visualization. | Identify distinct clusters of generated compounds. | Used for qualitative pattern recognition. |

| Synthetic Accessibility Score (SAscore) | Score estimating ease of synthesis (1=easy, 10=hard). | Filter or penalize generated compounds that are unrealistic to synthesize. | Target SAscore < 4.5 for lead-like compounds. |

Protocol: Mapping and Analyzing the Output of a Generative AI Model

Objective: Evaluate the chemical space coverage and novelty of molecules generated by an AI agent.

- Reference Set Curation: Compile a relevant reference set (e.g., all known ligands for the target from ChEMBL, plus drugs in relevant therapeutic area).

- AI Generation: Run the trained generative model to produce a large set of novel molecules (e.g., 10,000 SMILES).

- Descriptor Calculation & Dimensionality Reduction: Compute ECFP4 fingerprints for both the reference set and the generated set. Use PCA to reduce them to 50 principal components, then project to 2D for visualization with a non-linear method such as t-SNE or UMAP.

- Spatial Analysis: Plot the 2D maps. Calculate the centroid and density of the reference set. Plot the generated molecules overlaid.

- Quantitative Metrics: Calculate: a) Novelty: % of generated molecules with Tanimoto < 0.4 to nearest neighbor in reference set. b) Diversity: Mean pairwise Tanimoto distance among generated molecules. c) Scaffold Hop: Identify novel Murcko scaffolds not present in the reference set.

- Synthetic Accessibility Filter: Apply an SAscore filter to remove unrealistic compounds from the final proposed list.
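The novelty and diversity metrics in the protocol can be computed from fingerprints represented as sets of on-bit indices. In practice these sets would come from RDKit Morgan fingerprints (radius 2, roughly equivalent to ECFP4), where `DataStructs.TanimotoSimilarity` does the same job; the set representation here only keeps the sketch dependency-free. The 0.4 novelty threshold follows Table 6.

```python
from itertools import combinations


def tanimoto(a, b):
    """Tanimoto (Jaccard) similarity of two fingerprints given as sets of on-bit indices."""
    if not a and not b:
        return 1.0
    inter = len(a & b)
    return inter / (len(a) + len(b) - inter)


def novelty(generated, reference, threshold=0.4):
    """Fraction of generated fingerprints whose nearest reference neighbour has Tanimoto < threshold."""
    novel = sum(
        1 for g in generated if max(tanimoto(g, r) for r in reference) < threshold
    )
    return novel / len(generated)


def internal_diversity(generated):
    """Mean pairwise Tanimoto distance (1 - similarity) among generated fingerprints."""
    pairs = list(combinations(generated, 2))
    return sum(1.0 - tanimoto(a, b) for a, b in pairs) / len(pairs)
```

Both loops are quadratic in set size, so for libraries of 10,000 molecules a vectorized bulk-similarity routine is the practical choice.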

Diagram Title: Mapping AI Outputs onto Chemical Space

The AI Toolkit: Architectures and Workflows for Generating Novel Drug Candidates

The application of Artificial Intelligence (AI) to de novo drug design represents a paradigm shift in pharmaceutical research. The central thesis of this whitepaper posits that the strategic integration of three core generative model architectures—Variational Autoencoders (VAEs), Generative Adversarial Networks (GANs), and Transformers—can systematically address the multidimensional challenges of molecular generation, optimization, and validation. This guide provides an in-depth technical examination of these architectures within the context of generating novel, synthetically accessible, and biologically active molecular entities.

Core Architectural Principles and Comparative Analysis

Variational Autoencoders (VAEs) for Latent Space Exploration

VAEs provide a probabilistic framework for learning a continuous, structured latent representation of molecular data. In drug design, this latent space enables smooth interpolation and exploration of chemical properties.

Architecture & Loss Function: A VAE consists of an encoder \( q_\phi(z \mid x) \) that maps a molecular representation \( x \) to a latent variable \( z \), and a decoder \( p_\theta(x \mid z) \) that reconstructs the molecule from \( z \). The model is trained by maximizing the Evidence Lower Bound (ELBO):

\[ \mathcal{L}(\theta, \phi; x) = \mathbb{E}_{q_\phi(z \mid x)}\left[\log p_\theta(x \mid z)\right] - D_{KL}\left(q_\phi(z \mid x) \parallel p(z)\right) \]

where \( p(z) \) is typically a standard normal prior \( \mathcal{N}(0, I) \). The first term is the expected reconstruction log-likelihood (its negative is the reconstruction loss), and the KL divergence term regularizes the latent space by keeping the approximate posterior close to the prior.

Application: Primarily used for generating molecules with desired properties by performing gradient-based optimization in the continuous latent space.
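The two ELBO terms become concrete for the usual diagonal-Gaussian encoder, where the KL divergence to \( \mathcal{N}(0, I) \) has a closed form and sampling uses the reparameterization trick. This is a framework-free sketch: `recon_log_likelihood` stands in for the decoder's log-probability of the input SMILES or graph, which a real model would compute in PyTorch or TensorFlow, and `beta` is the weight a KL-annealing schedule would ramp from 0 to 1.

```python
import math
import random


def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, I) (the reparameterization trick)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """Closed-form D_KL( N(mu, diag(sigma^2)) || N(0, I) ) summed over latent dimensions."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, log_var))


def negative_elbo(recon_log_likelihood, mu, log_var, beta=1.0):
    """Loss to minimise: -E[log p(x|z)] + beta * KL, a single-sample estimate of -ELBO when beta = 1."""
    return -recon_log_likelihood + beta * kl_to_standard_normal(mu, log_var)
```

Minimizing this loss is equivalent to maximizing the ELBO; the KL term is zero only when the encoder outputs exactly the prior.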

Generative Adversarial Networks (GANs) for High-Fidelity Generation

GANs frame generation as an adversarial game between a generator ( G ) and a discriminator ( D ). The generator learns to produce realistic molecules from noise, while the discriminator learns to distinguish real from generated samples.

Minimax Objective:

\[ \min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{data}(x)}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z(z)}\left[\log\left(1 - D(G(z))\right)\right] \]

Application: Can generate realistic, novel molecular structures whose distribution closely matches the training data; sample quality is often reported to exceed that of VAEs. Challenges include mode collapse and training instability.
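A Monte Carlo estimate of \( V(D, G) \) from a batch of discriminator outputs shows how the two players pull on the same quantity. This sketch assumes the discriminator's outputs are probabilities in (0, 1); the small `eps` clamp only guards against `log(0)`.

```python
import math


def gan_value(d_real, d_fake, eps=1e-12):
    """Monte Carlo estimate of V(D, G) from discriminator outputs.

    d_real: D(x) on a batch of real molecules; d_fake: D(G(z)) on generated ones.
    The discriminator ascends this value and the generator descends it.
    """
    real_term = sum(math.log(max(p, eps)) for p in d_real) / len(d_real)
    fake_term = sum(math.log(max(1.0 - p, eps)) for p in d_fake) / len(d_fake)
    return real_term + fake_term
```

When \( D \) outputs 0.5 everywhere, as at the theoretical equilibrium where the generated and data distributions coincide, the value equals \( -\log 4 \). In practice the generator is often trained to maximize \( \log D(G(z)) \) instead (the non-saturating variant), because it gives stronger gradients early in training.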

Transformers for Sequence-Based De Novo Design

Transformers, based on the self-attention mechanism, process sequential representations of molecules (e.g., SMILES, SELFIES) without recurrent connections. They model the conditional probability of a token given all previous tokens.

Self-Attention Mechanism:

\[ \text{Attention}(Q, K, V) = \text{softmax}\left(\frac{QK^T}{\sqrt{d_k}}\right)V \]

where \( Q \), \( K \), and \( V \) are the query, key, and value matrices and \( d_k \) is the key dimension.

Application: State-of-the-art for autoregressive molecular generation, capturing long-range dependencies in molecular sequences. Can be fine-tuned for property prediction and conditioned generation.
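The attention formula, combined with the causal mask used in autoregressive generation, can be written out explicitly for small inputs. This is a single-head, pure-Python illustration on lists of row vectors (no learned projections, batching, or multi-head logic), meant only to show that each position attends to itself and earlier tokens.

```python
import math


def softmax(row):
    """Numerically stable softmax of a list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    s = sum(exps)
    return [e / s for e in exps]


def causal_attention(Q, K, V):
    """softmax(Q K^T / sqrt(d_k)) V with a causal mask, on lists of row vectors.

    Position i only scores positions j <= i; leaving future positions out is
    equivalent to masking them with -inf before the softmax.
    """
    d_k = len(K[0])
    out = []
    for i, q in enumerate(Q):
        scores = [
            sum(a * b for a, b in zip(q, K[j])) / math.sqrt(d_k) for j in range(i + 1)
        ]
        weights = softmax(scores)
        out.append(
            [sum(w * V[j][c] for j, w in enumerate(weights)) for c in range(len(V[0]))]
        )
    return out
```

Because the first output row can only see the first token, the model can be trained on whole SMILES or SELFIES strings at once while still generating them token by token.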

Quantitative Comparison of Architectures

Table 1: Comparative Analysis of Generative Models for Drug Design

| Feature | VAE | GAN | Transformer |

|---|---|---|---|

| Training Stability | High | Low | Moderate-High |

| Explicit Latent Space | Yes | No | No (usually) |

| Generation Diversity | Moderate | Can suffer from mode collapse | High |

| Sample Quality | Good | Very High | State-of-the-Art |

| Property Optimization | Straightforward via gradient-based search in the continuous latent space | Requires RL or auxiliary networks | Via conditional generation |

| Primary Molecular Representation | Graph, Fingerprint, SMILES | Graph, SMILES | SMILES, SELFIES |

| Typical Validity Rate (%) | 60-90% | 70-100% | 85-100% (with SELFIES) |

| Novelty Rate (%) | 80-95% | 90-100% | 90-100% |

Experimental Protocols for Model Evaluation in Drug Design

A robust evaluation framework is critical for assessing generative models in a scientific context. Below are detailed protocols for key experiments.

Protocol 1: Benchmarking Molecular Generation Performance

- Data Preparation: Curate a standardized dataset (e.g., ZINC250k, MOSES). Split into training (80%), validation (10%), and test (10%) sets. Represent molecules as canonical SMILES or SELFIES strings.

- Model Training: Train VAE, GAN, and Transformer models on the training set. For VAE, use a KL annealing schedule. For GAN, employ techniques like WGAN-GP or Spectral Normalization for stability. For Transformer, use a standard language modeling objective.

- Generation & Metrics: Generate 10,000 molecules from each trained model. Evaluate using:

  - Validity: Percentage of chemically valid molecules (using RDKit).

  - Uniqueness: Percentage of unique molecules among valid ones.

  - Novelty: Percentage of unique, valid molecules not present in the training set.

  - Fréchet ChemNet Distance (FCD): Measures distributional similarity to a reference set (e.g., test set) using activations from the ChemNet network.

- Analysis: Tabulate metrics for comparative analysis.
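The validity, uniqueness, and novelty definitions in this protocol can be computed directly once each sample has been parsed. This sketch assumes canonical SMILES were produced upstream (e.g., by round-tripping each string through RDKit) and that unparseable samples are recorded as `None`; FCD is omitted because it requires the pre-trained ChemNet model.

```python
def generation_metrics(generated, training_set):
    """Validity, uniqueness and novelty of a batch of generated molecules.

    `generated` holds one canonical SMILES per sample, or None where parsing
    failed; canonicalization is assumed so identical molecules compare equal.
    """
    valid = [s for s in generated if s is not None]
    unique = set(valid)
    novel = unique - set(training_set)
    return {
        "validity": len(valid) / len(generated),
        "uniqueness": len(unique) / len(valid) if valid else 0.0,
        "novelty": len(novel) / len(unique) if unique else 0.0,
    }
```

Each metric uses the previous one's output as its denominator, so a model that emits many duplicates can report high novelty while producing few distinct new molecules; reporting all three together avoids that blind spot.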

Protocol 2: Latent Space Property Optimization (VAE-specific)

- Property Predictor Training: Train a separate feed-forward neural network to predict a target property (e.g., LogP, QED) from the VAE's latent vectors using the training set molecules and their property values.

- Latent Space Navigation: For a chosen seed molecule, encode it to obtain its latent vector \( z_{seed} \).

- Gradient-Based Optimization: Calculate the gradient of the property predictor with respect to \( z \). Update \( z \) via gradient ascent, \( z_{new} = z_{seed} + \alpha \nabla_z P(z_{seed}) \), where \( P \) is the property predictor and \( \alpha \) is the step size; use a minus sign instead (gradient descent) when the property should be minimized.

- Decoding & Validation: Decode \( z_{new} \) to generate a new molecule. Validate its chemical structure and computationally verify the target property.
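Repeating the gradient-ascent update gives a simple latent-space optimizer. In a real pipeline the gradient would come from automatic differentiation of the trained property network; here a hypothetical quadratic predictor with a known maximum (`toy_property`, `toy_grad`, `Z_STAR`) stands in so the behaviour can be checked.

```python
def optimize_latent(z_seed, grad_fn, alpha=0.1, steps=50):
    """Repeated gradient ascent z <- z + alpha * grad P(z), starting from z_seed."""
    z = list(z_seed)
    for _ in range(steps):
        g = grad_fn(z)
        z = [zi + alpha * gi for zi, gi in zip(z, g)]
    return z


# Hypothetical stand-in for a trained property predictor:
# P(z) = -||z - Z_STAR||^2, maximised at Z_STAR, with gradient -2 (z - Z_STAR).
Z_STAR = [1.0, -0.5]


def toy_property(z):
    return -sum((a - b) ** 2 for a, b in zip(z, Z_STAR))


def toy_grad(z):
    return [-2.0 * (a - b) for a, b in zip(z, Z_STAR)]
```

Large steps can push \( z \) into regions the prior rarely covers, where decoding may fail or yield unrealistic molecules, which is why the decoding and validation step matters.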

Protocol 3: In Silico Validation Pipeline for Generated Candidates

- ADMET Filtering: Pass generated molecules through a series of computational filters for Absorption, Distribution, Metabolism, Excretion, and Toxicity using tools like QikProp or admetSAR.

- Docking Simulation: Prepare protein target (PDB ID) using AutoDock Tools (add hydrogens, assign charges). Generate 3D conformers for the filtered molecules. Perform molecular docking using AutoDock Vina or Glide to estimate binding affinity (kcal/mol).

- Synthetic Accessibility (SA) Score: Calculate the SA Score for top-ranked docked compounds to prioritize synthetically feasible molecules.
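Combining docking and synthetic accessibility into a final shortlist can be as simple as filtering and sorting. The dictionary keys, the SA cut-off of 4.5 (taken from the lead-like target in Table 6), and the shortlist size are illustrative assumptions; Vina-style scores are treated as lower-is-better.

```python
def prioritize(candidates, sa_max=4.5, top_n=10):
    """Keep synthetically accessible candidates and rank them by docking score.

    Each candidate is a dict with hypothetical keys 'id', 'vina_kcal_mol'
    (more negative = stronger predicted binding) and 'sa_score' (1 = easy, 10 = hard).
    """
    feasible = [c for c in candidates if c["sa_score"] <= sa_max]
    return sorted(feasible, key=lambda c: c["vina_kcal_mol"])[:top_n]
```

A hard SA cut-off is the simplest policy; a project may instead prefer a combined score so that an exceptional binder with a moderate SA score is not discarded outright.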

Visualization of Core Concepts and Workflows

Title: VAE Architecture for Molecular Generation & Optimization

Title: Adversarial Training Cycle of a Molecular GAN

Title: Autoregressive Molecular Generation with a Transformer

The Scientist's Toolkit: Essential Research Reagents & Software

Table 2: Key Resources for AI-Driven De Novo Drug Design Experiments

| Category | Item / Software | Primary Function in Research |

|---|---|---|

| Core Development Frameworks | PyTorch, TensorFlow, JAX | Provides flexible libraries for building, training, and evaluating deep generative models. |

| Cheminformatics Toolkits | RDKit, Open Babel | Handles molecule I/O, descriptor calculation, validity checks, substructure search, and chemical transformations. |

| Molecular Docking | AutoDock Vina, GNINA, Schrödinger Glide (Commercial) | Performs in silico binding affinity prediction by simulating the fit of a generated molecule into a protein target's binding site. |

| ADMET Prediction | admetSAR, SwissADME, ProTox-II | Computationally predicts pharmacokinetic and toxicity profiles of generated molecules. |

| Benchmark Datasets | ZINC, ChEMBL, MOSES Benchmark | Provides curated, publicly available molecular structures for training and standardized evaluation of generative models. |

| High-Performance Computing | NVIDIA GPUs (e.g., A100, V100), Google Colab, AWS EC2 | Accelerates model training and enables large-scale virtual screening of generated libraries. |

| Visualization & Analysis | Matplotlib, Seaborn, DeepChem, t-SNE/UMAP | Enables plotting of chemical space, latent space visualization, and analysis of model results. |

| Molecular Representation | SELFIES (Self-Referencing Embedded Strings) | A robust string-based molecular representation guaranteeing 100% validity, crucial for sequence-based models. |

This technical guide, framed within a broader thesis on AI for de novo drug design principles, explores the application of Reinforcement Learning (RL) to generate novel molecular structures optimized for multiple, often competing, pharmacological objectives. Moving beyond single-property optimization, this paradigm addresses the real-world complexity of drug development, where candidates must simultaneously satisfy criteria such as potency, selectivity, synthetic accessibility, and favorable pharmacokinetics.

Foundational RL Framework for Molecule Generation

The core formulation treats molecule generation as a sequential decision-making process. An agent (generator) constructs a molecule step-by-step (e.g., adding atoms or fragments), and a reward function provides feedback based on the final molecule's properties.

Core Components

- Agent: Typically a deep neural network (e.g., RNN, Transformer, GNN) that defines a policy π(a|s) for taking action a (e.g., adding a substructure) given the current molecular state s.

- Action Space: A set of chemically valid modifications (e.g., from a predefined vocabulary of atoms/bonds or reaction rules).

- State Representation: The intermediate molecular structure, represented as a SMILES string, molecular graph, or fragment set.

- Reward Function R(s): A critical component that calculates a scalar reward by aggregating scores from multiple objective functions.

Multi-Objective Reward Formulation

The reward function integrates \( n \) objectives:

\[ R(s) = f\left(R_1(s), R_2(s), \ldots, R_n(s)\right) \]

where \( R_i(s) \) are scores for individual objectives like QED (drug-likeness), SA (synthetic accessibility), binding affinity (docking score), and more, and \( f \) is an aggregation function (e.g., a weighted sum).
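Two common choices for the aggregation function \( f \) are a weighted arithmetic mean and a weighted geometric mean of the individual scores. This sketch assumes every \( R_i(s) \) has already been scaled to [0, 1]; the weights are project-specific.

```python
import math


def weighted_sum(scores, weights):
    """f = sum_i w_i R_i / sum_i w_i (scores assumed already scaled to [0, 1])."""
    return sum(w * s for s, w in zip(scores, weights)) / sum(weights)


def weighted_geometric_mean(scores, weights):
    """f = (prod_i R_i^w_i)^(1 / sum_i w_i); any zero score drives the reward to zero."""
    if any(s <= 0.0 for s in scores):
        return 0.0
    log_f = sum(w * math.log(s) for s, w in zip(scores, weights)) / sum(weights)
    return math.exp(log_f)
```

The geometric mean is stricter: a molecule that completely fails one objective scores zero overall, whereas the arithmetic mean lets a strong score on one objective compensate for a poor one.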

Quantitative Landscape of Multi-Objective RL for Molecules

The table below summarizes key metrics and performance benchmarks from recent studies.

Table 1: Comparative Performance of RL Methods in Multi-Objective Molecule Generation

| RL Algorithm | Key Objectives Optimized | Benchmark/Score | Success Rate (%) | Unique & Valid (%) | Reference Year |

|---|---|---|---|---|---|

| PPO (Proximal Policy Optimization) | QED, SA, Target Similarity | DRD2 (Activity) > 0.5 | ~65% | >99% | 2022 |

| REINVENT 2.0 | Activity (Docking), SA, QED, Mw | Pareto Front Size | N/A | 98.5% | 2023 |

| Multi-Objective GFlowNet | Binding Energy (AutoDock Vina), QED, SA | Dominance Ratio on Practical Pareto Front | ~40% (High-affinity) | ~100% | 2023 |

| Goal-Conditioned RL | LogP, TPSA, Target Affinity | F1-Score for Goal Achievement | 72.4% | 99.2% | 2024 |

| Dual-Objective DQN | JAK2 Inhibition, JAK3 Selectivity | Selectivity Index (SI) > 10 | 22.5% | 97.8% | 2024 |

Detailed Experimental Protocol: A Standardized Workflow

The following methodology outlines a typical multi-objective RL experiment for generating novel kinase inhibitors.

Protocol: Multi-Objective RL-Driven De Novo Design

Objective: Generate novel molecules with high predicted JAK2 kinase inhibition (pIC50 > 8) and good synthetic accessibility (SA Score < 4; lower SA scores indicate easier synthesis).

Step 1: Environment & Agent Setup

- Action Vocabulary: Define a set of ~100 chemical fragments derived from BRICS decomposition of known kinase inhibitors.

- State Representation: Use a Graph Neural Network (GNN) to encode the intermediate molecular graph.

- Agent Model: Initialize a Policy Network (3-layer GCN followed by an LSTM) with random weights.

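Step 2: Multi-Objective Reward Definition

\[ R(m) = w_1 \cdot \text{Sigmoid}\left(\text{pIC50}_{JAK2}(m) - 7\right) + w_2 \cdot \frac{10 - \text{SA}(m)}{9} - \text{Penalty}(\text{invalid}) \]

where \( w_1 = 0.7 \), \( w_2 = 0.3 \), pIC50 is predicted by a pre-trained Random Forest model, and SA is the synthetic accessibility score (1 = easy, 10 = hard). The SA term maps scores from [1, 10] onto [1, 0], so easier-to-synthesize molecules receive a higher reward, and the penalty applies only to chemically invalid structures.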
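The Step 2 reward translates into a few lines of Python. The pIC50 and SA values are passed in as plain numbers (in the protocol they come from the pre-trained Random Forest and an SA-score implementation), and the penalty magnitude is a hypothetical choice. The SA term is written as (10 - SA)/9 so that, under the 1 = easy, 10 = hard convention, easier syntheses earn more reward. Invalid molecules simply receive the penalty, since their properties cannot be computed.

```python
import math

W1, W2 = 0.7, 0.3
INVALID_PENALTY = 1.0  # hypothetical magnitude; tune per project


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def reward(pic50_pred, sa_score, is_valid):
    """R(m) = w1 * sigmoid(pIC50 - 7) + w2 * (10 - SA) / 9, or -penalty if m is invalid."""
    if not is_valid:
        return -INVALID_PENALTY
    sa_term = (10.0 - sa_score) / 9.0  # SA 1 (easy) -> 1.0, SA 10 (hard) -> 0.0
    return W1 * sigmoid(pic50_pred - 7.0) + W2 * sa_term
```

Centering the sigmoid at pIC50 = 7 gives a smooth gradient of reward below the pIC50 > 8 goal instead of an all-or-nothing signal.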

Step 3: Training Loop (PPO Algorithm)

- Collection: The agent generates a batch of 512 molecules by sequentially selecting fragments.

- Evaluation: Each complete molecule m is evaluated by the reward function R(m).

- Optimization: The policy network is updated using the PPO clipped objective function to maximize the expected reward. Training runs for 500 epochs.
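The PPO clipped objective from Step 3 can be evaluated for a batch as shown. This is only the surrogate-loss arithmetic: `ratios` and `advantages` are assumed to be computed elsewhere (from the new and old policy probabilities of each fragment choice, and from the rewards minus a baseline), and `eps=0.2` is a commonly used clipping value rather than one specified by the protocol.

```python
def ppo_clipped_objective(ratios, advantages, eps=0.2):
    """Mean of min(r * A, clip(r, 1 - eps, 1 + eps) * A) over a batch.

    ratios[i] = pi_theta(a_i | s_i) / pi_theta_old(a_i | s_i); advantages[i] is the
    estimated advantage. The policy is updated to maximise this quantity
    (equivalently, to minimise its negative as a loss).
    """
    total = 0.0
    for r, a in zip(ratios, advantages):
        clipped_r = min(max(r, 1.0 - eps), 1.0 + eps)
        total += min(r * a, clipped_r * a)
    return total / len(ratios)
```

Clipping removes the incentive to push the probability ratio outside \( [1-\epsilon, 1+\epsilon] \), which keeps each update close to the policy that generated the batch of 512 molecules.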

Step 4: Post-Generation Analysis

- Filtering: Remove duplicates and molecules with reactive functional groups.

- Pareto Analysis: Identify the non-dominated set of molecules on the 2D plane of pIC50 vs. SA Score.

- Validation: Select top Pareto-optimal molecules for in silico docking and synthesis feasibility assessment.
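The Pareto analysis in Step 4 reduces to a non-dominated filter over (pIC50, SA) pairs, maximizing pIC50 and minimizing SA. This quadratic-time sketch is adequate for a few thousand molecules; libraries such as PyGMO or Platypus (listed in the toolkit table below) provide non-dominated sorting routines for larger sets.

```python
def pareto_front(molecules):
    """Return molecules not dominated on (maximise pIC50, minimise SA score).

    Each item is a (name, pic50, sa_score) tuple. A molecule is dominated if another
    one is at least as good on both objectives and strictly better on at least one.
    """
    front = []
    for name, p, s in molecules:
        dominated = any(
            (p2 >= p and s2 <= s) and (p2 > p or s2 < s)
            for _, p2, s2 in molecules
        )
        if not dominated:
            front.append((name, p, s))
    return front
```

Every molecule on the front represents a different trade-off, so the final selection for docking still requires a project-level judgment about how much potency to trade for ease of synthesis.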

Visualizing the RL-Molecule Generation Pipeline

Title: RL Molecule Generation Feedback Loop

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Tools for Multi-Objective RL in Drug Design

| Category | Item / Software | Primary Function & Relevance |

|---|---|---|

| RL Frameworks | OpenAI Gym / ChemGym, TF-Agents, Stable-Baselines3 | Provides standardized environments and implementations of algorithms (PPO, DQN) for rapid prototyping. |

| Chemistry Toolkits | RDKit, OEChem (OpenEye) | Core library for cheminformatics: molecule manipulation, descriptor calculation, and validity checks. |

| Property Prediction | Pre-trained models (e.g., ChemBERTa), QSAR tools (e.g., Random Forest, XGBoost) | Predicts bioactivity (pIC50), toxicity, or ADMET properties to serve as reward components. |

| Synthetic Planning | RAscore, SAscore (RDKit), ASKCOS, AiZynthFinder | Evaluates and/or proposes synthetic routes, crucial for the "synthetic accessibility" objective. |

| Molecular Docking | AutoDock Vina, Glide, GOLD | Provides physics-based binding affinity estimates as a high-fidelity reward signal. |

| Multi-Objective Optimization | PyGMO, Platypus, custom Pareto-front analysis scripts | Analyzes and selects output molecules balancing trade-offs between objectives. |

| Visualization | Matplotlib, Seaborn, Plotly, t-SNE/UMAP | Creates plots of chemical space, Pareto fronts, and training progress. |

Advanced Strategies & Future Outlook

Current research focuses on improving sample efficiency, handling sparse rewards, and integrating human feedback. Techniques like curriculum learning, inverse reinforcement learning to infer rewards from ideal molecules, and hierarchical RL for scaffold-first generation are gaining traction. The integration of large language models (LLMs) trained on chemical knowledge as policy networks presents a promising frontier for capturing nuanced chemical heuristics and rules within the multi-objective optimization framework.

Genetic Algorithms and Evolutionary Strategies in Molecular Optimization

This whitepaper, framed within a broader thesis on artificial intelligence (AI) for de novo drug design, explores the application of genetic algorithms (GAs) and evolutionary strategies (ES) to the optimization of molecular structures. The core premise is that evolutionary computation provides a powerful, biologically-inspired framework for navigating the vast chemical space to discover novel compounds with tailored properties. This aligns with the thesis's overarching goal: to establish principled, AI-first methodologies for generating viable drug candidates from scratch, thereby accelerating early-stage discovery.

Foundational Principles: From Biology to Algorithm

Both GAs and ES belong to the broader class of evolutionary algorithms (EAs), which simulate natural selection to solve complex optimization problems.

Genetic Algorithms (GAs) operate on a population of candidate solutions (e.g., molecular graphs or fingerprints). Each candidate is encoded as a chromosome (string of numbers/bits). Core operators include:

- Selection: Fitter individuals (based on a fitness function, e.g., binding affinity score) are chosen to reproduce.

- Crossover (Recombination): Pairs of parent chromosomes exchange segments to produce offspring.

- Mutation: Random alterations are introduced to maintain genetic diversity.

Evolutionary Strategies (ES) traditionally focus on continuous parameter optimization (e.g., real-valued vectors representing molecular properties or force field parameters). Modern ES, such as the Covariance Matrix Adaptation ES (CMA-ES), are known for their robustness on rugged, ill-conditioned, and non-separable landscapes of moderate dimensionality. A key distinction is the self-adaptation of strategy parameters (e.g., mutation step size) alongside the solution.
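Step-size self-adaptation can be illustrated with a simple (1, λ)-ES rather than full CMA-ES, which additionally adapts a covariance matrix. In this sketch each offspring carries its own mutated step size, and that step size survives only if its offspring is selected. The sphere function is a stand-in for a continuous molecular objective (for example, a property predicted from latent coordinates), and the learning rate τ = 1/√n is a conventional default.

```python
import math
import random


def self_adaptive_es(f, x0, sigma0=1.0, lam=10, generations=300, seed=0):
    """(1, lambda)-ES with log-normal self-adaptation of a single step size (minimises f).

    Each offspring first mutates its own step size, sigma' = sigma * exp(tau * N(0, 1)),
    then its position, x' = x + sigma' * N(0, I). The best offspring becomes the
    next parent together with the step size that produced it.
    """
    rng = random.Random(seed)
    tau = 1.0 / math.sqrt(len(x0))
    parent, sigma = list(x0), sigma0
    best, best_f = list(x0), f(x0)
    for _ in range(generations):
        offspring = []
        for _ in range(lam):
            s = sigma * math.exp(tau * rng.gauss(0.0, 1.0))
            x = [xi + s * rng.gauss(0.0, 1.0) for xi in parent]
            offspring.append((f(x), x, s))
        fx, parent, sigma = min(offspring, key=lambda t: t[0])  # comma selection
        if fx < best_f:
            best, best_f = parent, fx
    return best, best_f


def sphere(x):
    return sum(v * v for v in x)
```

Comma selection discards the parent every generation, which is what lets a step size that has become too large or too small be replaced; the best-so-far solution is tracked separately.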

In molecular optimization, the fitness landscape is the multidimensional space defined by chemical structure and its associated biological or physicochemical properties.

Core Methodologies & Experimental Protocols

Molecular Representation & Encoding

The choice of encoding dictates the applicable genetic operators.

| Encoding Scheme | Description | Applicable Operators | Advantages | Limitations |

|---|---|---|---|---|

| String-Based (SMILES/SELFIES) | Linear string representation of molecular structure. | String crossover, point mutation, substring replacement. | Simple, compatible with NLP-based models. | High risk of generating invalid strings (mitigated by SELFIES). |

| Graph-Based | Direct representation of atoms (nodes) and bonds (edges). | Graph crossover (subgraph exchange), node/edge mutation. | Intuitively represents molecular topology. | Computationally more complex; requires specialized operators. |

| Fragment-Based | Molecule as a combination of predefined chemical building blocks. | Fragment crossover, fragment addition/deletion. | Ensures synthetic feasibility and drug-likeness. | Limited to chemical space defined by fragment library. |

| Real-Valued Vector | Vector representing continuous properties (e.g., descriptors, latent space coordinates). | Arithmetic crossover, Gaussian mutation. | Enables smooth optimization of properties; ideal for hybrid AI models. | Not directly interpretable as a structure without a decoder. |
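The fragment-based operators in the table can be sketched on chromosomes represented as lists of fragment SMILES. The vocabulary is hypothetical, and the sketch deliberately ignores attachment points: joining the fragments into a connected, valid molecule would be handled by a separate assembly and sanitization step.

```python
import random

# Hypothetical fragment vocabulary; a real one might come from BRICS
# decomposition or a commercial fragment library.
FRAGMENTS = ["c1ccccc1", "C(=O)N", "N1CCOCC1", "c1ccncc1", "C(F)(F)F", "OC"]


def one_point_crossover(parent_a, parent_b, rng):
    """Swap the tails of two fragment-list chromosomes (each of length >= 2) at random cut points."""
    cut_a = rng.randint(1, len(parent_a) - 1)
    cut_b = rng.randint(1, len(parent_b) - 1)
    child_1 = parent_a[:cut_a] + parent_b[cut_b:]
    child_2 = parent_b[:cut_b] + parent_a[cut_a:]
    return child_1, child_2


def point_mutation(chromosome, rng, rate=0.2):
    """Replace each fragment by a random vocabulary fragment with probability `rate`."""
    return [rng.choice(FRAGMENTS) if rng.random() < rate else frag for frag in chromosome]
```

Because offspring only ever contain library fragments, this encoding trades reach in chemical space for building blocks that are known to be purchasable or easy to make, as the table notes.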

Protocol 3.1.1: Graph-Based Crossover for Molecules

- Input: Two parent molecular graphs, G1 and G2.

- Fragment Identification: Identify a common substructure or a valid cutting set of bonds in each parent using a maximum common subgraph (MCS) algorithm or random valid cut.

- Exchange: Swap non-common fragments between the two parents at the cut points.

- Validity Check & Sanitization: Ensure the resulting offspring graphs are chemically valid (e.g., correct valences). Apply sanitization rules if needed.

- Output: Two new offspring molecular graphs.
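The validity check in this protocol can be approximated with a valence count on a toy graph. This is a deliberately simplified stand-in for a toolkit sanitization routine (such as RDKit's `Chem.SanitizeMol`): it assumes neutral atoms, treats unused valence as implicit hydrogens, and ignores aromaticity and hypervalent sulfur or phosphorus.

```python
# Simplified maximum valences for neutral atoms (an assumption for illustration).
MAX_VALENCE = {"C": 4, "N": 3, "O": 2, "F": 1, "Cl": 1, "S": 2}


def valences_ok(atoms, bonds):
    """Check that no atom in an offspring graph exceeds its maximum valence.

    atoms: list of element symbols indexed by atom id.
    bonds: list of (i, j, order) tuples with order 1, 2 or 3.
    """
    used = [0] * len(atoms)
    for i, j, order in bonds:
        used[i] += order
        used[j] += order
    return all(used[k] <= MAX_VALENCE[sym] for k, sym in enumerate(atoms))
```

Offspring that fail this kind of check are typically either repaired (for example, by lowering a bond order) or discarded before fitness evaluation.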

Fitness Evaluation: The Selection Pressure

The fitness function is the ultimate guide for evolution. In drug design, it is typically a multi-objective problem.

Protocol 3.2.1: Multi-Objective Fitness Evaluation for Lead Optimization

- Property Calculation: For each candidate molecule, compute a set of key properties:

  - Potency: Predicted pIC50 or ΔG (binding free energy) from a QSAR model or molecular docking simulation.

  - Selectivity: Score against off-target panels (e.g., using similarity or docking).

  - ADMET: Predictions for solubility (LogS), permeability (Caco-2), metabolic stability and CYP450 inhibition (drug-drug interaction risk), and toxicity (e.g., hERG score).

- Normalization: Scale each property value to a [0, 1] range based on predefined desirable thresholds.

- Aggregation: Apply a scalarization function (e.g., weighted sum) or a Pareto-ranking algorithm (e.g., NSGA-II) to combine objectives into a single fitness score or a non-dominated ranking.

  - Weighted Sum Example: Fitness = w₁·Potency + w₂·Selectivity + w₃·Solubility - w₄·Toxicity.

- Selection: Use fitness scores to perform tournament selection or roulette wheel selection to choose parents for the next generation.
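Normalization, weighted-sum aggregation, and tournament selection can be sketched together. The weights, property names, and threshold values are hypothetical; `normalize` also handles lower-is-better properties when `worst` is greater than `best`.

```python
import random


def normalize(value, worst, best):
    """Linearly map a property onto [0, 1] between project-defined worst/best thresholds."""
    t = (value - worst) / (best - worst)
    return min(1.0, max(0.0, t))


def fitness(props, w=(0.4, 0.2, 0.2, 0.2)):
    """Weighted-sum fitness; all four inputs are assumed already normalized to [0, 1]."""
    return (
        w[0] * props["potency"]
        + w[1] * props["selectivity"]
        + w[2] * props["solubility"]
        - w[3] * props["toxicity"]
    )


def tournament_select(population, fitnesses, k=3, rng=random):
    """Pick k distinct individuals at random and return the fittest of them."""
    contenders = rng.sample(range(len(population)), k)
    return population[max(contenders, key=lambda i: fitnesses[i])]
```

The tournament size `k` controls selection pressure: larger tournaments favour the current best molecules more strongly, at the cost of population diversity.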

Hybrid AI-Evolutionary Workflows

Modern implementations often integrate EAs with deep learning models.

- VAE + GA: A Variational Autoencoder (VAE) learns a continuous latent space from molecules. The GA operates directly in this latent space, evolving latent vectors, and the VAE decoder converts high-fitness vectors back into molecules.

- Policy Gradient + ES: Evolutionary strategies can optimize the parameters of a deep reinforcement learning (RL) policy network that generates molecules, providing a robust alternative to gradient-based policy optimization.
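The VAE + GA combination can be sketched as a real-valued GA over latent vectors, using the arithmetic crossover and Gaussian mutation listed for real-valued encodings. Here `score` is a hypothetical stand-in for decoding a vector with the VAE and scoring the resulting molecule; population size, generation count, and mutation width are illustrative.

```python
import random


def latent_ga(score, dim, pop_size=30, generations=60, sigma=0.1, seed=0):
    """Minimal real-valued GA over latent vectors (higher score is better).

    Uses elitism, size-3 tournament selection, arithmetic crossover and
    Gaussian mutation; score(z) is assumed to wrap decode-then-predict.
    """
    rng = random.Random(seed)
    pop = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(pop_size)]
    for _ in range(generations):
        scored = sorted(pop, key=score, reverse=True)
        next_pop = scored[:2]  # elitism: keep the two best vectors unchanged
        while len(next_pop) < pop_size:
            p1 = max(rng.sample(scored, 3), key=score)
            p2 = max(rng.sample(scored, 3), key=score)
            a = rng.random()  # arithmetic crossover weight
            child = [a * x + (1 - a) * y + rng.gauss(0.0, sigma) for x, y in zip(p1, p2)]
            next_pop.append(child)
        pop = next_pop
    return max(pop, key=score)
```

Sampling the initial population from \( \mathcal{N}(0, I) \) matches the usual VAE prior, so early candidates decode from regions the model has seen during training.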

Quantitative Performance Data

Recent benchmark studies highlight the performance of evolutionary approaches against other generative models.

Table 4.1: Benchmark Performance on GuacaMol and MOSES Datasets

| Algorithm | Type | Novelty (GuacaMol) ↑ | Diversity (MOSES) ↑ | Fitness (Drug-likeness) ↑ | Success Rate (Targeted) ↑ |

|---|---|---|---|---|---|

| Graph GA (FG) | Evolutionary | 0.94 | 0.83 | 0.89 | 0.73 |

| SMILES GA | Evolutionary | 0.91 | 0.85 | 0.82 | 0.65 |

| JT-VAE | Deep Generative | 0.97 | 0.86 | 0.92 | 0.58 |

| REINVENT | RL | 0.95 | 0.84 | 0.95 | 0.89 |

| CMA-ES (Latent) | Evolutionary | 0.93 | 0.82 | 0.88 | 0.81 |

↑ Higher is better. Data synthesized from recent literature (2023-2024). Success Rate refers to optimization of a specific target property.

Table 4.2: Case Study: Optimization of a Kinase Inhibitor Lead

| Generation | Avg. pIC50 (Predicted) | Avg. QED (Drug-likeness) | Synthetic Accessibility Score (SA) | Top Candidate pIC50 |

|---|---|---|---|---|

| Initial Population | 6.2 | 0.72 | 3.5 | 7.1 |

| Generation 50 | 7.8 | 0.85 | 2.8 | 8.9 |

| Generation 100 | 8.5 | 0.88 | 2.1 | 10.2 |

Results from a hypothetical fragment-based GA run over 100 generations. SA score: lower is easier to synthesize (scale 1-10).

Visualization of Workflows & Relationships

Title: Standard Genetic Algorithm Workflow for Molecular Optimization

Title: Hybrid AI-Evolutionary Molecular Design Architecture

The Scientist's Toolkit: Research Reagent Solutions

Table 6.1: Essential Resources for Implementing Molecular GAs

| Item / Reagent Solution | Function & Explanation | Example / Provider |

|---|---|---|

| Cheminformatics Library | Core toolkit for manipulating molecular structures, calculating descriptors, and handling file formats. | RDKit (Open Source), ChemAxon, Open Babel. |

| Docking Software | Provides a key fitness function component by predicting protein-ligand binding poses and scores. | AutoDock Vina, Glide (Schrödinger), GOLD. |

| ADMET Prediction Suite | Calculates critical pharmacokinetic and toxicity properties for fitness evaluation. | pkCSM, ADMETlab, QikProp (Schrödinger). |

| Chemical Fragment Library | A curated set of building blocks for fragment-based encoding and crossover operations. | Enamine REAL Fragments, Otava Fragments. |

| High-Performance Computing (HPC) Cluster | Parallelizes fitness evaluation (e.g., thousands of docking runs) across generations. | Local Slurm cluster, AWS/GCP cloud instances. |

| Evolutionary Algorithm Framework | Provides robust, optimized implementations of GA/ES operators and multi-objective algorithms. | DEAP (Python), Jenetics (Java), MOEA Framework. |

| Benchmarking Platform | Standardized datasets and metrics to evaluate and compare generative model performance. | GuacaMol, MOSES, TDC (Therapeutics Data Commons). |

This whitepaper is framed within a broader thesis on AI for de novo drug design, which posits that the next paradigm shift in medicinal chemistry will be driven by generative models that operate under explicit, multi-objective constraints. The core principle is the transition from retrospective analysis of chemical libraries to the prospective, on-demand generation of novel molecular entities conditioned on specific target engagement and predefined property profiles. This document serves as an in-depth technical guide to the methodologies, validation protocols, and practical tools enabling this transition.

Foundational Architectures & Core Methodologies

Current approaches for conditional molecular generation integrate deep generative models with explicit constraint-handling mechanisms.

2.1 Model Architectures:

- Conditional Variational Autoencoders (CVAE): Extend VAEs by feeding condition labels (e.g., target protein ID, IC50 range) into both the encoder and the decoder. The model thereby learns a condition-aware latent space from which molecules can be decoded for a requested condition.

- Conditional Generative Adversarial Networks (cGAN): Use condition information as an additional input to both the generator (to produce compliant molecules) and the discriminator (to assess fidelity to both the data distribution and the conditions).

- Transformer-based Language Models: Treat SMILES or SELFIES strings as sequences and use conditional tokens or control codes to steer the generation process towards desired properties.

- Graph-based Generative Models: Operate directly on molecular graphs, where conditions are integrated as global features or used to bias the edge/node addition process during stepwise generation.
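The CVAE bullet above can be made concrete with a forward pass that shows where the condition enters. This minimal numpy sketch concatenates the condition with the input in the encoder and with the latent vector in the decoder, then computes a reconstruction-plus-KL loss. It rests on several assumptions, all for brevity. The weights are untrained and randomly initialized. Molecules are represented as toy binary fingerprint vectors rather than SMILES. The condition is a one-hot activity bin. Fingerprints cannot be decoded back into structures, so a real model would decode SMILES, SELFIES, or graphs instead.

```python
import numpy as np

rng = np.random.default_rng(0)
X_DIM, C_DIM, Z_DIM, H_DIM = 64, 3, 8, 32  # fingerprint bits, condition, latent, hidden sizes

def init(shape):
    return rng.normal(0.0, 0.1, size=shape)

# Untrained weights; training would minimize cvae_loss with an autodiff framework.
W_enc = init((X_DIM + C_DIM, H_DIM))
W_mu, W_logvar = init((H_DIM, Z_DIM)), init((H_DIM, Z_DIM))
W_dec1, W_dec2 = init((Z_DIM + C_DIM, H_DIM)), init((H_DIM, X_DIM))

def encode(x, c):
    h = np.tanh(np.concatenate([x, c], axis=-1) @ W_enc)  # condition enters the encoder
    return h @ W_mu, h @ W_logvar

def decode(z, c):
    h = np.tanh(np.concatenate([z, c], axis=-1) @ W_dec1)  # ...and the decoder
    return 1.0 / (1.0 + np.exp(-(h @ W_dec2)))  # per-bit probabilities

def cvae_loss(x, c):
    mu, logvar = encode(x, c)
    eps = rng.standard_normal(mu.shape)
    z = mu + np.exp(0.5 * logvar) * eps  # reparameterization trick
    x_hat = np.clip(decode(z, c), 1e-7, 1 - 1e-7)
    recon = -np.sum(x * np.log(x_hat) + (1 - x) * np.log(1 - x_hat), axis=-1)  # binary cross-entropy
    kl = -0.5 * np.sum(1 + logvar - mu**2 - np.exp(logvar), axis=-1)  # KL(q(z|x,c) || N(0, I))
    return float(np.mean(recon + kl))

x = (rng.random((4, X_DIM)) < 0.1).astype(float)  # toy binary fingerprints
c = np.eye(C_DIM)[[0, 1, 2, 0]]                    # one-hot condition per molecule
print(cvae_loss(x, c))

# Conditional generation: sample z from the prior, decode under the requested condition.
z = rng.standard_normal((1, Z_DIM))
probs = decode(z, np.eye(C_DIM)[[2]])
```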

2.2 Conditioning Strategies:

- Direct Latent Space Optimization: Uses gradient-based or evolutionary algorithms to search the latent space of a pre-trained generative model in directions that optimize specific property predictors (e.g., QSAR models).

- Reinforcement Learning (RL) Fine-tuning: A pre-trained generative model is fine-tuned with RL rewards that combine likelihood (to maintain realism) and scores from property predictors (to meet constraints).

- Guided Diffusion Models: The denoising process in diffusion models is guided by the gradients of auxiliary property predictors, steering generation towards regions of chemical space that satisfy constraints.
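For RL fine-tuning, one widely used way to combine prior likelihood with property scores is the augmented-likelihood objective introduced with REINVENT. The prior's log-likelihood of a sequence is shifted by σ times its score, and the agent is trained to match that target. The sketch below computes only the loss value for a batch of already-sampled molecules. The gradients with respect to the agent's parameters would come from an autodiff framework. The `sigma` value and the input numbers are illustrative assumptions.

```python
def augmented_likelihood(prior_logp, score, sigma):
    """REINVENT-style target: log P_aug = log P_prior + sigma * score (score in [0, 1])."""
    return prior_logp + sigma * score

def reinvent_loss(agent_logps, prior_logps, scores, sigma=60.0):
    """Mean squared gap between the agent's log-likelihood and the augmented likelihood.

    The prior term keeps sampled molecules realistic; the score term pulls the
    agent toward molecules that satisfy the property predictors.
    """
    losses = [
        (augmented_likelihood(p, s, sigma) - a) ** 2
        for a, p, s in zip(agent_logps, prior_logps, scores)
    ]
    return sum(losses) / len(losses)

# Two sampled SMILES: the agent currently assigns them the same log-likelihood as the prior.
print(reinvent_loss([-30.0, -25.0], [-30.0, -25.0], [0.0, 0.5]))  # → 450.0
```

The first molecule scores 0, so its target equals its prior likelihood and it contributes no loss. The second molecule's target is raised by 60 × 0.5 = 30 nats, so the agent is pushed to make it more likely.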

Experimental Protocols for Model Training & Validation

A robust experimental pipeline is essential for developing and benchmarking conditional generative models.

Protocol 3.1: Model Training with Explicit Property Conditioning

- Data Curation: Assemble a dataset of molecules with associated experimental properties (e.g., pIC50, LogP, solubility). Standardize structures and normalize property values.

- Condition Encoding: Encode continuous properties either as discrete bins (one-hot vectors) or as scalar values appended to the latent representation. For target-based conditioning, use learned embeddings of protein families or fingerprint-style protein descriptors.

- Model Training: Train a CVAE or cGAN using a combined loss: reconstruction loss (e.g., cross-entropy over SMILES tokens) + KL divergence between the approximate posterior and the prior (for a CVAE) + condition prediction loss (e.g., MSE for regressed properties) + adversarial loss (for a cGAN).

- Validation: On a held-out test set, measure: (a) Reconstruction accuracy; (b) Ability to generate valid/novel molecules; (c) Correlation between input condition and predicted property of generated molecules.
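Two pieces of this protocol lend themselves to a short sketch: binning a continuous property into a one-hot condition vector, and validation check (c), the correlation between requested and predicted property values. The pIC50 bin edges below are hypothetical. The predicted values would in practice come from an independent QSAR model applied to the generated molecules; the numbers in the usage lines are toy inputs.

```python
import math
from bisect import bisect_right

PIC50_BIN_EDGES = [5.0, 6.0, 7.0, 8.0]  # hypothetical edges -> 5 bins

def bin_index(value, edges=PIC50_BIN_EDGES):
    # Values equal to an edge fall into the higher bin.
    return bisect_right(edges, value)

def one_hot(index, size=len(PIC50_BIN_EDGES) + 1):
    vec = [0.0] * size
    vec[index] = 1.0
    return vec

def pearson(xs, ys):
    """Pearson correlation between requested conditions and predicted properties."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

condition = one_hot(bin_index(6.5))  # → [0.0, 0.0, 1.0, 0.0, 0.0]
requested = [5.5, 6.5, 7.5, 8.5]     # toy requested pIC50 values
predicted = [5.8, 6.4, 7.9, 8.2]     # toy QSAR predictions for generated molecules
print(pearson(requested, predicted))
```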

Protocol 3.2: Benchmarking with the GuacaMol Framework

- Task Selection: Select standard goal-directed benchmark tasks (e.g., Medicinal Chemistry SMARTS, Similarity to a Known Active).

- Generation: For each task, generate 10,000 molecules using the conditioned model.

- Evaluation: Calculate the success rate (fraction of generated molecules satisfying all constraints) and the novelty (fraction not present in the training set). Compare against baselines (e.g., SMILES LSTM, REINVENT).
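The evaluation step can be expressed as a few set operations, shown here as a minimal sketch. It assumes several things. The generated strings have already been canonicalized (e.g., with RDKit), with `None` marking strings that failed to parse. `satisfies_constraints` is a hypothetical predicate; in practice it would be a SMARTS or property check. Success rate uses all generated molecules as the denominator, following the definition above. Novelty is computed over unique valid molecules, as in common MOSES-style usage.

```python
def generation_metrics(generated, training_set, satisfies_constraints):
    """Validity, uniqueness, novelty and success rate for a generated library."""
    valid = [s for s in generated if s is not None]
    unique = set(valid)
    novel = unique - set(training_set)
    successes = [s for s in valid if satisfies_constraints(s)]
    n = len(generated)
    return {
        "validity": len(valid) / n,
        "uniqueness": len(unique) / len(valid) if valid else 0.0,
        "novelty": len(novel) / len(unique) if unique else 0.0,
        "success_rate": len(successes) / n,  # fraction of all generated molecules
    }

# Toy example: a string test stands in for a real SMARTS match ("contains nitrogen").
metrics = generation_metrics(
    ["CCO", "CCO", "c1ccccc1", None, "CCN"],
    training_set=["CCO"],
    satisfies_constraints=lambda smi: "N" in smi,
)
print(metrics)
```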

Protocol 3.3: In Silico & Experimental Funnel Validation

- Conditional Generation: Generate a candidate library (e.g., 50,000 molecules) conditioned on a specific target (e.g., kinase hinge-binder profile) and properties (cLogP < 3, TPSA 60-100 Ų).

- Virtual Screening: Dock a prioritized subset of candidates (e.g., 1,000) into the target's active site and select the top 100 by docking score.

- ADMET Prediction: Filter these 100 using pre-trained models for CYP inhibition, hERG liability, and solubility.

- Synthetic Accessibility: Score the remaining molecules using SAscore or a similar metric.

- Experimental Testing: Synthesize and assay the final 20-30 compounds for target activity and key properties.
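The funnel above is a chain of filters and rankings. The sketch below assumes each candidate's properties have already been computed upstream by docking, ADMET, and SAscore tools. Several details are hypothetical. The `Candidate` fields are invented for illustration. A single hERG flag stands in for the full ADMET panel. The SA cutoff of 4.0 is an illustrative choice. The library is assumed to arrive already ranked by the generator's priority. Docking scores are treated as Vina-style energies, where more negative is better.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    name: str
    clogp: float
    tpsa: float         # Å^2
    dock_score: float   # Vina-style kcal/mol, more negative = better
    herg_risk: bool     # hypothetical single ADMET flag
    sa_score: float     # SAscore, 1 (easy) to 10 (hard)

def run_funnel(library, n_dock=1000, n_keep=100, max_sa=4.0, n_final=30):
    # Property window from the protocol: cLogP < 3 and TPSA 60-100 Å^2.
    stage = [m for m in library if m.clogp < 3 and 60 <= m.tpsa <= 100]
    # Virtual screening: dock the first n_dock, keep the best n_keep by docking score.
    docked = sorted(stage[:n_dock], key=lambda m: m.dock_score)[:n_keep]
    # ADMET: drop predicted hERG liabilities.
    admet = [m for m in docked if not m.herg_risk]
    # Synthetic accessibility: keep easy-to-make molecules, best docking scores first.
    synth = sorted((m for m in admet if m.sa_score <= max_sa), key=lambda m: m.dock_score)
    return synth[:n_final]

library = [
    Candidate("A", 2.0, 80, -9.0, False, 3.0),
    Candidate("B", 4.0, 80, -10.0, False, 2.0),  # fails cLogP
    Candidate("C", 2.5, 70, -8.0, True, 2.5),    # fails hERG
    Candidate("D", 1.0, 90, -7.5, False, 6.0),   # fails SA
    Candidate("E", 2.0, 65, -9.5, False, 3.5),
]
print([m.name for m in run_funnel(library)])  # → ['E', 'A']
```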

Data & Performance Benchmarks

Quantitative performance of leading conditional generation models on public benchmarks.

Table 1: Performance on GuacaMol Benchmark Tasks

| Model Architecture | Medicinal Chemistry SMARTS (Success Rate, %) | Similarity to Celecoxib (Success Rate, %) | Median Score (20 tasks, 0-1) | Key Conditioning Mechanism |
|---|---|---|---|---|
| SMILES LSTM (cGAN) | 78.3 | 91.5 | 0.839 | Property labels in discriminator |
| Graph MCTS (RL) | 95.1 | 99.8 | 0.987 | Reward shaping with property predictors |
| MolGPT (Transformer) | 92.6 | 98.4 | 0.956 | Control tokens prepended to SMILES |
| Conditional Diffusion | 97.8 | 99.9 | 0.991 | Guided denoising with property gradients |

Table 2: Multi-Objective Optimization Success (MOSES Dataset)

| Model | Success Rate, 3+ Properties (%) | Novelty (%) | Diversity (IntDiv) | Validity (%) | Key Properties Optimized |
|---|---|---|---|---|---|
| REINVENT 2.0 | 65.2 | 85.7 | 0.83 | 99.5 | QED, SA, LogP, Target Score |
| CVAE + BO | 58.9 | 99.2 | 0.88 | 94.1 | pIC50, Synthesizability, LogP |
| Hierarchical GAN | 71.4 | 92.3 | 0.86 | 98.8 | Scaffold type, Pharmacophore |
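The Diversity (IntDiv) column follows the MOSES internal-diversity metric: IntDiv_p = 1 − ((1/|G|²) Σ T(m₁, m₂)^p)^(1/p), summed over all ordered pairs of generated molecules, where T is Tanimoto similarity. The minimal sketch below implements that formula. It assumes fingerprints are given as Python sets of on-bit indices. In practice these would be Morgan fingerprints computed with RDKit, and the MOSES package provides its own vectorized implementation.

```python
from itertools import product

def tanimoto(a, b):
    """Tanimoto similarity between two fingerprints stored as sets of on-bit indices."""
    union = len(a | b)
    return len(a & b) / union if union else 1.0

def internal_diversity(fps, p=1):
    """IntDiv_p = 1 - (mean of T^p over all |G|^2 ordered pairs, self-pairs included)^(1/p)."""
    n = len(fps)
    mean_sim_p = sum(tanimoto(a, b) ** p for a, b in product(fps, repeat=2)) / n**2
    return 1 - mean_sim_p ** (1 / p)

print(internal_diversity([{1, 2}, {3, 4}]))  # → 0.5 (two molecules with no shared bits)
```

Because self-pairs always have similarity 1, even a set of completely dissimilar molecules gives IntDiv below 1 for small |G|. The values in the table therefore depend on library size as well as chemistry.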

Visualizing Workflows & Pathways

Title: Conditional Molecular Design Funnel

Title: Conditional VAE Architecture for Molecule Generation

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Tools & Resources for Conditional Generation Research

| Item / Resource | Function / Purpose | Example / Format |
|---|---|---|
| ChEMBL / PubChem | Source of curated bioactivity data for training condition predictors (pIC50, etc.) | SQL database, API |
| RDKit | Open-source cheminformatics toolkit for molecule manipulation, descriptor calculation, and fingerprinting. | Python library |
| PyTorch / TensorFlow | Deep learning frameworks for implementing and training generative models. | Python library |
| GuacaMol | Benchmark suite for assessing generative model performance on drug-like objectives. | Python package |
| MOSES | Benchmarking platform with standardized data splits, metrics, and baselines. | Python package |
| AutoDock Vina / Gnina | Molecular docking software for virtual screening of generated libraries against targets. | Command-line tool |
| SAscore | Synthetic Accessibility score to prioritize readily synthesizable molecules. | Python implementation |
| ADMET Predictors | Pre-trained models (e.g., from ADMETlab) to filter compounds for key pharmacokinetic properties. | Web server, API |
| REINVENT / MolDQN | Reference implementations of RL-based molecular optimization frameworks. | Open-source code |
| Diffusion Models for Molecules | Codebases for graph-based or SELFIES-based diffusion models (e.g., GeoDiff, DiG). | Research code (GitHub) |

The de novo design of molecular entities using artificial intelligence (AI) promises to radically accelerate drug discovery. However, a persistent gap exists between the in silico generation of putative bioactive compounds and their in vitro validation. This gap is largely defined by synthesizability: the practical feasibility of constructing a molecule from available reagents and methods within a reasonable time and cost. Framed within the broader thesis on AI-driven de novo drug design principles, this whitepaper posits that integrating forward-looking synthesizability prediction with backward-planning retrosynthesis analysis forms a critical feedback loop. This integration is essential for grounding AI-generated molecules in chemical reality, thereby increasing the throughput and success rate of real-world drug development.

Core Concepts & Tools

Synthesizability Prediction (Forward Prediction)

This involves scoring a given molecular structure based on the estimated ease or likelihood of its synthesis. Metrics are often derived from:

- Rule-based systems: Applying chemical knowledge (e.g., complexity, presence of unstable functional groups).

- Data-driven models: Trained on reaction databases (e.g., USPTO, Reaxys) to estimate synthetic accessibility (SA) scores or the number of required synthetic steps.

Computer-Aided Retrosynthesis (Backward Planning)

These tools deconstruct a target molecule into simpler, commercially available building blocks via a series of plausible reaction steps. Modern tools are predominantly AI-driven:

- Template-based models: Apply known reaction templates from databases.

- Template-free models: Use sequence-to-sequence or graph-to-graph neural networks to predict disconnections without pre-defined rules.
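Whichever model proposes the disconnections, the planner must still search for a route that ends entirely in purchasable building blocks. This is an AND-OR problem: any one disconnection of a molecule may work (OR), but every precursor of the chosen disconnection must be solved (AND). The sketch below is a plain depth-limited depth-first search, not the Monte Carlo tree search used by tools such as AiZynthFinder. Its `DISCONNECTIONS` table and `STOCK` set are hypothetical stand-ins for template (or template-free) predictions and a commercial stock list. It returns the first route found, which is not necessarily the shortest.

```python
# Hypothetical single-step predictions: product -> alternative precursor sets.
DISCONNECTIONS = {
    "target": [("amine_A", "acid_B"), ("ester_C",)],
    "acid_B": [("bromide_D", "co2")],
    "ester_C": [("alcohol_E", "acid_F")],
}
STOCK = {"amine_A", "bromide_D", "co2", "alcohol_E"}  # hypothetical purchasable set

def find_route(molecule, max_depth, stock=STOCK, rules=DISCONNECTIONS):
    """Depth-limited AND-OR search; returns a list of (product, precursors) steps or None."""
    if molecule in stock:
        return []  # purchasable: no further steps needed
    if max_depth == 0:
        return None
    for precursors in rules.get(molecule, []):   # OR: try each disconnection in turn
        steps = [(molecule, precursors)]
        for p in precursors:                      # AND: every precursor must be solved
            sub = find_route(p, max_depth - 1, stock, rules)
            if sub is None:
                break
            steps.extend(sub)
        else:
            return steps  # all precursors solved
    return None

route = find_route("target", max_depth=6)
print(route)  # → [('target', ('amine_A', 'acid_B')), ('acid_B', ('bromide_D', 'co2'))]
```

The second disconnection of `target` fails because `acid_F` is neither in stock nor further disconnectable. Reducing `max_depth` to 1 leaves no route at all. This is the same success/failure signal the protocol below records per molecule.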

Quantitative Data & Performance Comparison

Table 1: Performance Metrics of Select Synthesizability Prediction Tools

| Tool Name | Type | Key Metric | Reported Value | Basis/Training Data |
|---|---|---|---|---|
| SAscore (RDKit) | Rule-based | Synthetic Accessibility score (1=easy, 10=hard) | Correlation ~0.7 with expert assessment | Fragment contribution & complexity penalty |
| SCScore | ML-based | Neural network score (1-5 scale) | Classifies >80% of simple vs. complex molecules correctly | ~12.5M reactions from Reaxys |
| RAscore | ML-based | Retrosynthetic accessibility score (0-1) | AUC >0.9 for classifying feasible molecules | ChEMBL molecules labeled by AiZynthFinder route search (USPTO-derived templates) |
| AiZynthFinder | Retrosynthesis | Top-1 route accuracy | 60-70% (within 3 steps from stock) | USPTO patented reactions |

Table 2: Performance Metrics of Select Retrosynthesis Planning Tools

| Tool Name | Approach | Solved Molecules (Benchmark) | Avg. Steps in Route | Key Strength |
|---|---|---|---|---|
| IBM RXN | Template-free (Transformer) | ~80% (USPTO-50k test) | 4.2 | Broad applicability |
| ASKCOS | Template-based & ML | ~85% (internal benchmark) | 5.1 | Integrates reaction condition prediction |
| MolCart | Graph-based MCTS | ~90% (40 molecule benchmark) | 3.8 | Efficient search strategy |
| Retro | Semi-template (Graph NN) | ~82% (USPTO-50k) | 4.0 | Good generalizability |

Detailed Experimental Protocol for Integrated Validation

This protocol outlines a method to validate the synergy between synthesizability prediction and retrosynthesis tools in a de novo design pipeline.

Objective: To assess whether pre-filtering AI-generated molecules with a synthesizability predictor increases the success rate of finding viable retrosynthetic pathways.

Materials: See "The Scientist's Toolkit" below.

Procedure:

1. Molecular Generation: Use a generative AI model (e.g., REINVENT, GENTRL) conditioned on a specific biological target to produce a library of 1,000 novel molecular structures (SMILES format).

2. Pre-filtering (Property & SA):

   - Apply standard drug-like property filters (e.g., Lipinski's Rule of Five, which includes MW ≤ 500 Da).

   - Calculate the Synthetic Accessibility score (SAscore) for each remaining molecule using RDKit.

   - Retain the 200 molecules with the lowest SAscore (most synthetically accessible).

3. Retrosynthesis Analysis:

   - Input each of the 200 pre-filtered molecules into a retrosynthesis planning tool (e.g., AiZynthFinder, configured with a relevant building block stock list).

   - Set search parameters: maximum search depth = 6, timeout = 60 seconds per molecule.

   - For each molecule, record: (a) Success (Yes/No): whether at least one route to commercial building blocks was found. (b) Route Length: number of steps in the shortest successful route.

4. Control Arm: Repeat Step 3 with 200 molecules randomly selected from the original 1,000 before SAscore filtering.

5. Data Analysis:

   - Calculate the route-finding success rate for both the SAscore-filtered set and the control set.

   - Compare the distribution of route lengths between the two sets using a statistical test (e.g., Mann-Whitney U test).

   - Perform manual chemist review on a subset of proposed routes for feasibility.
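The arm selection and the quantitative part of the data analysis above can be sketched in plain Python. The sketch selects the SAscore-filtered arm and a seeded random control arm, computes route-finding success rates, and calculates a Mann-Whitney U statistic with average ranks for ties. It assumes SAscores and per-molecule shortest route lengths (`None` when no route was found) have already been collected from RDKit and the retrosynthesis tool. Only solved molecules contribute route lengths to the U test. For p-values, SciPy's `scipy.stats.mannwhitneyu` is a practical alternative to this hand-rolled statistic.

```python
import random

def select_arms(original, property_filtered, sa_scores, n=200, seed=0):
    """SA arm: n lowest-SAscore molecules after property filters; control: random n from original."""
    sa_arm = sorted(property_filtered, key=lambda m: sa_scores[m])[:n]
    control = random.Random(seed).sample(original, n)
    return sa_arm, control

def success_rate(route_lengths):
    """route_lengths: shortest route length per molecule, or None if no route was found."""
    return sum(length is not None for length in route_lengths) / len(route_lengths)

def mann_whitney_u(xs, ys):
    """U statistic for xs relative to ys, assigning average ranks to tied values."""
    pooled = sorted((v, i) for i, v in enumerate(xs + ys))
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        j = i
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # 1-based average rank of the tie group
        for k in range(i, j + 1):
            ranks[pooled[k][1]] = avg_rank
        i = j + 1
    rank_sum_x = sum(ranks[: len(xs)])  # first len(xs) indices belong to xs
    return rank_sum_x - len(xs) * (len(xs) + 1) / 2

# Toy route lengths for solved molecules in each arm (illustrative inputs only).
sa_lengths, control_lengths = [2, 3, 3, 4], [3, 4, 5, 6, 6]
print(success_rate([2, None, 3, None]), mann_whitney_u(sa_lengths, control_lengths))
```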

Visualizations of Integrated Workflows

AI-Driven Design-Synthesis Feedback Loop

Iterative Retrosynthesis with Feasibility Check

The Scientist's Toolkit: Key Research Reagent Solutions

Table 3: Essential Tools & Materials for Integrated Synthesizability Research

| Item/Category | Specific Example/Tool | Function in the Workflow |

|---|---|---|

| Generative AI Model | REINVENT, GENTRL, DiffLinker | Generates novel molecular structures conditioned on target properties. |