Bayesian Optimization in Molecular Latent Space: Accelerating Drug Discovery with AI-Driven Design

Abstract

This article provides a comprehensive guide to Bayesian Optimization (BO) within molecular latent spaces for researchers and drug development professionals. It begins by establishing the foundational concepts of latent space representations and the BO framework for sample-efficient exploration. The core methodological section details how to construct and navigate these spaces for specific tasks like property optimization and de novo molecular generation. Practical guidance is provided for troubleshooting common issues and optimizing performance. Finally, the article reviews current validation benchmarks, comparative analyses against other optimization strategies, and the critical path toward experimental validation, and closes by assessing how this paradigm is changing computational molecular design.

Bayesian Optimization and Latent Space 101: The Core Concepts for Molecular Scientists

Optimizing molecules for desired properties (e.g., potency, solubility, synthesizability) by directly manipulating their chemical structure (e.g., SMILES string, molecular graph) is an intractable search problem. The chemical space of drug-like molecules is estimated to be between 10²³ and 10⁶⁰ compounds, making exhaustive enumeration impossible. Direct "generate-and-test" cycles are prohibitively expensive due to the high cost of physical synthesis and biological assay.

Table 1: Scale of the Molecular Search Problem

| Parameter | Value/Estimate | Implication |
|---|---|---|
| Size of drug-like chemical space (estimate) | 10²³ – 10⁶⁰ molecules | Exhaustive search is impossible. |
| Typical high-throughput screening (HTS) capacity | 10⁵ – 10⁶ compounds/screen | Even 10⁶ compounds cover at most ~10⁻¹⁵ % of a 10²³-molecule space. |
| Cost per compound (synthesis + assay) | $50 – $1000+ (wet lab) | Prohibitive for large-scale exploration. |
| Computational docking/virtual screening rate | 10² – 10⁵ compounds/day | Faster but limited by model accuracy. |
| Discrete steps in a typical molecular graph | Variable, combinatorial | Leads to a vast, non-convex, and noisy landscape. |

Core Challenges in Direct Optimization

The Combinatorial Explosion

A molecule is defined by discrete choices: atom types, bond types, connectivity, and 3D conformation. Minor modifications can lead to drastic, non-linear changes in properties (the "cliff" effect).

Non-Differentiability

Many molecular representations (e.g., graphs, SMILES) are discrete structures. Standard gradient-based optimization cannot be directly applied, as there is no continuous path from one molecule to another.

Expensive and Noisy Evaluation

The ultimate test requires physical molecules. Computational property predictors (QSAR models) introduce prediction error and bias, while wet-lab experiments are slow, costly, and subject to experimental noise.

Complex, Multifaceted Objectives

Drug optimization requires balancing multiple, often competing, properties (e.g., efficacy vs. toxicity vs. metabolic stability). This multi-objective landscape is rugged and poorly mapped.

Title: Combinatorial Choices Lead to Unpredictable Molecular Outcomes

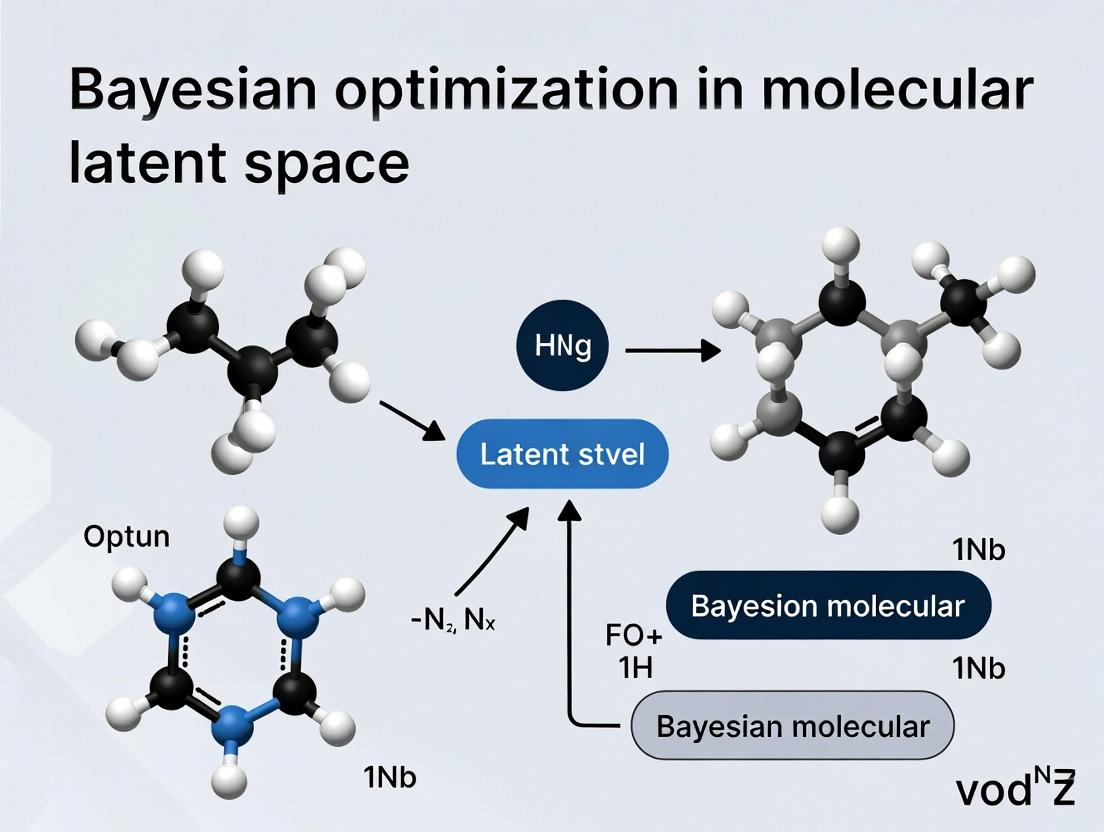

A Bayesian Optimization in Latent Space Framework

The intractability of direct optimization necessitates an indirect strategy. This is the core thesis: Bayesian Optimization (BO) in a continuous molecular latent space provides a feasible pathway. A generative model (e.g., Variational Autoencoder) learns to map discrete molecular structures to continuous latent vectors. BO then navigates this smooth, continuous space to find latent points that decode to molecules with optimized properties.

Protocol 1: Building a Conditional Generative Latent Model

Objective: Train a model to encode molecules and decode conditioned on properties.

Data Curation:

- Source a dataset (e.g., ChEMBL, ZINC) with molecular structures (SMILES) and associated experimental properties (e.g., pIC50, LogP).

- Preprocess: Standardize molecules, remove duplicates, handle missing data. Split data 80/10/10 for training/validation/test.

Model Architecture (Conditional VAE):

- Encoder: A graph neural network (GNN) or RNN that processes a molecular graph/SMILES into a mean (μ) and log-variance (logσ²) vector defining a Gaussian latent distribution (latent dimension 128).

- Conditioning: Concatenate the target property value (or vector) to the encoder input and to the latent vector before decoding.

- Decoder: An RNN (for SMILES) or GNN (for graphs) that reconstructs the input molecule from a latent sample z ~ N(μ, σ²) and the condition.

Training:

- Loss Function: L = L_reconstruction + β * L_KL, where L_KL is the Kullback-Leibler divergence between the encoder's Gaussian and a standard-normal prior. This term encourages a smooth, structured latent space.

- Optimizer: Adam with learning rate 1e-3. Train for 100-200 epochs, monitoring validation loss.
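The following minimal, dependency-free sketch makes the two loss terms concrete for a single molecule. It assumes that the decoder emits a softmax distribution over the vocabulary at each SMILES position and that the prior is the standard normal N(0, I), for which the KL term has the closed form shown. The function names are illustrative. A real implementation would compute the same quantities on batched PyTorch or TensorFlow tensors.

```python
import math


def gaussian_kl(mu, log_var):
    """Closed-form KL( N(mu, diag(exp(log_var))) || N(0, I) ), summed over latent dims."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var))


def reconstruction_nll(step_probs, target_ids):
    """Cross-entropy reconstruction term: negative log-likelihood of the true tokens.

    step_probs[t] is the decoder's probability vector over the vocabulary at position t;
    target_ids[t] is the index of the correct token at position t.
    """
    return -sum(math.log(probs[t]) for probs, t in zip(step_probs, target_ids))


def vae_loss(step_probs, target_ids, mu, log_var, beta=1.0):
    """L = L_reconstruction + beta * L_KL for one molecule."""
    return reconstruction_nll(step_probs, target_ids) + beta * gaussian_kl(mu, log_var)


# Toy example: a 3-token sequence over a 3-symbol vocabulary, 2-D latent (hypothetical values).
probs = [[0.9, 0.05, 0.05], [0.1, 0.8, 0.1], [0.2, 0.2, 0.6]]
targets = [0, 1, 2]
loss = vae_loss(probs, targets, mu=[0.5, -0.2], log_var=[-0.1, 0.1], beta=0.5)
```

Setting beta below 1 weakens the pull toward the prior. This trades latent-space regularity for reconstruction quality.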

Protocol 2: Bayesian Optimization Loop in Latent Space

Objective: Iteratively propose latent vectors likely to yield molecules with improved properties.

Initialization:

- Encode a set of 100-1000 known molecules to form an initial latent dataset (Z, Y), where Y is the property of interest.

Surrogate Model Training:

- Train a Gaussian Process (GP) regression model on (Z, Y) using a Matérn kernel. The GP models the property landscape over the latent space.
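The following plain-Python sketch shows what the GP surrogate actually computes. It makes three assumptions: a zero-mean GP on standardized property values, a Matérn 5/2 kernel with fixed (not fitted) length scale and variance, and a small noise term on the diagonal. Libraries such as GPyTorch or BoTorch also fit the kernel hyperparameters by maximizing the marginal likelihood, which this sketch omits.

```python
import math


def matern52(a, b, lengthscale=1.0, variance=1.0):
    """Matérn 5/2 covariance between two latent vectors a and b."""
    r = math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b))) / lengthscale
    s = math.sqrt(5.0) * r
    return variance * (1.0 + s + s * s / 3.0) * math.exp(-s)


def cholesky(A):
    """Lower-triangular L with A = L L^T (A must be symmetric positive definite)."""
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = A[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(s) if i == j else s / L[j][j]
    return L


def forward_sub(L, b):
    """Solve L x = b for lower-triangular L."""
    x = []
    for i in range(len(b)):
        x.append((b[i] - sum(L[i][k] * x[k] for k in range(i))) / L[i][i])
    return x


def back_sub_t(L, b):
    """Solve L^T x = b for lower-triangular L."""
    n = len(b)
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (b[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x


def gp_posterior(Z, Y, z_query, noise=1e-6, **kern):
    """Posterior mean and standard deviation of a zero-mean GP at z_query."""
    n = len(Z)
    K = [[matern52(Z[i], Z[j], **kern) + (noise if i == j else 0.0) for j in range(n)]
         for i in range(n)]
    L = cholesky(K)                                   # O(n^3) step
    alpha = back_sub_t(L, forward_sub(L, Y))          # alpha = K^{-1} Y
    k_star = [matern52(z, z_query, **kern) for z in Z]
    mean = sum(k * a for k, a in zip(k_star, alpha))
    v = forward_sub(L, k_star)                        # v = L^{-1} k_*
    var = matern52(z_query, z_query, **kern) - sum(x * x for x in v)
    return mean, math.sqrt(max(var, 0.0))
```

Near observed latent points the posterior standard deviation shrinks toward the noise level. Far from the data it reverts to the prior. The acquisition function exploits exactly this contrast.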

Acquisition Function Maximization:

- Compute an acquisition function α(z) (e.g., Expected Improvement, EI) from the GP posterior.

- Maximize α(z) to propose the next latent point z_next. Use a gradient-based optimizer (e.g., L-BFGS) from multiple random starts.
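Acquisition maximization from multiple random starts can be sketched as follows. This sketch replaces L-BFGS with plain projected gradient ascent using central finite-difference gradients, which is simpler but slower. The box `bounds` on the latent coordinates (for example, a few prior standard deviations per dimension) and all default step sizes are illustrative assumptions.

```python
import random


def maximize_acquisition(alpha, bounds, n_starts=20, n_steps=200, lr=0.05, h=1e-5, seed=0):
    """Multi-start, box-constrained gradient ascent on an acquisition function alpha(z).

    Stand-in for L-BFGS(-B): gradients come from central finite differences, and each
    step is projected back into bounds = [(low, high), ...].
    """
    rng = random.Random(seed)

    def clip(z):
        return [min(max(v, lo), hi) for v, (lo, hi) in zip(z, bounds)]

    best_z, best_val = None, float("-inf")
    for _ in range(n_starts):
        z = [rng.uniform(lo, hi) for lo, hi in bounds]       # random starting point
        for _ in range(n_steps):
            grad = []
            for i in range(len(z)):
                zp, zm = list(z), list(z)
                zp[i] += h
                zm[i] -= h
                grad.append((alpha(zp) - alpha(zm)) / (2 * h))
            z = clip([v + lr * g for v, g in zip(z, grad)])  # ascent step + projection
        val = alpha(z)
        if val > best_val:                                   # keep the best local optimum
            best_z, best_val = z, val
    return best_z, best_val
```

Multiple starts matter because acquisition surfaces over latent space are typically multimodal. A single local run can stall on a minor peak.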

Evaluation & Iteration:

- Decode z_next to a molecular structure using the generative model's decoder.

- Crucial Step: Evaluate the proposed molecule with a computational oracle (e.g., a more accurate but more expensive QSAR predictor, or a docking simulation). Record the predicted score as y_next.

- Augment the dataset: Z = Z ∪ {z_next}, Y = Y ∪ {y_next}.

- Repeat from Surrogate Model Training for a fixed number of iterations (e.g., 50-100).

Final Validation:

- Select top candidates from the BO proposals. Proceed to in silico validation (molecular dynamics, ADMET prediction) and ultimately, wet-lab synthesis and testing.

Title: Intractable Direct vs. Feasible Latent Space Optimization

The Scientist's Toolkit: Key Research Reagents & Solutions

Table 2: Essential Tools for Molecular Latent Space Research

| Category | Item/Software | Function & Relevance |
|---|---|---|
| Generative Models | JT-VAE, GraphVAE, G-SchNet | Encode/decode molecules to/from latent space. Provide the continuous representation. |
| BO Libraries | BoTorch, GPyOpt, Dragonfly | Implement Gaussian Processes and acquisition functions for efficient latent space navigation. |
| Cheminformatics | RDKit, Open Babel | Fundamental for molecule handling, featurization, fingerprinting, and basic property calculation. |
| Deep Learning | PyTorch, TensorFlow, Deep Graph Library (DGL) | Frameworks for building and training generative and surrogate models. |
| Molecular Databases | ChEMBL, ZINC, PubChem | Sources of experimental data for training generative and property prediction models. |
| Property Predictors | ADMET predictors (e.g., from Schrödinger, OpenADMET), Quantum Chemistry Codes (e.g., ORCA, Gaussian) | Provide in silico evaluation within the BO loop, acting as proxies for wet-lab assays. |
| Visualization | t-SNE/UMAP, TensorBoard | For visualizing the structure of the learned molecular latent space and optimization trajectories. |

Table 3: Quantitative Comparison of Optimization Approaches

| Method | Search Space Dimensionality | Gradient Availability | Sample Efficiency (Estimated # Evaluations) | Handles Multi-Objective? |
|---|---|---|---|---|
| High-Throughput Screening | Full Molecular Space | No | Very Low (10⁶) | Yes, but post-hoc. |
| Genetic Algorithms | Discrete Molecular Graph | No | Low-Medium (10³–10⁴) | Yes. |
| Reinforcement Learning | Sequential Actions (e.g., SMILES) | Policy Gradient | Medium (10³–10⁴) | Possible with reward shaping. |
| Direct Gradient-Based | Continuous Fingerprint | Yes (w/ smoothing) | Medium (10²–10³) | Difficult. |
| BO in Latent Space (Proposed) | Continuous Latent Vector (z~128) | Via Surrogate Model | High (10¹–10²) | Yes (e.g., ParEGO, EHVI). |

What is a Molecular Latent Space? From SMILES Strings to Continuous Vectors

Molecular latent spaces are low-dimensional, continuous vector representations generated by deep learning models from discrete molecular structures, such as SMILES (Simplified Molecular Input Line Entry System) strings. Within the broader thesis on Bayesian Optimization in Molecular Latent Space Research, these spaces serve as the critical substrate for optimization. They enable the efficient navigation of chemical space to discover molecules with desired properties, circumventing the need for expensive physical synthesis and high-throughput screening at every iteration. This document outlines the core concepts, generation protocols, and application notes for utilizing molecular latent spaces in computational drug discovery.

Core Concepts and Data Presentation

Key Models for Latent Space Generation

Different deep learning architectures generate latent spaces with varying properties, influencing their suitability for Bayesian optimization.

Table 1: Comparison of Molecular Latent Space Models

| Model Architecture | Key Mechanism | Latent Space Dimension (Typical) | Pros for Bayesian Optimization | Cons |
|---|---|---|---|---|
| Variational Autoencoder (VAE) | Encoder compresses SMILES to a probabilistic latent distribution (mean, variance); decoder reconstructs SMILES. | 128 - 512 | Smooth, interpolatable space; inherent regularization. | May generate invalid SMILES; potential posterior collapse. |
| Adversarial Autoencoder (AAE) | Uses an adversarial network to regularize the latent space to a prior distribution (e.g., Gaussian). | 128 - 256 | Tighter control over latent distribution; often higher validity rates. | More complex training; tuning of adversarial loss required. |
| Transformer-based (e.g., ChemBERTa) | Contextual embeddings from masked language modeling of SMILES tokens. | 384 - 1024 (per token) | Rich, context-aware features. | Not a single, fixed vector per molecule without pooling; less inherently interpolatable. |
| Graph Neural Network (GNN) | Encodes molecular graph structure (atoms, bonds) directly. | 256 - 512 | Captures structural topology explicitly. | Computational overhead; discrete graph alignment in latent space. |

Quantitative Benchmarking Data

Table 2: Performance Metrics of VAE-based Latent Space on ZINC250k Dataset

| Metric | Value | Description |
|---|---|---|
| Reconstruction Accuracy | 76.4% | Percentage of SMILES perfectly reconstructed. |
| Validity Rate (Sampled) | 85.7% | Percentage of random latent vectors decoding to valid SMILES. |
| Uniqueness (Sampled) | 94.2% | Percentage of valid molecules that are unique. |
| Novelty (vs. Training Set) | 62.8% | Percentage of valid, unique molecules not in training data. |
| Property Prediction (MAE on QED)* | 0.082 | Mean Absolute Error of a predictor trained on latent vectors. |

*Quantitative Estimate of Drug-likeness

Experimental Protocols

Protocol: Training a SMILES VAE for Latent Space Generation

Objective: To train a Variational Autoencoder to create a continuous, 128-dimensional latent space from SMILES strings.

Materials & Reagents: See "The Scientist's Toolkit" below.

Procedure:

Data Preprocessing:

- Source a dataset (e.g., ZINC250k, ChEMBL).

- Canonicalize all SMILES using RDKit (Chem.CanonSmiles).

- Apply a length filter (e.g., a maximum length chosen in the 50-100 character range).

- Create a character vocabulary from all unique symbols in the dataset.

- Pad all SMILES to a uniform length with a <PAD> token.

- Split data into training (80%), validation (10%), and test (10%) sets.
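The string-handling steps above can be sketched without any dependencies. Canonicalization is omitted here; it would be applied first with RDKit's `Chem.CanonSmiles`. The helper names, the exact-duplicate removal, the 100-character cutoff, and the fixed random seed are illustrative assumptions. A purely character-level vocabulary, as described above, splits two-letter element symbols such as `Cl` and `Br` into separate tokens.

```python
import random

PAD = "<PAD>"


def length_filter(smiles_list, max_len=100):
    """Drop exact duplicates (keeping first occurrence) and SMILES longer than max_len."""
    return [s for s in dict.fromkeys(smiles_list) if len(s) <= max_len]


def build_vocab(smiles_list):
    """Character-level vocabulary; index 0 is reserved for the padding token."""
    chars = sorted({c for s in smiles_list for c in s})
    return {tok: i for i, tok in enumerate([PAD] + chars)}


def encode_padded(smiles, vocab, max_len):
    """Map a SMILES string to token indices, right-padded with <PAD> up to max_len."""
    ids = [vocab[c] for c in smiles]
    return ids + [vocab[PAD]] * (max_len - len(ids))


def split_80_10_10(items, seed=0):
    """Shuffle, then split into training (80%), validation (10%) and test (10%) sets."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_train, n_val = int(0.8 * len(items)), int(0.1 * len(items))
    return items[:n_train], items[n_train:n_train + n_val], items[n_train + n_val:]


data = length_filter(["CCO", "c1ccccc1", "CCO", "CC(=O)Nc1ccc(O)cc1"])
vocab = build_vocab(data)
max_len = max(len(s) for s in data)
encoded = [encode_padded(s, vocab, max_len) for s in data]
train_set, val_set, test_set = split_80_10_10(encoded)
```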

Model Architecture Definition:

- Encoder: A 3-layer bidirectional GRU RNN. The final hidden states are passed through two separate dense linear layers to produce the latent mean (mu) and log-variance (log_var) vectors (size 128 each).

- Sampling: Use the reparameterization trick: z = mu + exp(0.5 * log_var) * epsilon, where epsilon ~ N(0, I).

- Decoder: A 2-layer GRU RNN, initialized with the latent vector z, which autoregressively generates the SMILES string token-by-token.
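The reparameterization trick is short enough to show directly. This pure-Python sketch samples one latent vector. In PyTorch, the same operation is typically written `mu + torch.exp(0.5 * log_var) * torch.randn_like(mu)`.

```python
import math
import random


def reparameterize(mu, log_var, rng=random):
    """Sample z = mu + exp(0.5 * log_var) * epsilon, epsilon ~ N(0, I).

    All randomness sits in epsilon, so z is a deterministic, differentiable function of
    mu and log_var; this is what lets gradients reach the encoder during training.
    """
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]
```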

Training:

- Loss Function: Combined reconstruction loss (cross-entropy) and Kullback-Leibler (KL) divergence loss: Total Loss = CE_Loss + beta * KL_Loss. Start with beta = 0.001 and increase it gradually during training (KL annealing).

- Optimizer: Adam with learning rate = 0.0005.

- Procedure: Train for 100-200 epochs. Monitor validation loss and validation-set reconstruction accuracy. Stop early if validation loss fails to improve for 10 consecutive epochs.
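The text leaves the annealing schedule open. This sketch assumes, for illustration only, a linear warm-up of beta from 0.001 to 1.0 over the first 50 epochs, which is one common choice. The early-stopping helper implements the 10-epoch patience rule described above. Both `beta_schedule` and `EarlyStopping` are hypothetical names.

```python
def beta_schedule(epoch, beta_start=0.001, beta_end=1.0, warmup_epochs=50):
    """Linear KL-annealing schedule: ramp beta from beta_start to beta_end, then hold."""
    if epoch >= warmup_epochs:
        return beta_end
    return beta_start + (beta_end - beta_start) * epoch / warmup_epochs


class EarlyStopping:
    """Signal a stop once validation loss has not improved for `patience` epochs."""

    def __init__(self, patience=10, min_delta=0.0):
        self.patience, self.min_delta = patience, min_delta
        self.best, self.bad_epochs = float("inf"), 0

    def step(self, val_loss):
        """Record one epoch's validation loss; return True when training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best, self.bad_epochs = val_loss, 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience
```

Inside a training loop, compute `beta_schedule(epoch)` at the start of each epoch and break as soon as `stopper.step(val_loss)` returns True.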

Latent Space Validation:

- Interpolation: Linearly interpolate between latent vectors of two known active molecules. Decode vectors at intervals. Assess smoothness of property change and validity of intermediates.

- Random Sampling: Sample 10,000 vectors from N(0, I). Decode them and compute validity, uniqueness, and novelty rates (Table 2).
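Both validation checks can be expressed compactly. Here the validity test is passed in as a function; with RDKit it would typically be `Chem.MolFromSmiles(s) is not None`. Decoded strings are assumed to be canonicalized before counting, so that uniqueness and novelty compare molecules rather than spellings. The metric definitions follow Table 2: uniqueness is measured relative to valid molecules, and novelty relative to valid, unique ones.

```python
def interpolate(z_a, z_b, n_steps=10):
    """Evenly spaced points on the line from z_a to z_b, endpoints included (n_steps >= 2)."""
    return [[a + t / (n_steps - 1) * (b - a) for a, b in zip(z_a, z_b)]
            for t in range(n_steps)]


def generation_metrics(decoded, training_set, is_valid):
    """Validity, uniqueness and novelty rates for strings decoded from random latent samples.

    decoded:      canonical SMILES decoded from the sampled latent vectors
    training_set: collection of canonical training SMILES
    is_valid:     predicate deciding whether a string is a valid molecule
    """
    valid = [s for s in decoded if is_valid(s)]
    unique = set(valid)
    novel = unique - set(training_set)
    return {
        "validity": len(valid) / len(decoded) if decoded else 0.0,
        "uniqueness": len(unique) / len(valid) if valid else 0.0,
        "novelty": len(novel) / len(unique) if unique else 0.0,
    }
```

For the interpolation check, each point returned by `interpolate` is decoded. The property computed for each intermediate molecule then shows whether it changes smoothly along the path.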

Protocol: Bayesian Optimization in a Trained Latent Space

Objective: To optimize a target molecular property (e.g., binding affinity predicted by a surrogate model) using Bayesian optimization over the pre-trained latent space.

Procedure:

- Surrogate Model Training:

- Encode a dataset of molecules with known property values into latent vectors Z.

- Train a Gaussian Process (GP) regression model or a Random Forest on the (Z, Property) pairs. This is the surrogate model f(z).

Acquisition Function Setup:

- Define an acquisition function a(z), such as Expected Improvement (EI): EI(z) = E[max(f(z) - f(z*), 0)], where f(z*) is the best property value observed so far and the expectation is taken over the surrogate's predictive distribution at z.

- The acquisition function balances exploration (sampling uncertain regions) and exploitation (sampling near current optima).
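Under a Gaussian posterior with mean mu(z) and standard deviation sigma(z), the expectation in EI has a well-known closed form. This sketch applies it to maximization and includes an optional exploration margin xi; setting xi = 0 recovers the formula above. It relies only on the standard library's `statistics.NormalDist`.

```python
from statistics import NormalDist

_STD_NORMAL = NormalDist()  # standard normal: mean 0, sigma 1


def expected_improvement(mu, sigma, best_f, xi=0.0):
    """Closed-form EI for maximization under a Gaussian posterior N(mu, sigma^2).

    EI = (mu - best_f - xi) * Phi(u) + sigma * phi(u),  with u = (mu - best_f - xi) / sigma
    """
    improvement = mu - best_f - xi
    if sigma <= 0.0:                      # no uncertainty: EI is the plain improvement
        return max(improvement, 0.0)
    u = improvement / sigma
    return improvement * _STD_NORMAL.cdf(u) + sigma * _STD_NORMAL.pdf(u)
```

The first term rewards a high predicted mean (exploitation). The second rewards high uncertainty (exploration). The result is never negative.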

Optimization Loop:

- Initialization: Select an initial set of 20-50 points via Latin Hypercube Sampling in latent space.

- Iteration (for 100 steps):

a. Encode all evaluated molecules and update the surrogate model f(z) with all (z, property) data.

b. Find the latent vector z_next that maximizes the acquisition function a(z) using a gradient-based optimizer (e.g., L-BFGS-B).

c. Decode z_next to a SMILES string.

d. Virtual Screening: Predict the property of the decoded molecule using a more expensive, more accurate oracle (e.g., a docking simulation or a high-fidelity ML predictor). This serves as the ground-truth evaluation for the loop.

e. Add the new (z_next, oracle_property) pair to the dataset.

- Termination: Stop after a fixed budget or when property improvement plateaus.
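The loop structure, including Latin Hypercube initialization and plateau-based termination, can be sketched as a skeleton into which the model-specific parts are injected. `decode`, `oracle`, `fit_surrogate`, and `propose` are hypothetical callables. They stand in for the VAE decoder, the docking or high-fidelity predictor, the GP fit, and acquisition maximization. The re-encoding in step a is omitted for brevity, and the patience of 15 iterations that defines a plateau is an assumption.

```python
import random


def latin_hypercube(n, bounds, rng):
    """n points in a box; each dimension is cut into n equal strata, each used exactly once."""
    columns = []
    for lo, hi in bounds:
        strata = list(range(n))
        rng.shuffle(strata)
        columns.append([lo + (s + rng.random()) / n * (hi - lo) for s in strata])
    return [list(point) for point in zip(*columns)]


def bo_loop(decode, oracle, fit_surrogate, propose, bounds,
            n_init=20, budget=100, patience=15, seed=0):
    """Latent-space BO skeleton with LHS initialization and plateau-based stopping.

    decode(z) -> SMILES; oracle(smiles) -> property (higher is better);
    fit_surrogate(Z, Y) -> model; propose(model, Z, Y, bounds) -> z_next.
    """
    rng = random.Random(seed)
    Z = latin_hypercube(n_init, bounds, rng)        # initial design
    Y = [oracle(decode(z)) for z in Z]              # evaluate the initial design
    best, stale = max(Y), 0
    for _ in range(budget):
        model = fit_surrogate(Z, Y)                 # a. update the surrogate
        z_next = propose(model, Z, Y, bounds)       # b. maximize the acquisition
        y_next = oracle(decode(z_next))             # c-d. decode, then score with the oracle
        Z.append(z_next)                            # e. augment the dataset
        Y.append(y_next)
        if y_next > best:
            best, stale = y_next, 0
        else:
            stale += 1
        if stale >= patience:                       # improvement has plateaued
            break
    i_best = max(range(len(Y)), key=Y.__getitem__)
    return Z[i_best], Y[i_best], len(Y)


# Toy run: identity "decoder", quadratic "oracle", and a random proposer (no surrogate).
z_best, y_best, n_evals = bo_loop(
    decode=lambda z: z,
    oracle=lambda z: -sum((v - 0.5) ** 2 for v in z),
    fit_surrogate=lambda Z, Y: None,
    propose=lambda model, Z, Y, b: [random.Random(len(Z)).uniform(lo, hi) for lo, hi in b],
    bounds=[(-3.0, 3.0)] * 2,
)
```

In a real run, `propose` would fit the GP, then maximize EI over the latent box, and `oracle` would wrap the docking or high-fidelity scoring call.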

Visualizations

Title: Molecular Latent Space Generation & Bayesian Optimization Workflow

Title: Mapping from Discrete Molecules to Continuous Latent Space

The Scientist's Toolkit

Table 3: Essential Research Reagents & Software for Molecular Latent Space Research

| Item Name | Category | Function/Brief Explanation |
|---|---|---|
| RDKit | Open-Source Cheminformatics Library | Fundamental for SMILES parsing, canonicalization, molecular manipulation, and basic descriptor calculation. |
| PyTorch / TensorFlow | Deep Learning Framework | Provides the flexible environment for building, training, and deploying VAEs, GNNs, and other generative models. |
| GPyTorch / BoTorch | Bayesian Optimization Libraries | Specialized libraries for building Gaussian Process surrogate models and performing advanced Bayesian optimization. |
| ZINC / ChEMBL Databases | Molecular Structure Databases | Large, publicly available sources of SMILES strings and associated bioactivity data for training models. |
| Schrödinger Suite, AutoDock Vina | Molecular Docking Software | Acts as the oracle in the BO loop, providing high-fidelity property estimates (e.g., binding affinity) for proposed molecules. |
| CUDA-enabled GPU | Hardware | Accelerates the training of deep neural networks and the inference of large-scale surrogate models. |
| MolVS | Python Library | Used for standardizing and validating molecular structures, crucial for cleaning training data and generated outputs. |
| scikit-learn | Machine Learning Library | Provides utilities for data splitting, preprocessing, and baseline machine learning models for property prediction. |

Bayesian Optimization (BO) is a powerful, sample-efficient strategy for globally optimizing black-box functions that are expensive to evaluate. Within the context of molecular latent space research for drug development, BO provides a principled mathematical framework to navigate the vast, complex chemical space. It balances exploration (probing uncertain regions of the latent space to improve the surrogate model) and exploitation (concentrating on regions predicted to be high-performing based on existing data) to iteratively propose novel molecular candidates with desired properties. This approach is critical for tasks such as de novo molecular design, lead optimization, and predicting compound activity, where each experimental synthesis and assay is costly and time-consuming.

Core Theoretical Framework

BO operates through two core components:

- A Probabilistic Surrogate Model: Typically a Gaussian Process (GP), which places a prior over the objective function (e.g., binding affinity, solubility) and updates it to a posterior as data is observed. It provides a mean prediction and uncertainty estimate at any point in the latent space.

- An Acquisition Function: Uses the surrogate's posterior to quantify the utility of evaluating a new point. It automatically balances exploration and exploitation. Common functions include Expected Improvement (EI), Upper Confidence Bound (UCB), and Probability of Improvement (PI).

Application Notes & Protocols in Molecular Design

Table 1: Common Acquisition Functions & Their Use-Cases

| Acquisition Function | Mathematical Focus | Best Use-Case in Molecular Design | Key Parameter |
|---|---|---|---|
| Expected Improvement (EI) | Expected value of improvement over current best. | General-purpose optimization; balanced search. | ξ (Exploration bias) |
| Upper Confidence Bound (UCB) | Optimistic estimate: μ + κσ. | Explicit control of exploration/exploitation. | κ (Balance parameter) |
| Probability of Improvement (PI) | Probability that a point improves over current best. | Local refinement of a promising lead. | ξ (Trade-off parameter) |
| Entropy Search (ES) | Maximizes reduction in uncertainty about optimum. | High-precision identification of global optimum. | None (main drawback: high computational cost) |
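UCB and PI are one-liners once the surrogate provides mu and sigma. The following sketch uses hypothetical numbers to illustrate the trade-off summarized in the table. With kappa = 2, UCB favors an uncertain candidate. PI favors a candidate that is only slightly better than the incumbent but almost certainly so.

```python
from statistics import NormalDist


def upper_confidence_bound(mu, sigma, kappa=2.0):
    """UCB = mu + kappa * sigma; a larger kappa weights uncertainty (exploration) more."""
    return mu + kappa * sigma


def probability_of_improvement(mu, sigma, best_f, xi=0.01):
    """PI = Phi((mu - best_f - xi) / sigma) for maximization."""
    if sigma <= 0.0:
        return 1.0 if mu > best_f + xi else 0.0
    return NormalDist().cdf((mu - best_f - xi) / sigma)


# Two hypothetical candidates, given as (posterior mean, posterior std), vs. incumbent 0.9.
candidates = {"exploit": (1.0, 0.05), "explore": (0.8, 0.5)}
best_f = 0.9
ucb = {k: upper_confidence_bound(m, s) for k, (m, s) in candidates.items()}
pi = {k: probability_of_improvement(m, s, best_f) for k, (m, s) in candidates.items()}
```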

Protocol 1: Bayesian Optimization Workflow for De Novo Molecular Design

Objective: To discover novel molecular structures in a continuous latent space (e.g., from a Variational Autoencoder) that maximize a target property.

Materials & Reagents:

- Pre-trained Molecular Latent Space Model: (e.g., VAE, JT-VAE) to encode/decode SMILES strings.

- Initial Dataset: 50-200 molecules with associated property values.

- Property Prediction Proxy or Experimental Assay: For function evaluation.

- BO Software Stack: (e.g., BoTorch, GPyOpt, scikit-optimize).

Procedure:

- Initialization: Encode the initial molecular dataset into latent vectors Z_init. Define the objective function f(z), which decodes z to a molecule and then evaluates its property.

- Surrogate Model Training: Fit a Gaussian Process model to the data {Z_init, f(Z_init)}. Standardize the output data.

- Acquisition Optimization: Maximize the chosen acquisition function α(z) (e.g., EI) over the latent space to propose the next point z_next, subject to the constraint that z_next decodes to a valid molecular structure.

- Function Evaluation: Decode z_next to its molecular representation (SMILES) and evaluate its property via simulator or assay (f(z_next)).

- Data Augmentation: Append the new observation {z_next, f(z_next)} to the dataset.

- Iteration: Repeat from Surrogate Model Training through Data Augmentation for a predetermined budget (e.g., 100-200 iterations) or until a performance threshold is met.

- Analysis: Decode the latent point with the highest observed f(z) and validate the top candidates experimentally.
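Two of the steps above, standardizing the outputs and enforcing the validity constraint, can be sketched as follows. The sketch handles the constraint by walking through candidates in descending acquisition order and keeping the first one that decodes to a valid structure. This is one simple option assumed here, not the only one; a learned validity classifier used as a constraint inside the acquisition function is another. `decode` and `is_valid` are hypothetical callables.

```python
import math


def standardize(y):
    """Z-score observed property values before GP fitting; also return the inverse map."""
    mean = sum(y) / len(y)
    std = math.sqrt(sum((v - mean) ** 2 for v in y) / len(y)) or 1.0  # guard constant y
    return [(v - mean) / std for v in y], (lambda t: t * std + mean)


def first_valid_proposal(ranked_candidates, decode, is_valid):
    """Return the highest-ranked latent point whose decoded structure passes is_valid."""
    for z in ranked_candidates:
        smiles = decode(z)
        if is_valid(smiles):
            return z, smiles
    return None, None  # no candidate decoded validly; caller should widen the search
```

The inverse map converts GP predictions back to the original property scale (for example, pIC50) for reporting.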

Table 2: Example BO Run Metrics for a Challenging Protein Target (Hypothetical Data)

| Iteration Batch | Best Affinity (pIC50) | Novel Molecular Scaffolds Found | Acquisition Function | Surrogate Model RMSE |
|---|---|---|---|---|
| Initial (50 mol.) | 6.2 | 3 (from seed) | N/A | N/A |
| 1-20 | 7.1 | 5 | Expected Improvement | 0.45 |
| 21-50 | 8.0 | 12 | Upper Confidence Bound (κ=2.0) | 0.32 |
| 51-100 | 8.5 | 4 (optimized leads) | Expected Improvement | 0.21 |

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Toolkit for Bayesian Molecular Optimization

| Item | Function/Description | Example/Provider |
|---|---|---|
| Latent Space Generator | Encodes/decodes molecules to/from continuous representation. | ChemVAE, JT-VAE, GPSynth |
| Surrogate Model Library | Builds and updates the probabilistic model (GP). | GPyTorch, scikit-learn, Stan |
| Bayesian Optimization Suite | Provides acquisition functions and optimization loops. | BoTorch, GPyOpt, Trieste |
| Property Predictor | Fast in silico proxy for the expensive experimental assay. | QSAR model, molecular dynamics simulation, docking score |
| Chemical Space Visualizer | Projects high-D latent space to 2D/3D for monitoring. | t-SNE (scikit-learn), UMAP, PCA |
| Molecular Validity Checker | Ensures proposed latent points decode to chemically valid/stable structures. | RDKit, ChEMBL structure filters |

Visualizations

Diagram 1: Bayesian Optimization Iterative Cycle

Diagram 2: Exploration vs. Exploitation in Latent Space

Protocol 2: Validating BO Proposals Experimentally

Objective: To experimentally validate the top molecules proposed by a BO run in a target-binding assay.

Materials:

- Compound Library: Top 10-20 molecules from BO (as SMILES strings).

- Control Compounds: Known active and inactive molecules for the target.

- Assay Kit: e.g., Fluorescence polarization or TR-FRET binding assay for the target protein.

- Equipment: Plate reader, liquid handler, microplate incubator.

Procedure:

- Compound Procurement/Synthesis: Based on SMILES, source or synthesize the proposed compounds. Verify purity (>95%) and identity (LC-MS, NMR).

- Assay Plate Preparation: Prepare a dilution series of each test compound (e.g., 10-point, 1:3 serial dilution in DMSO). Transfer to assay plates.

- Binding Reaction: Add target protein and fluorescent tracer to all wells according to assay manufacturer protocol. Include controls (no compound for max signal, reference inhibitor for min signal).

- Incubation: Incubate the plate in the dark at room temperature (RT) until binding reaches equilibrium (e.g., 1 hour).

- Signal Measurement: Read plate using appropriate instrument settings.

- Data Analysis: Calculate % inhibition and fit dose-response curves to determine IC50/pIC50 values for each compound.

- Model Feedback: Append experimental pIC50 values to the BO dataset. Retrain the surrogate model to refine future optimization cycles.
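The data-analysis step can be sketched as follows. This sketch estimates IC50 by linear interpolation in log-concentration between the two tested points that bracket 50% inhibition. That is only a quick sanity check; a full analysis would instead fit a four-parameter logistic (Hill) curve. The top concentration is a free parameter, and any value used with these helpers is hypothetical. The conversion pIC50 = -log10(IC50 in mol/L) is standard.

```python
import math


def serial_dilution(top_conc_uM, n_points=10, factor=3.0):
    """Concentrations (uM) of an n-point serial dilution, e.g. 10-point 1:3."""
    return [top_conc_uM / factor ** i for i in range(n_points)]


def percent_inhibition(signal, max_signal, min_signal):
    """0% at the no-compound (max) control, 100% at the reference-inhibitor (min) control."""
    return 100.0 * (max_signal - signal) / (max_signal - min_signal)


def ic50_by_interpolation(concs_uM, inhibition):
    """IC50 (uM) by linear interpolation in log10(concentration) at 50% inhibition."""
    pts = sorted(zip(concs_uM, inhibition))
    for (c1, i1), (c2, i2) in zip(pts, pts[1:]):
        if i1 <= 50.0 <= i2 and i2 != i1:
            frac = (50.0 - i1) / (i2 - i1)
            log_ic50 = math.log10(c1) + frac * (math.log10(c2) - math.log10(c1))
            return 10 ** log_ic50
    return None  # 50% inhibition is not bracketed by the tested range


def pic50(ic50_uM):
    """pIC50 = -log10(IC50 in mol/L); an IC50 of 1 uM corresponds to pIC50 = 6."""
    return -math.log10(ic50_uM * 1e-6)
```

The resulting pIC50 values are what the Model Feedback step appends to the BO dataset.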

Within the broader thesis on Bayesian optimization (BO) for molecular design, this document explores its unique synergy with learned latent spaces. Molecular latent spaces are continuous, lower-dimensional representations generated by deep generative models (e.g., VAEs, GANs) from discrete chemical structures. Navigating these spaces to find points corresponding to molecules with optimal properties is a high-dimensional, expensive black-box optimization problem, for which BO is well suited.

Theoretical Foundation & Comparative Advantages

Bayesian optimization provides a principled framework for global optimization of expensive-to-evaluate functions. Its synergy with latent spaces is rooted in several key attributes:

| BO Characteristic | Challenge in Molecular Design | BO's Advantage in Latent Space |
|---|---|---|
| Sample Efficiency | Experimental assays & simulations are costly and time-consuming. | Requires far fewer iterations to find optima than grid or random search. |
| Handles Black-Box Functions | The relationship between molecular structure and property is complex and unknown. | Needs no closed-form model of the objective; learns from input-output data alone, with only the smoothness assumptions encoded in the surrogate's kernel. |
| Natural Uncertainty Quantification | Predictions from machine learning models have inherent error. | The surrogate model (e.g., Gaussian Process) provides mean and variance at any query point. |
| Balances Exploration/Exploitation | Must avoid local optima (e.g., a suboptimal scaffold) and refine promising regions. | The acquisition function (e.g., EI, UCB) automatically balances searching new regions vs. improving known good ones. |
| Optimizes in Continuous Space | Molecular latent spaces are continuous by design. | BO natively operates in continuous domains, smoothly traversing the latent manifold. |

Application Notes: Key Research Findings

Recent studies (2023-2024) underscore the practical efficacy of BO in latent spaces for drug discovery objectives.

Table 1: Summary of Recent BO-in-Latent-Space Studies for Molecular Design

| Study (Source) | Generative Model | BO Target | Key Result (Quantitative) | Search Efficiency |
|---|---|---|---|---|
| Griffiths et al., 2023 (arXiv) | JT-VAE | Penalized LogP & QED Optimization | Achieved >90% of possible ideal gain within 20 optimization steps. | 20 iterations |
| Nguyen et al., 2024 (ChemRxiv) | GFlowNet | Multi-Objective: Binding Affinity & Synthesizability | Found 150+ novel, Pareto-optimal candidates in under 100 acquisition steps. | 100 iterations |
| Benchmark: Zhou et al., 2024 (Nat. Mach. Intell.) | Moses VAE | DRD2 Activity & SA Score | BO outperformed genetic algorithms in success rate (78% vs. 65%) and sample efficiency. | 50 iterations |
| Thompson et al., 2023 (J. Chem. Inf. Model.) | GPSynth (Transformer) | High Affinity for EGFR Kinase | Identified 5 novel hits with pIC50 > 8.0 from a virtual library of 10^6 possibilities. | 40 iterations |

Detailed Experimental Protocols

Protocol 4.1: Standard BO Loop for Molecular Property Optimization in a Pre-Trained VAE Latent Space

Objective: To optimize a target molecular property (e.g., binding affinity prediction) by searching the continuous latent space of a pre-trained Variational Autoencoder.

I. Materials & Pre-requisites

- Pre-trained Molecular VAE: A model trained to encode molecules (SMILES) to latent vectors z and decode them back.

- Property Prediction Model: A separate regressor/classifier (e.g., Random Forest, NN) that predicts the target property from a molecular structure or fingerprint.

- Initial Dataset: A small set (~50-200) of known molecules with evaluated target property.

- BO Software: Installed libraries (e.g., BoTorch, GPyOpt, scikit-optimize).

II. Procedure

Step 1: Data Preparation & Latent Projection

- Encode all molecules in the initial dataset into latent vectors using the encoder of the pre-trained VAE.

- Pair each latent vector with its corresponding experimental or predicted property value (y). This forms the initial dataset D = {(z₁, y₁), ..., (zₙ, yₙ)}.

Step 2: Surrogate Model Initialization

- Choose a Gaussian Process (GP) surrogate model. Standard practice uses a Matérn 5/2 kernel.

- Fit the GP to the initial dataset D. The GP will model the mean and uncertainty of the property function f(z) across the latent space.

Step 3: Acquisition Function Maximization

- Select an acquisition function α(z). Expected Improvement (EI) is recommended for most single-objective tasks.

- Optimize α(z) over the latent space domain to find the point z* = argmax_z α(z; GP, D) at which the acquisition function is maximized. Because L-BFGS-B is a local, gradient-based optimizer, run it from many random starting points (multi-start) and keep the best result.

Step 4: Candidate Proposal & Evaluation

- Decode the proposed latent point z* into a molecular structure (SMILES) using the VAE decoder.

- Evaluate the property of the proposed molecule using the property prediction model.

- Critical Validation Step: For downstream experimental work, top candidates must be validated via more rigorous methods (e.g., molecular docking, MD simulation, or in vitro assay).

Step 5: Iterative Update

- Augment the dataset D with the newly evaluated pair (z*, y_new), where y_new is the property value of the decoded molecule.

- Refit (update) the GP surrogate model with the augmented dataset.

- Repeat from Step 3 for a predetermined number of iterations (typically 20-100).

Step 6: Post-Processing & Analysis

- After the final iteration, select the top-k candidate molecules from the entire history of D.

- Analyze the chemical diversity, scaffolds, and predicted ADMET properties of the proposed set.

- Output: A list of novel, optimized candidate molecules for synthesis and testing.
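For Step 6, a greedy Tanimoto-similarity filter can select the top-k while keeping the set chemically diverse. Fingerprints are assumed to be given as sets of on-bit indices, for example from RDKit Morgan fingerprints via `GetOnBits()`. The 0.7 similarity cutoff is an illustrative assumption, not a recommended value.

```python
def tanimoto(fp_a, fp_b):
    """Tanimoto similarity of two fingerprints given as sets of on-bit indices."""
    if not fp_a and not fp_b:
        return 1.0
    shared = len(fp_a & fp_b)
    return shared / (len(fp_a) + len(fp_b) - shared)


def top_k_diverse(history, k, max_sim=0.7):
    """Greedy top-k: take the best scores first, skipping near-duplicates of kept picks.

    history: (smiles, score, fingerprint_bits) tuples from the entire BO run (dataset D).
    """
    picked = []
    for smiles, score, fp in sorted(history, key=lambda t: t[1], reverse=True):
        if all(tanimoto(fp, kept_fp) < max_sim for _, _, kept_fp in picked):
            picked.append((smiles, score, fp))
        if len(picked) == k:
            break
    return picked
```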

III. The Scientist's Toolkit: Research Reagent Solutions

| Item / Resource | Function in Protocol | Example / Provider |
|---|---|---|
| Pre-trained Molecular VAE | Provides the structured, continuous latent space to be navigated. | ChemVAE (Github), Moses framework models. |
| Property Prediction Model | Serves as the expensive-to-query "oracle" function for BO. | A trained Random Forest on ChEMBL data; a fine-tuned ChemBERTa. |
| BO Framework | Implements the GP, acquisition functions, and optimization loop. | BoTorch (PyTorch-based), GPyOpt. |
| Chemical Validation Suite | Validates the chemical feasibility and properties of BO-proposed molecules. | RDKit (for SA Score, ring alerts), Schrödinger Suite or AutoDock for docking. |
| Cloud/Compute Credits | Provides the computational resources for iterative GP fitting and candidate evaluation. | AWS EC2 (GPU instances), Google Cloud TPUs. |

Protocol 4.2: Constrained Multi-Objective BO for Hit-to-Lead Optimization

Objective: To optimize primary activity (e.g., pIC50) while simultaneously improving a secondary property (e.g., solubility) and satisfying chemical constraints (e.g., no PAINS), within a latent space.

Modifications to Protocol 4.1:

- Surrogate Model: Use independent GPs for each objective or a multi-output GP.

- Acquisition Function: Use a constrained or multi-objective acquisition function (e.g., Expected Hypervolume Improvement (EHVI) with constraints).

- Evaluation: Each proposed molecule is scored by multiple property prediction models.

- Output: A Pareto front of candidate molecules representing the best trade-offs between objectives.
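The Pareto front required by the Output step can be extracted from the evaluated candidates as follows. All objectives are assumed to be maximized; a property to be minimized can be negated. The chemical constraint is passed in as a hypothetical predicate, for example a PAINS filter. The sketch covers only this final non-dominated selection and does not implement EHVI itself.

```python
def dominates(a, b):
    """True if objective vector a Pareto-dominates b (all objectives maximized)."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


def pareto_front(candidates, constraint=lambda c: True):
    """Feasible, non-dominated candidates; each candidate is (name, objective_tuple)."""
    feasible = [c for c in candidates if constraint(c)]
    return [c for c in feasible
            if not any(dominates(o[1], c[1]) for o in feasible if o is not c)]


# Hypothetical (pIC50, solubility) pairs; both are to be maximized.
mols = [("m1", (8.0, -3.0)), ("m2", (7.0, -2.0)), ("m3", (6.5, -3.5)), ("m4", (8.5, -4.0))]
front = pareto_front(mols)
```

In this toy set, m3 is dominated by m1 (lower on both objectives). m1, m2, and m4 each represent a different activity/solubility trade-off.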

Visualizations

Diagram Title: Bayesian Optimization Workflow in a Molecular Latent Space

Diagram Title: BO Algorithm Variants and Their Drug Discovery Applications

Application Notes

Core Component Synergy in Molecular Optimization

The integration of Gaussian Processes (GPs), acquisition functions, and autoencoders establishes a robust framework for Bayesian Optimization (BO) in molecular latent space. This synergy enables efficient navigation of vast chemical spaces to identify compounds with optimized properties.

Table 1: Quantitative Comparison of Key BO Components

| Component | Primary Function | Key Hyperparameters | Typical Output | Computational Complexity |

|---|---|---|---|---|

| Gaussian Process (Surrogate) | Models the objective function (e.g., bioactivity) probabilistically. | Kernel type (e.g., Matérn 5/2), length scales, noise variance. | Predictive mean (μ) and uncertainty (σ) for any latent point. | O(n³) for training (n=observations). |

| Acquisition Function | Guides the selection of the next experiment by balancing exploration and exploitation. | Exploration parameter (ξ), incumbent value (μ*). | Single-point recommendation in latent space. | Per candidate: O(n) for the predictive mean and O(n²) for the predictive variance, after the GP's one-time O(n³) factorization. |

| Autoencoder | Encodes molecules into a continuous, smooth latent representation. | Latent dimension, KL weight (β), reconstruction loss weight, architecture depth. | Low-dimensional latent vector (z) for a molecule. | One forward pass per molecule; cost depends on architecture and input length. |
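The surrogate row of Table 1 can be made concrete with a minimal, pure-Python GP posterior using a Matérn 5/2 kernel. This is not GPyTorch code. It assumes a zero prior mean and fixed, hand-set hyperparameters; it does not learn them by marginal-likelihood fitting. It shows where the O(n³) Cholesky factorization occurs and why, per candidate, the mean costs O(n) and the variance O(n²).

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def matern52(x: Vector, y: Vector, lengthscale: float = 1.0, outputscale: float = 1.0) -> float:
    """Matérn 5/2 kernel: s * (1 + sqrt(5) r + 5 r^2 / 3) * exp(-sqrt(5) r)."""
    r = math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y))) / lengthscale
    s5r = math.sqrt(5.0) * r
    return outputscale * (1.0 + s5r + 5.0 * r * r / 3.0) * math.exp(-s5r)


def cholesky(a: List[List[float]]) -> List[List[float]]:
    """Lower-triangular L with a = L L^T (the O(n^3) training step)."""
    n = len(a)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = math.sqrt(a[i][i] - s)
            else:
                L[i][j] = (a[i][j] - s) / L[j][j]
    return L


def solve_lower(L: List[List[float]], b: Sequence[float]) -> List[float]:
    """Solve L x = b by forward substitution."""
    x: List[float] = []
    for i in range(len(b)):
        x.append((b[i] - sum(L[i][k] * x[k] for k in range(i))) / L[i][i])
    return x


def solve_upper_t(L: List[List[float]], b: Sequence[float]) -> List[float]:
    """Solve L^T x = b by back substitution."""
    n = len(b)
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (b[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x


def gp_posterior(X_train, y_train, x_star, noise=1e-4,
                 lengthscale=1.0, outputscale=1.0) -> Tuple[float, float]:
    """Zero-mean GP predictive mean and standard deviation at one latent point."""
    n = len(X_train)
    K = [[matern52(X_train[i], X_train[j], lengthscale, outputscale) + (noise if i == j else 0.0)
          for j in range(n)] for i in range(n)]
    L = cholesky(K)  # O(n^3); a real BO loop computes this once per refit and reuses it
    alpha = solve_upper_t(L, solve_lower(L, y_train))  # (K + noise I)^-1 y
    k_star = [matern52(x, x_star, lengthscale, outputscale) for x in X_train]
    mean = sum(k * a for k, a in zip(k_star, alpha))  # O(n) per candidate
    v = solve_lower(L, k_star)  # O(n^2) per candidate
    var = outputscale - sum(vi * vi for vi in v)  # k(x*, x*) = outputscale
    return mean, math.sqrt(max(var, 0.0))
```

Usage, with hypothetical 2-D latent vectors and standardized property values: `gp_posterior([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]], [1.0, -0.5, 0.3], [0.5, 0.5])`. Near the training points the predicted σ shrinks. Far away, the mean reverts to the prior (0) and σ approaches the output scale.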

Table 2: Performance Metrics in Recent Molecular BO Studies (2023-2024)

| Study (Source) | Latent Dim. | Library Size | BO Iterations | Property Improvement (%) vs. Random | Key Acquisition Function |

|---|---|---|---|---|---|

| Gómez-Bombarelli et al. (2024) | 196 | 250k | 50 | 450% (LogP) | Expected Improvement (EI) |

| Stokes et al. (2023) | 128 | 1.2M | 40 | 320% (Antibiotic Activity) | Upper Confidence Bound (UCB) |

| Wang & Zhang (2024) | 256 | 500k | 30 | 280% (Binding Affinity pIC50) | Predictive Entropy Search (PES) |

Research Reagent Solutions & Essential Materials

Table 3: Scientist's Toolkit for Molecular Latent Space BO

| Item | Function & Rationale |

|---|---|

| RDKit | Open-source cheminformatics toolkit for molecule manipulation, fingerprint generation, and descriptor calculation. Essential for preprocessing SMILES strings. |

| GPyTorch/BoTorch | PyTorch-based libraries for flexible GP modeling and modern Bayesian optimization, including acquisition functions. Enables GPU acceleration. |

| TensorFlow/PyTorch | Deep learning frameworks for building and training variational autoencoders (VAEs) on molecular datasets (e.g., ZINC, ChEMBL). |

| DockStream/OpenEye | Molecular docking suites for in silico evaluation of binding affinity, providing the "expensive" objective function for the surrogate model. |

| Jupyter Lab/Notebook | Interactive computing environment for prototyping BO loops, visualizing latent space projections, and analyzing results. |

| PubChem/ChEMBL | Public repositories of bioactivity data (e.g., pIC50, Ki) for training initial surrogate models or validating proposed molecules. |

Experimental Protocols

Protocol: End-to-End Bayesian Optimization for De Novo Molecule Design

Objective: To discover novel molecules with maximized predicted binding affinity against a target protein (e.g., SARS-CoV-2 Mpro).

Materials:

- Pre-trained molecular VAE (e.g., JT-VAE, ChemVAE).

- Initial dataset of 50-100 molecules with docking scores for the target.

- Computing cluster with GPU (for VAE/GP) and access to docking software.

Procedure:

1. Latent Space Initialization:

- Encode all molecules in the initial dataset using the pre-trained VAE encoder to obtain latent vectors Z_init.

- Pair each latent vector with its corresponding experimental or docking score y_init to form the initial training set D_0 = {Z_init, y_init}.

2. Surrogate Model Training:

- Initialize a Gaussian Process model with a Matérn 5/2 kernel.

- Train the GP on D_0 by maximizing the marginal log-likelihood to learn the kernel hyperparameters (length scale, noise).

3. Acquisition and Selection:

- Using the trained GP, evaluate the chosen acquisition function (e.g., Expected Improvement) over 10,000 points sampled randomly from the latent space prior.

- Select the latent point z_next that maximizes the acquisition function: z_next = argmax_z α(z; D_t).

4. Molecule Decoding & Validation:

- Decode z_next using the VAE decoder to generate a SMILES string.

- Check the chemical validity of the molecule using RDKit (e.g., sanitization checks).

- If the molecule is valid, proceed to in silico evaluation (e.g., molecular docking) to obtain the true score y_next. If it is invalid, assign a penalty score and return to Step 3.

5. Bayesian Update Loop:

- Augment the dataset: D_{t+1} = D_t ∪ {(z_next, y_next)}.

- Retrain or update the GP surrogate model on D_{t+1}.

- Repeat Steps 3-5 for a predetermined number of iterations (e.g., 20-50 cycles).

6. Post-hoc Analysis:

- Cluster the final proposed molecules in latent space.

- Assess chemical diversity (e.g., using Tanimoto similarity on Morgan fingerprints).

- Select top candidates for synthesis and in vitro testing.
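The Acquisition and Selection step can be sketched in pure Python as closed-form Expected Improvement scored over random draws from an N(0, I) latent prior. This is a sketch under stated assumptions:

- `toy_predict` is a hypothetical stand-in for a fitted GP that returns (μ, σ) for a latent vector.
- `best_f` is the incumbent best score.
- The VAE prior is the standard normal.

```python
import math
import random
from typing import Callable, List, Tuple


def expected_improvement(mu: float, sigma: float, best_f: float, xi: float = 0.0) -> float:
    """Closed-form EI for maximization under a Gaussian predictive N(mu, sigma^2)."""
    improvement = mu - best_f - xi
    if sigma <= 0.0:
        return max(improvement, 0.0)
    z = improvement / sigma
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    return improvement * cdf + sigma * pdf


def select_next(predict: Callable[[List[float]], Tuple[float, float]],
                best_f: float, dim: int, n_samples: int = 10_000,
                seed: int = 0) -> Tuple[List[float], float]:
    """Score n_samples draws from the N(0, I) latent prior; return the EI maximizer."""
    rng = random.Random(seed)
    best_z: List[float] = []
    best_a = -math.inf
    for _ in range(n_samples):
        z = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        mu, sigma = predict(z)
        a = expected_improvement(mu, sigma, best_f)
        if a > best_a:
            best_z, best_a = z, a
    return best_z, best_a


def toy_predict(z: List[float]) -> Tuple[float, float]:
    """Hypothetical surrogate: predicted score peaks at z = (1, ..., 1), constant uncertainty."""
    return -sum((zi - 1.0) ** 2 for zi in z), 0.1


z_next, ei_value = select_next(toy_predict, best_f=-0.5, dim=2)
```

Random sampling keeps the sketch simple. With a real GP, the sampled maximizer is often refined further by gradient-based optimization of the acquisition function.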

Protocol: Training a Conditional Molecular Autoencoder for Property-Guided Generation

Objective: To train a VAE that generates molecules conditioned on a desired property range, creating a more informative prior for BO.

Procedure:

1. Data Preparation:

- Curate a dataset of >100k SMILES strings with an associated scalar property (e.g., molecular weight, QED).

- Tokenize the SMILES strings and pad the sequences to a fixed length.

- Normalize the property values to the [0, 1] range.

2. Model Architecture:

- Encoder: A 3-layer bidirectional GRU that maps a SMILES sequence to a mean (μ) and log-variance (logσ²) vector defining the latent distribution q_φ(z|x, c).

- Decoder: A 3-layer GRU that reconstructs the SMILES sequence from a latent sample z.

- Conditioning: Concatenate the normalized property value c to the encoder's final hidden state and to the decoder's initial hidden state.

3. Training:

- Loss function (minimized): L(θ,φ) = λ_r * ReconstructionLoss(x, x') + λ_kl * KL_div(q_φ(z|x,c) || p(z|c)) + λ_prop * MSE(c, c'), where c' is the property value recovered from the model. The KL term must enter with a positive sign: subtracting it would reward the approximate posterior for drifting away from the prior.

- Use the Adam optimizer with a learning rate of 0.0005.

- Train for up to 50 epochs, with early stopping based on validation-set reconstruction accuracy.

4. Validation:

- Measure the validity, uniqueness, and novelty of molecules generated from random latent samples.

- Verify that the mean predicted property of generated molecules correlates with the conditioning input c.
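The final validation check, whether generated molecules track the conditioning input, can be quantified with a Pearson correlation. This is a minimal pure-Python sketch. Both sequences below are hypothetical values: conditioning inputs c, and the mean predicted property of molecules decoded under each c. In practice the second sequence would come from decoding random latent samples and scoring them with a property predictor.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0.0 or syy == 0.0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sxy / math.sqrt(sxx * syy)


# Hypothetical conditioning inputs and mean predicted property of the decoded molecules
conditioning = [0.1, 0.3, 0.5, 0.7, 0.9]
mean_predicted = [0.18, 0.27, 0.49, 0.71, 0.85]
r = pearson_r(conditioning, mean_predicted)
```

A correlation near 1 suggests the decoder honours c. A value near 0 indicates the conditioning signal is being ignored, a known failure mode when the decoder is powerful enough to bypass it.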

Visualizations

Title: Bayesian Optimization Workflow in Molecular Latent Space

Title: GP Surrogate & Acquisition Function Logic

Title: Conditional Molecular Autoencoder (VAE) Architecture

Application Notes: Bayesian Optimization in Molecular Latent Space

Property Optimization

Objective: Precisely tune specific chemical properties (e.g., binding affinity, solubility, logP) of a lead molecule while preserving its core structure.

Bayesian Context: A Gaussian Process (GP) surrogate model maps points in a continuous molecular latent space (e.g., from a Variational Autoencoder) to property predictions. An acquisition function (e.g., Expected Improvement) guides the search towards latent vectors that decode to molecules with improved properties.

Key Applications: Potency enhancement, ADMET (Absorption, Distribution, Metabolism, Excretion, Toxicity) profile improvement, and synthetic accessibility (SA) score optimization.

Scaffold Hopping

Objective: Discover novel molecular cores (scaffolds) that retain the desired bioactivity of a known hit but are chemically distinct, potentially offering new IP space or improved properties.

Bayesian Context: The algorithm explores diverse regions of the latent space while constrained by a high predicted activity. The acquisition function balances exploitation (high activity) with exploration (distance from known actives in latent space).

Key Applications: Overcoming existing patents, improving selectivity, or moving away from problematic chemotypes.

De Novo Design

Objective: Generate entirely new, valid molecular structures from scratch that meet a complex multi-property objective.

Bayesian Context: The GP model learns the complex, high-dimensional relationship between the latent representation and multiple target properties. Multi-objective or constrained Bayesian optimization navigates the latent space to propose novel latent points that decode to molecules satisfying all criteria.

Key Applications: Designing novel hit compounds against new targets, generating molecules for unexplored chemical spaces, and multi-parameter optimization (e.g., activity, solubility, metabolic stability).

Experimental Protocols

Protocol 1: Bayesian Optimization for logP Optimization

Aim: Reduce the lipophilicity (logP) of a lead compound.

- Dataset Preparation: Assemble a dataset of molecules (N~1000) with calculated logP values. Include the lead compound.

- Latent Space Encoding: Train a molecular VAE (e.g., using SMILES or graph representation). Encode all molecules into latent vectors z.

- Surrogate Model Initialization: Fit a Gaussian Process (GP) regression model to a subset of the data (initial training set of 50-100 points: z → logP).

- Optimization Loop:

a. Proposal: Use the Expected Improvement (EI) acquisition function on the GP model to select the next latent point z* to evaluate. Because the aim is to reduce logP, use the minimization form of EI (equivalently, maximize EI on -logP).

b. Decoding & Validation: Decode z* to its molecular structure and check chemical validity (e.g., via RDKit).

c. Property Calculation: Compute the logP of the proposed molecule.

d. Model Update: Augment the GP training data with the new (z*, logP) pair and re-train the GP.

- Termination: Stop after a fixed number of iterations (e.g., 200) or when logP improvement plateaus.

- Output: List of proposed molecules with optimized logP.
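The termination rule (fixed budget or plateau) can be written as a small check on the best-so-far history. This is a minimal sketch for a logP-minimization run. The `patience` and `min_delta` values are hypothetical defaults chosen for illustration; the protocol itself does not specify them.

```python
from typing import List, Sequence


def running_min(values: Sequence[float]) -> List[float]:
    """Best (lowest) logP found after each iteration."""
    out: List[float] = []
    best = float("inf")
    for v in values:
        best = min(best, v)
        out.append(best)
    return out


def should_stop(best_history: Sequence[float], max_iters: int = 200,
                patience: int = 20, min_delta: float = 0.05) -> bool:
    """Stop when the iteration budget is spent, or when the best logP has dropped
    by less than min_delta over the last `patience` iterations (a plateau)."""
    if len(best_history) >= max_iters:
        return True
    if len(best_history) <= patience:
        return False
    improvement = best_history[-patience - 1] - best_history[-1]  # positive = lower logP
    return improvement < min_delta
```

Passing `running_min(observed_logp)` rather than the raw observations keeps the plateau test insensitive to occasional poor proposals.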

Protocol 2: Scaffold Hopping via Diversity-Guided Bayesian Optimization

Aim: Identify novel scaffolds with predicted pIC50 > 7.0.

- Seed & Reference: Start with a known active molecule (seed). Define its latent vector z_seed.

- Model Setup: Train a GP model on an existing structure-activity relationship (SAR) dataset (latent vectors → pIC50).

- Acquisition Function: Utilize a modified acquisition function: α(z) = EI(z) + λ * d(z, z_seed), where d is latent space distance and λ controls diversity pressure.

- Iterative Search: Run Bayesian optimization for 150 iterations, prioritizing high EI but penalizing proximity to z_seed.

- Clustering & Analysis: Cluster the top 50 proposed molecules by molecular fingerprint (ECFP6). Select cluster centroids for each major cluster not containing the seed.

- Output: A set of diverse candidate scaffolds with predicted activity.
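The modified acquisition function α(z) = EI(z) + λ · d(z, z_seed) can be sketched directly. The assumptions are:

- d is Euclidean distance in latent space (one possible choice).
- EI values are precomputed by the GP.
- The candidate list, the seed vector, and λ are hypothetical illustrative inputs.

```python
import math
from typing import List, Sequence, Tuple


def latent_distance(z: Sequence[float], z_seed: Sequence[float]) -> float:
    """Euclidean distance between two latent vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(z, z_seed)))


def diversity_acquisition(ei: float, z: Sequence[float], z_seed: Sequence[float],
                          lam: float) -> float:
    """alpha(z) = EI(z) + lam * d(z, z_seed); larger lam pushes proposals away from the seed."""
    return ei + lam * latent_distance(z, z_seed)


def rank_candidates(candidates: List[Tuple[List[float], float]],
                    z_seed: Sequence[float], lam: float) -> List[int]:
    """Indices of (z, EI) candidates, best first under the diversity-augmented acquisition."""
    scores = [diversity_acquisition(ei, z, z_seed, lam) for z, ei in candidates]
    return sorted(range(len(candidates)), key=lambda i: scores[i], reverse=True)


z_seed = [0.0, 0.0]
cands = [([0.1, 0.0], 0.50), ([3.0, 0.0], 0.45), ([0.0, 0.5], 0.60)]
order = rank_candidates(cands, z_seed, lam=0.1)
```

With λ = 0 the ranking reduces to plain EI. Because the distance term is unbounded, the search should still be restricted to latent bounds where the decoder is reliable.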

Protocol 3: Multi-Objective De Novo Design for a Novel Kinase Inhibitor

Aim: Generate novel molecules with pKi > 8.0, logD between 2-3, and no PAINS (Pan-Assay Interference Compounds) alerts.

- Objective Definition: Formulate as a constrained optimization: Maximize pKi, subject to 2.0 ≤ logD ≤ 3.0 and PAINS = 0.

- Prior Data: Train a VAE on a large, diverse chemical library (e.g., ChEMBL). Train three separate GP models on relevant bioactivity and property data to predict pKi, logD, and a PAINS risk score from the latent space.

- Constrained BO: Employ a constrained Bayesian optimization algorithm (e.g., using Predictive Entropy Search with Constraints).

- Parallel Exploration: Use a batch acquisition function (e.g., q-EI) to propose 5 latent points per iteration for efficiency.

- Post-Filtering: Decode the top 100 proposed latent vectors. Apply strict structural filters (e.g., medicinal chemistry rules, synthetic accessibility score < 4.0, since lower SA scores indicate easier synthesis).

- Output: A focused virtual library of novel, drug-like, and synthetically tractable kinase inhibitor candidates.
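The protocol names Predictive Entropy Search with Constraints. As a simpler, swapped-in illustration, the sketch below uses constraint-weighted Expected Improvement. This is a different technique from PESC. The acquisition multiplies EI on pKi by the GP's probability that logD falls in the 2.0-3.0 window. It also assumes PAINS is handled as a hard boolean from a substructure filter on the decoded molecule, rather than the GP risk score described above. All GP means and standard deviations are hypothetical inputs.

```python
import math
from typing import Tuple


def norm_cdf(x: float) -> float:
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def norm_pdf(x: float) -> float:
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


def expected_improvement(mu: float, sigma: float, best_f: float) -> float:
    """EI for maximization under N(mu, sigma^2)."""
    if sigma <= 0.0:
        return max(mu - best_f, 0.0)
    z = (mu - best_f) / sigma
    return (mu - best_f) * norm_cdf(z) + sigma * norm_pdf(z)


def prob_in_window(mu: float, sigma: float, lo: float, hi: float) -> float:
    """P(lo <= Y <= hi) for Y ~ N(mu, sigma^2), e.g. the GP's predicted logD."""
    return norm_cdf((hi - mu) / sigma) - norm_cdf((lo - mu) / sigma)


def constrained_ei(pki_mu: float, pki_sigma: float, best_feasible_pki: float,
                   logd_mu: float, logd_sigma: float, pains_free: bool,
                   logd_window: Tuple[float, float] = (2.0, 3.0)) -> float:
    """EI on pKi weighted by P(logD in window); zero if a PAINS alert fired."""
    if not pains_free:
        return 0.0
    lo, hi = logd_window
    return (expected_improvement(pki_mu, pki_sigma, best_feasible_pki)
            * prob_in_window(logd_mu, logd_sigma, lo, hi))
```

The incumbent `best_feasible_pki` should be the best pKi among molecules that already satisfy every constraint. Otherwise the search is anchored to infeasible compounds.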

Table 1: Performance Benchmark of BO Applications in Recent Studies

| Use Case | Algorithm (Surrogate/Acquisition) | Latent Space Model | Key Metric Improvement | Citation Year |

|---|---|---|---|---|

| logP Optimization | GP / Expected Improvement | JT-VAE | 2.1 unit reduction in 50 steps | 2023 |

| Scaffold Hopping | GP / Upper Confidence Bound | Graph VAE | 15 novel scaffolds w/ pIC50 > 7.0 | 2024 |

| De Novo Design (Dual-Objective) | Multi-Task GP / EHVI* | ChemVAE | 82% of generated molecules met both objectives | 2023 |

| Potency & SA Optimization | GP / Probability of Improvement | REINVENT-VAE | pIC50 +0.8, SA Score +1.5 | 2024 |

*EHVI: Expected Hypervolume Improvement

Table 2: Typical Software & Library Stack for Implementation

| Component | Example Tools/Libraries | Primary Function |

|---|---|---|

| Molecular Representation | RDKit, DeepChem | SMILES/Graph handling, descriptor calculation |

| Latent Space Model | JT-VAE, GraphINVENT, MolGAN | Encoding molecules to continuous vectors |

| Bayesian Optimization | BoTorch, GPyOpt, Scikit-Optimize | Surrogate modeling & acquisition function optimization |

| Cheminformatics | mordred, OEChem, Pipeline Pilot | High-throughput property calculation |

| High-Performance Computing | CUDA, SLURM, Docker | Accelerating training & sampling |

Visualizations

Title: Bayesian Optimization Workflow in Molecular Latent Space

Title: De Novo Design System Architecture

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials for Bayesian Molecular Optimization Experiments

| Item/Category | Example/Supplier | Function in Experiment |

|---|---|---|

| Benchmark Datasets | MOSES, GuacaMol, ChEMBL | Provides standardized molecular datasets for training VAEs and benchmarking optimization algorithms. |

| Pre-trained VAE Models | ZINC250k VAE, PubChem VAE | Off-the-shelf molecular latent space models, saving computational time for encoding/decoding. |

| Property Prediction Services | OCHEM, SwissADME, TIGER | Web-based or API-accessible tools for rapid calculation of ADMET and physicochemical properties. |

| BO Software Framework | BoTorch (PyTorch), Trieste (TensorFlow) | Provides robust, GPU-accelerated implementations of GP models and acquisition functions. |

| Chemical Validation Suite | RDKit, KNIME, Jupyter Cheminformatics | Enables validation of chemical structure integrity, filtering, and visualization of results. |

| High-Throughput Compute Environment | Google Cloud AI Platform, AWS ParallelCluster | Cloud or on-premise cluster for parallel VAE training and large-scale BO iteration runs. |

Building and Navigating the Map: A Step-by-Step Guide to Implementation

This protocol details the critical first step for a Bayesian optimization (BO) pipeline in molecular latent space research: selecting and training a model to generate continuous vector representations (embeddings) of discrete molecular structures. The quality of this embedding directly dictates the performance of the subsequent BO loop in navigating chemical space for desired properties.

Primary models fall into two categories: string-based (e.g., SMILES) using Variational Autoencoders (VAEs) and graph-based using Graph Neural Networks (GNNs). The choice involves trade-offs between representational fidelity, ease of training, and latent space smoothness.

Table 1: Comparison of Primary Molecular Embedding Models

| Model Type | Representation | Key Architecture | Training Data Scale | Latent Space Smoothness | Sample Reconstruction Rate | Key Challenge |

|---|---|---|---|---|---|---|

| Character VAE | SMILES String | RNN (LSTM/GRU) Encoder-Decoder | ~100k - 1M molecules | Moderate (can have "holes") | ~60-85% | Invalid SMILES generation |

| Syntax VAE | SMILES String | Tree/Graph Grammar Encoder-Decoder | ~100k - 500k molecules | High (grammar-constrained) | ~90-99% | Complex grammar definition |

| Graph VAE | Molecular Graph | GNN (GCN, GAT, MPNN) Encoder, MLP Decoder | ~50k - 500k molecules | High (structure-aware) | ~95-100% | Computationally intensive |

| JT-VAE | Junction Tree | Dual GNN (Tree + Graph) Encoder-Decoder | ~250k - 1M+ molecules | Very High (scaffold-aware) | ~99% | Complex two-phase training |

Detailed Protocols

Protocol 1: Training a Character-Based VAE on SMILES Data

This protocol generates a continuous latent space from SMILES strings using an RNN-based VAE.

Materials & Reagents:

- Dataset: A subset of ZINC20 (e.g., ~2M commercially available compounds) or a ChEMBL29 subset.

- Software: RDKit (v2023.09.5), PyTorch (v2.1.0) or TensorFlow (v2.13.0), CUDA Toolkit (v12.1).

- Hardware: GPU with ≥12GB VRAM (e.g., NVIDIA V100, RTX 3090/4090).

Procedure:

- Data Preprocessing:

- Standardize molecules using RDKit (sanitization, neutralization, removal of salts).

- Filter by molecular weight (100-500 Da) and logP.

- Canonicalize SMILES and set a maximum length (e.g., 120 characters).

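- Build a token vocabulary for one-hot encoding that covers all allowed symbols (e.g., 'C', 'c', '(', ')', '=', 'N'). Treat multi-character tokens such as 'Cl', 'Br', and bracketed atoms like '[nH]' as single symbols.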
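The tokenization and one-hot steps can be sketched in pure Python. The regular expression is a simplified, assumed SMILES grammar covering these token types:

- bracket atoms
- two-letter halogens
- %nn ring closures
- common single-letter atoms
- bond and branch symbols
- ring digits

It is not a complete SMILES specification. The `<pad>` token name is likewise an illustrative choice.

```python
import re
from typing import Dict, List, Sequence

# Simplified SMILES token pattern (assumption, not a full grammar)
SMILES_TOKEN_RE = re.compile(r"(\[[^\]]+\]|Br|Cl|%\d{2}|[BCNOSPFIbcnosp]|[=#\-+()/\\@.:~*$]|\d)")
PAD = "<pad>"


def tokenize(smiles: str) -> List[str]:
    """Split a SMILES string into tokens; fail loudly on unrecognized characters."""
    tokens = SMILES_TOKEN_RE.findall(smiles)
    if "".join(tokens) != smiles:
        raise ValueError(f"unrecognized characters in {smiles!r}")
    return tokens


def build_vocab(smiles_list: Sequence[str]) -> Dict[str, int]:
    """Map each token to an index; index 0 is reserved for padding."""
    symbols = sorted({tok for s in smiles_list for tok in tokenize(s)})
    vocab = {PAD: 0}
    for tok in symbols:
        vocab[tok] = len(vocab)
    return vocab


def one_hot(smiles: str, vocab: Dict[str, int], max_len: int) -> List[List[int]]:
    """max_len x |vocab| one-hot matrix, right-padded with the pad token."""
    tokens = tokenize(smiles)
    if len(tokens) > max_len:
        raise ValueError(f"{smiles!r} has {len(tokens)} tokens > max_len={max_len}")
    tokens += [PAD] * (max_len - len(tokens))
    matrix = []
    for tok in tokens:
        row = [0] * len(vocab)
        row[vocab[tok]] = 1
        matrix.append(row)
    return matrix
```

With multi-character tokens, the length limit (e.g., 120) applies to tokens rather than raw characters. Molecules exceeding it are filtered out instead of truncated, since a truncated SMILES is usually invalid.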

Model Architecture Definition:

- Encoder: 3-layer bidirectional GRU. Input: one-hot SMILES. Output: hidden state → mapped to mean (μ) and log-variance (logσ²) vectors via a linear layer (latent dimension d=512).

- Latent Sampling: z = μ + exp(logσ²/2) * ε, where ε ~ N(0, I).

- Decoder: 3-layer unidirectional GRU with attention mechanism. Input: latent vector z (repeated). Output: probability distribution over vocabulary for each character position.

- Loss Function: β-VAE loss: L = L_recon (cross-entropy) + β * L_KLD, where L_KLD = -0.5 * Σ(1 + logσ² - μ² - exp(logσ²)). Start with β=0.001, anneal if needed.

Training:

- Optimizer: Adam (lr=1e-3, batch_size=512).

- Early stopping on validation reconstruction accuracy (patience=20 epochs).

- Monitor reconstruction accuracy, the validity and uniqueness of sampled SMILES, and the KL divergence.
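The reparameterization step and the β-VAE loss from the architecture definition can be written out in pure Python for a single molecule. This is a minimal sketch. The decoder output is represented as a list of per-position probability distributions. `linear_beta` is a hypothetical KL-annealing schedule illustrating "anneal if needed"; the protocol does not specify it. A PyTorch implementation would compute the same quantities on batched tensors.

```python
import math
import random
from typing import List, Sequence


def reparameterize(mu: Sequence[float], logvar: Sequence[float],
                   rng: random.Random) -> List[float]:
    """z = mu + exp(logvar / 2) * eps, with eps ~ N(0, I)."""
    return [m + math.exp(lv / 2.0) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, logvar)]


def kl_divergence(mu: Sequence[float], logvar: Sequence[float]) -> float:
    """KL(N(mu, diag(exp(logvar))) || N(0, I)) = -0.5 * sum(1 + logvar - mu^2 - exp(logvar))."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, logvar))


def reconstruction_ce(probs: Sequence[Sequence[float]], targets: Sequence[int]) -> float:
    """Summed cross-entropy; probs[t] is the decoder's softmax output at position t."""
    return -sum(math.log(p[t]) for p, t in zip(probs, targets))


def beta_vae_loss(probs, targets, mu, logvar, beta: float = 0.001) -> float:
    """L = L_recon + beta * L_KLD (both terms are minimized)."""
    return reconstruction_ce(probs, targets) + beta * kl_divergence(mu, logvar)


def linear_beta(epoch: int, beta_max: float = 0.001, warmup_epochs: int = 10) -> float:
    """Hypothetical annealing schedule: ramp beta linearly from 0 to beta_max."""
    return beta_max * min(1.0, epoch / warmup_epochs)
```

Keeping β small at first lets the decoder learn to use z before the KL term pulls the posterior toward the prior. This is a common way to reduce posterior collapse in RNN decoders.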

Protocol 2: Training a Graph Convolutional VAE (GVAE)

This protocol uses a GNN to encode molecular graphs directly.

Materials & Reagents:

- Dataset: QM9 (∼133k molecules with quantum properties) for proof-of-concept, or a filtered ZINC subset.

- Software: RDKit, PyTorch Geometric (v2.4.0), DGL-LifeSci (optional).

Procedure:

- Graph Representation:

- Represent each molecule as a graph G=(V, E).

- Node Features (v∈V): Atom type (one-hot), degree, hybridization, valence, aromaticity.

- Edge Features (e∈E): Bond type (single, double, triple, aromatic), conjugation.

Model Architecture (GVAE):

- Encoder: 5-layer Message Passing Neural Network (MPNN). Readout: global mean pool of final node features → produces μ and logσ² (d=128).

- Decoder: A simple feed-forward network that predicts the adjacency matrix and node/edge feature tensors in a single shot. This one-shot decoder is easy to implement but often produces invalid graphs; for a more robust decoder, use a sequential graph generation model.

Training & Evaluation:

- Loss: Similar β-VAE loss, but L_recon is sum of cross-entropy losses for node, edge, and adjacency predictions.

- Validation: Measure property prediction (e.g., logP, QED) from latent space using a simple ridge regression to assess chemical meaningfulness.
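The validation step's ridge-regression probe can be sketched in pure Python using the closed-form solution on centred data. The latent vectors and property values below are synthetic and hypothetical. In practice one would use scikit-learn's `Ridge` and report R² on a held-out split rather than on the training data as here.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def solve(A: Matrix, b: List[float]) -> List[float]:
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [list(row) + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x


def fit_ridge(Z: Sequence[Sequence[float]], y: Sequence[float],
              alpha: float = 1.0) -> Tuple[List[float], float]:
    """Ridge weights w = (Zc^T Zc + alpha I)^-1 Zc^T yc on centred data, plus intercept."""
    n, d = len(Z), len(Z[0])
    z_mean = [sum(row[j] for row in Z) / n for j in range(d)]
    y_mean = sum(y) / n
    Zc = [[row[j] - z_mean[j] for j in range(d)] for row in Z]
    yc = [v - y_mean for v in y]
    A = [[sum(Zc[i][a] * Zc[i][b] for i in range(n)) + (alpha if a == b else 0.0)
          for b in range(d)] for a in range(d)]
    rhs = [sum(Zc[i][a] * yc[i] for i in range(n)) for a in range(d)]
    w = solve(A, rhs)
    intercept = y_mean - sum(wj * mj for wj, mj in zip(w, z_mean))
    return w, intercept


def predict(Z: Sequence[Sequence[float]], w: List[float], intercept: float) -> List[float]:
    return [intercept + sum(wj * zj for wj, zj in zip(w, row)) for row in Z]


def r_squared(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    mean = sum(y_true) / len(y_true)
    ss_res = sum((a - b) ** 2 for a, b in zip(y_true, y_pred))
    ss_tot = sum((a - mean) ** 2 for a in y_true)
    return 1.0 - ss_res / ss_tot


# Synthetic latent vectors whose "property" is exactly 2*z0 - z1 + 0.5
Z_latent = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 1.0]]
y_prop = [2 * z0 - z1 + 0.5 for z0, z1 in Z_latent]
w, b0 = fit_ridge(Z_latent, y_prop, alpha=1e-8)
```

A high held-out R² for simple descriptors such as logP or QED suggests the latent space encodes chemically meaningful directions. A low value suggests the embedding may be poorly suited to GP-based BO.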

The Scientist's Toolkit

Table 2: Key Research Reagent Solutions for Molecular Embedding

| Item | Function/Description | Example Vendor/Resource |

|---|---|---|

| RDKit | Open-source cheminformatics toolkit for molecule standardization, feature extraction, and descriptor calculation. | www.rdkit.org |

| PyTorch Geometric | PyTorch library for building and training GNNs on molecular graph data. | pytorch-geometric.readthedocs.io |

| DGL-LifeSci | Deep Graph Library (DGL) toolkit for life science applications, including pre-built GNN models. | www.dgl.ai |

| MOSES | Benchmarking platform for molecular generation models; provides datasets and evaluation metrics. | github.com/molecularsets/moses |

| Molecular Transformer | Pre-trained model for high-fidelity SMILES-to-SMILES translation, useful for transfer learning. | github.com/pschwllr/MolecularTransformer |

| ZINC Database | Free database of commercially available compounds for training and virtual screening. | zinc20.docking.org |

| ChEMBL Database | Manually curated database of bioactive molecules with target annotations. | www.ebi.ac.uk/chembl/ |

Visualizations

Title: Molecular Embedding Model Selection Workflow

Title: Character VAE Architecture for SMILES

Within a Bayesian optimization (BO) framework for molecular design in latent space, the objective function is the critical link between the generative model and desired experimental outcomes. Traditionally dominated by calculated target affinity (e.g., docking scores), modern objective functions must balance potency with pharmacokinetic and safety profiles, commonly summarized as ADMET (Absorption, Distribution, Metabolism, Excretion, Toxicity). This document provides protocols for constructing a multi-parameter objective function suitable for guiding BO in drug discovery.

Components of a Composite Objective Function

A robust objective function, f(m), for a molecule m is typically a weighted sum of multiple predicted properties. The non-negative coefficients (wᵢ) are determined by project priorities, and penalty terms are subtracted.

f(m) = w₁ * [Normalized Binding Affinity] + w₂ * [ADMET Score] - w₃ * [Synthetic Accessibility Penalty]

Table 1: Typical Components of a Molecular Optimization Objective Function

| Component | Description | Common Predictive Tools (2024-2025) | Optimal Range/Goal |

|---|---|---|---|

| Target Affinity | Negative logarithm of predicted binding constant (pKᵢ, pIC₅₀). | AutoDock Vina, Glide, Gnina, ΔΔG ML models (e.g., PIGNet2). | pIC₅₀ > 6.3 (500 nM) |

| Lipinski’s Rule of Five | Simple filter for oral bioavailability. | RDKit descriptors. | ≤ 1 violation |

| Solubility (LogS) | Aqueous solubility prediction (LogS in log mol/L). | Models trained on AqSolDB; graph neural networks (GNN). | LogS > -4 (∼100 µM) |

| Hepatotoxicity | Risk of drug-induced liver injury (DILI). | DeepTox, admetSAR 3.0. | Low risk probability |

| hERG Inhibition | Cardiotoxicity risk prediction (pIC₅₀ for hERG). | Pred-hERG 5.0, chemprop models. | pIC₅₀ < 5.0 (low risk) |

| CYP450 Inhibition | Inhibition potential for Cytochromes P450 (e.g., 3A4, 2D6). | FAME 3, FEP-based predictions. | pIC₅₀ < 5.0 for key isoforms |

| Synthetic Accessibility | Ease of synthesis score. | RAscore 2, SAScore. | < 4 (easier to synthesize) |

Protocol: Constructing a Multi-Parameter Objective Function

Materials & Reagents

- Research Reagent Solutions:

- Software Suites: OpenEye Toolkit, Schrödinger Suite, Cresset Flare.

- Python Libraries: RDKit (descriptor calculation), PyTorch/TensorFlow (for running ML models), scikit-learn (for normalization).

- ADMET Prediction APIs/Models: ADMET-AI (Chemprop), the ChEMBL database (e.g., ChEMBL32) for benchmarking, and platforms such as the ATOM Modeling PipeLine.

- Computational Resources: GPU cluster for high-throughput docking (e.g., with Vina-GPU) and neural network inference.

Procedure

- Define Property Set: Select 4-6 key ADMET endpoints relevant to your target therapeutic area (see Table 1).

- Data Curation & Model Selection: For each property, curate a high-quality test set of known molecules with experimental data. Benchmark selected predictive tools (e.g., ADMET-AI vs. admetSAR) on this set.

- Normalization: Scale each property to a common range, typically [0,1] or [-1,1], where 1 is most desirable. Use sigmoidal or step functions for threshold-based properties (e.g., hERG inhibition).

- Example (Normalized Affinity): Norm_pIC50 = (pIC50_pred - 5.0) / (10.0 - 5.0), clipped to [0, 1].

- Weight Assignment: Assign weights (wᵢ) using methods like Analytic Hierarchy Process (AHP) or based on stage-specific priorities (e.g., lead identification vs. lead optimization).

- Implement Penalty Terms: Add negative terms for property violations (e.g., -1.0 * (hERG_risk > 0.7)).

- Validation: Test the composite function on a set of known active and inactive compounds to confirm that it ranks actives higher.

- Integration with BO: Implement f(m) as a callable function within the BO loop, where m is a latent vector decoded to a molecule, followed by property prediction.
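The procedure above can be condensed into one callable: normalized affinity, a simple ADMET score, a subtracted synthetic-accessibility penalty, and the hard hERG penalty. This is a sketch under several assumptions, all hypothetical illustrative choices rather than recommended values:

- the weights (0.5, 0.3, 0.2);
- the sigmoid steepness for the LogS threshold;
- averaging solubility and hERG safety into one ADMET score;
- `herg_risk` given as a probability from some classifier.

The SAScore normalization uses its 1 (easy) to 10 (hard) scale.

```python
import math
from typing import Tuple


def clip01(x: float) -> float:
    return min(1.0, max(0.0, x))


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def composite_objective(pic50: float, log_s: float, herg_risk: float, sa_score: float,
                        weights: Tuple[float, float, float] = (0.5, 0.3, 0.2)) -> float:
    """f(m) = w1*affinity + w2*ADMET - w3*SA_penalty, minus 1.0 if hERG risk > 0.7."""
    w1, w2, w3 = weights
    affinity = clip01((pic50 - 5.0) / (10.0 - 5.0))  # Norm_pIC50 as in the procedure
    solubility = sigmoid(2.0 * (log_s + 4.0))  # soft threshold centred on LogS = -4
    admet = 0.5 * (solubility + (1.0 - herg_risk))  # mean of two desirabilities
    sa_penalty = clip01((sa_score - 1.0) / 9.0)  # SAScore: 1 (easy) to 10 (hard)
    f = w1 * affinity + w2 * admet - w3 * sa_penalty
    if herg_risk > 0.7:  # hard penalty term: -1.0 * (hERG_risk > 0.7)
        f -= 1.0
    return f
```

In a BO loop, this function would be applied after decoding the latent vector and running the property predictors. The GP then models f(m) as a single scalar objective.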

Protocol: High-Throughput Virtual Screening Workflow for Objective Function Data

Procedure

- Molecular Input: Receive a batch of 10,000-1,000,000 molecules in SMILES format from the generative model or a library.

- Preprocessing: Standardize structures, generate tautomers/protomers (e.g., with Epik), and perform conformational sampling (e.g., with OMEGA).

- Parallelized Docking: Execute docking against a prepared protein target grid using a tool like Vina-GPU or FRED. Output the top scoring pose and its score.

- ADMET Prediction Pipeline: For all molecules passing an affinity threshold (e.g., docking score < -9.0 kcal/mol), run batch ADMET predictions using pre-trained models via a pipeline script.

- Objective Function Calculation: Apply the composite function f(m) defined in the multi-parameter protocol above to each molecule using the collected data.

- Ranking & Selection: Rank molecules by f(m) and select the top 0.1% for visual inspection and subsequent BO acquisition function analysis.
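The threshold-then-rank logic of the last steps can be sketched as follows. The assumptions are:

- Docking scores arrive as a mapping from molecule identifier to kcal/mol.
- `objective` is any callable returning f(m).
- "Top 0.1%" refers to the molecules that passed the docking threshold, with at least one selected.

The identifiers and scores in the usage example are hypothetical.

```python
import math
from typing import Callable, Dict, List


def rank_and_select(docking: Dict[str, float], objective: Callable[[str], float],
                    dock_threshold: float = -9.0, top_fraction: float = 0.001) -> List[str]:
    """Keep molecules with docking score below the threshold, rank by f(m), return the top fraction."""
    passed = [mol for mol, score in docking.items() if score < dock_threshold]
    if not passed:
        return []
    ranked = sorted(passed, key=objective, reverse=True)
    k = max(1, math.ceil(top_fraction * len(ranked)))
    return ranked[:k]


# Hypothetical batch: 2000 molecules with increasingly favourable docking scores
docking = {f"mol{i}": -5.0 - 0.01 * i for i in range(2000)}
selected = rank_and_select(docking, objective=lambda mol: -docking[mol])
```

Filtering on docking before running ADMET models mirrors the pipeline's cost ordering: only molecules that pass the cheaper gate incur the additional predictions.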


The Scientist's Toolkit

Table 2: Essential Reagents & Tools for Objective Function Implementation

| Item | Category | Function in Protocol |

|---|---|---|

| RDKit | Open-source Cheminformatics Library | Calculates molecular descriptors, rule-based filters (Lipinski's), and fingerprints for ML input. |

| AutoDock Vina/GNINA | Docking Software | Provides fast, structure-based binding affinity estimates for the objective function. |

| ADMET-AI (Chemprop) | ML Prediction Platform | Offers state-of-the-art graph neural network models for various ADMET endpoints. |

| OMEGA (OpenEye) | Conformational Generator | Produces representative 3D conformers for docking and 3D property calculation. |

| Python Scikit-learn | ML Library | Used for data normalization, scaling, and potentially training custom surrogate models. |

| GPU Computing Cluster | Hardware | Enables high-throughput parallel execution of docking and neural network predictions. |

| Benchmarking Dataset (e.g., from ChEMBL) | Reference Data | Essential for validating and calibrating each component of the predictive pipeline. |

This protocol details the critical third step in a comprehensive Bayesian optimization (BO) framework for molecular discovery in latent spaces. Within the thesis on "Advancing De Novo Molecular Design via Bayesian Optimization in Deep Latent Spaces," this step focuses on the selection and configuration of the core BO algorithm that operates on the encoded molecular representations. This component is responsible for intelligently navigating the latent space to propose candidates with optimized properties, balancing exploration and exploitation.

Core Algorithm Selection & Quantitative Comparison

Selecting the acquisition function and surrogate model is paramount. The following table summarizes current standard and advanced options, based on recent benchmarking studies in cheminformatics.

Table 1: Bayesian Optimization Core Components Comparison

| Component | Options | Key Characteristics | Best For | Computational Cost |

|---|---|---|---|---|

| Surrogate Model | Gaussian Process (GP) | Strong probabilistic uncertainty quantification. Works well in low- to moderate-dimensional spaces with up to roughly 1000 observations. | Small, data-efficient optimization loops. | O(n³) scaling with samples. |

| | Sparse Gaussian Process | Approximates the full GP using m inducing points. | Larger observation sets, where exact GP inference becomes too costly. | Reduces to O(m²n), m << n. |

| | Bayesian Neural Network (BNN) | Highly flexible; scales to very high dimensions. | Very large, complex latent spaces (e.g., from Transformers). | High per-iteration cost. |

| | Deep Kernel Learning (DKL) | Combines a neural-network feature extractor with a GP. | Capturing complex features in latent space. | Moderate-High. |

| Acquisition Function | Expected Improvement (EI) | Expected gain over the current best observation. Baseline standard. | General-purpose optimization. | Low. |

| | Upper Confidence Bound (UCB) | Explicit exploration parameter (β). | Tunable exploration/exploitation. | Low. |

| | Predictive Entropy Search (PES) | Maximizes information gain about the location of the optimum. | Very data-efficient, global optimization. | High. |

| | q-EI / q-UCB (Batch) | Proposes a batch of points in parallel. | Parallelized experimental settings (e.g., batch synthesis). | Moderate. |

Detailed Protocol: Configuring a DKL-UCB Optimization Core

This protocol outlines the setup for a robust BO core using Deep Kernel Learning (DKL) and the Upper Confidence Bound (UCB) acquisition function, suitable for medium-to-high dimensional latent spaces common in molecular autoencoders.

Materials & Software Requirements

The Scientist's Toolkit: Research Reagent Solutions

| Item / Software | Function in BO Core Configuration |

|---|---|

| PyTorch | Deep learning framework for building DKL model and enabling GPU acceleration. |

| GPyTorch | Library for flexible and efficient Gaussian process models, integral to DKL. |

| BoTorch | Bayesian optimization library built on PyTorch, provides acquisition functions and optimization loops. |

| RDKit | For final decoding of latent points back to molecular structures and calculating simple properties. |

| Pre-trained Molecular Autoencoder | Provides the latent space Z and the decoder D(z). (From Step 2 of the overall thesis). |

| Property Prediction Model f(z) | A separate model (e.g., a feed-forward network) mapping latent points to the target property (e.g., binding affinity). |

| Initial Dataset {z_i, y_i} | A set of latent vectors (z_i) and their corresponding computed property values (y_i). Size: typically 100-500 points. |

Procedure

1. Initialization:

- Input: initial latent vectors Z_init (size n × d) and their property scores Y_init (size n × 1).

- Standardize Y_init to zero mean and unit variance.

- Define the latent space bounds, typically ±3 standard deviations from the mean of the encoded training data.

2. DKL Surrogate Model Configuration:

- Feature Extractor: Use a 2-3 layer fully connected neural network with ReLU activations. The input dimension is d (the latent space dimension), and the output dimension is a learned representation (e.g., 32-128).

- Base Kernel: Attach a Matérn 5/2 kernel to the output of the feature extractor.

- Likelihood: Use a GaussianLikelihood to model observation noise.

- Training: Train the DKL model on {Z_init, Y_init} for 100-200 epochs using the Adam optimizer, maximizing the marginal log likelihood.

3. Acquisition Function Configuration:

- Select Upper Confidence Bound (UCB) and set the exploration parameter β. A common schedule is β_t = 0.2 * d * log(2t), where d is the latent dimension and t is the iteration number.

- Define the acquisition optimizer: use BoTorch's optimize_acqf with gradient-based optimization for q=1 (sequential) or q>1 (batch). Use multiple random restarts to avoid local maxima.

4. Single BO Iteration Loop:

- Conditioning: Condition the DKL model on all observed data {Z_obs, Y_obs}.

- Optimization: Find z_next = argmax UCB(z) within the defined latent bounds.

- Decoding & Validation: Decode z_next to a molecular structure M_next using the decoder D(z_next).

- Property Evaluation: Compute the target property y_next for M_next using an in silico simulator (e.g., docking, QSAR model) or an in vitro assay (external to this computational loop).

- Data Augmentation: Append the new pair {z_next, y_next} to the observed dataset.

5. Termination:

- Continue the loop until a performance threshold is met, the iteration budget is exhausted, or convergence is detected (no improvement in y_best over several iterations).
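The bounds, β schedule, and multi-restart acquisition optimization described above can be illustrated without a DKL model. This is a pure-Python sketch, not a substitute for `optimize_acqf`. It uses projected gradient ascent with finite-difference gradients from random starts. UCB takes the form μ(z) + sqrt(β)·σ(z), which is the convention BoTorch's `UpperConfidenceBound` documents. `toy_posterior` is a hypothetical stand-in for the trained DKL model, and the encoded vectors are made-up values.

```python
import math
import random
import statistics
from typing import Callable, List, Sequence, Tuple

Bounds = List[Tuple[float, float]]


def latent_bounds(Z: Sequence[Sequence[float]], k: float = 3.0) -> Bounds:
    """Per-dimension mean +/- k standard deviations of the encoded training data."""
    out: Bounds = []
    for col in zip(*Z):
        m, s = statistics.fmean(col), statistics.pstdev(col)
        out.append((m - k * s, m + k * s))
    return out


def beta_schedule(t: int, d: int) -> float:
    """beta_t = 0.2 * d * log(2t), for iteration t >= 1."""
    return 0.2 * d * math.log(2 * t)


def ucb(mu: float, sigma: float, beta: float) -> float:
    return mu + math.sqrt(beta) * sigma


def maximize_multistart(acq: Callable[[List[float]], float], bounds: Bounds,
                        n_restarts: int = 8, steps: int = 200, lr: float = 0.05,
                        h: float = 1e-5, seed: int = 0) -> Tuple[List[float], float]:
    """Projected gradient ascent from random starts; gradients by central differences."""
    rng = random.Random(seed)
    best_x: List[float] = []
    best_val = -math.inf
    for _ in range(n_restarts):
        x = [rng.uniform(lo, hi) for lo, hi in bounds]
        for _ in range(steps):
            grad = []
            for i in range(len(x)):
                xp, xm = x[:], x[:]
                xp[i] += h
                xm[i] -= h
                grad.append((acq(xp) - acq(xm)) / (2 * h))
            # Gradient step, then clip back into the latent bounds
            x = [min(hi, max(lo, xi + lr * g)) for xi, g, (lo, hi) in zip(x, grad, bounds)]
        val = acq(x)
        if val > best_val:
            best_x, best_val = x, val
    return best_x, best_val


def toy_posterior(z: List[float]) -> Tuple[float, float]:
    """Hypothetical surrogate: mean peaks at (1, 1), uncertainty largest near the origin."""
    return -sum((zi - 1.0) ** 2 for zi in z), 0.5 * math.exp(-sum(zi * zi for zi in z))


Z_encoded = [[-1.0, 0.5], [0.0, -0.5], [1.0, 1.5]]
bounds = latent_bounds(Z_encoded)
beta = beta_schedule(t=1, d=2)
z_next, value = maximize_multistart(lambda z: ucb(*toy_posterior(z), beta), bounds)
```

Random restarts matter because UCB surfaces over latent spaces are typically multimodal. The best of several local ascents is kept, as `optimize_acqf` does with its restart candidates.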

Visualizations

Bayesian Optimization Core Iterative Workflow

DKL Surrogate Model and UCB Acquisition

In the context of Bayesian Optimization (BO) for molecular design in latent space, the optimization loop is the iterative engine that drives the search for molecules with optimal properties. This step follows the definition of the surrogate model (e.g., Gaussian Process) and acquisition function. The loop consists of querying the latent space for a candidate point, evaluating it through a costly (e.g., wet-lab or high-fidelity simulation) experiment, and updating the surrogate model with this new data. This protocol details the execution of this critical phase for research scientists in computational chemistry and drug development.

Core Protocol: The Iterative Optimization Loop

Prerequisites

- A trained generative model (e.g., Variational Autoencoder) that defines the molecular latent space.

- A surrogate model (e.g., Gaussian Process) pre-trained on initial training data (X_train, y_train).

- A defined acquisition function α(x; θ) (e.g., Expected Improvement, Upper Confidence Bound).

- An experimental or simulation pipeline ready to evaluate candidate molecules.
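Expected Improvement is one concrete choice of α(x; θ), and it has a closed form under a Gaussian posterior. The sketch below assumes maximization. It takes the posterior mean and standard deviation produced by the surrogate, whose hyperparameters are θ. The exploration offset xi and the function name are illustrative.

```python
import math


def expected_improvement(mean, std, best, xi=0.0):
    """Closed-form EI for maximization under a Gaussian posterior N(mean, std^2)."""
    imp = mean - best - xi
    if std <= 0.0:
        return max(imp, 0.0)                       # no uncertainty: plain improvement
    z = imp / std
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    return imp * cdf + std * pdf


# A candidate predicted slightly below the incumbent (pIC50 8.0) but with high uncertainty
# can outscore a confident candidate predicted just above it.
print(expected_improvement(mean=7.9, std=0.6, best=8.0))
print(expected_improvement(mean=8.05, std=0.01, best=8.0))
```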

Detailed Procedure

Cycle n:

Querying (Selecting the Next Candidate):

- Input: Current surrogate model, acquisition function α, latent space bounds.

- Action: Maximize the acquisition function to select the next point to evaluate: x_n = argmax α(x; θ).

- Protocol:

  a. Using an optimizer (e.g., L-BFGS-B or multi-start gradient ascent), find the point x_n in the latent space that maximizes α.

  b. Decode: Pass x_n through the decoder of the generative model to obtain the candidate molecular structure M_n.

  c. Validate: Ensure M_n is chemically valid (e.g., via RDKit sanitization).
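Step a of the querying protocol can be illustrated with a small multi-start projected gradient ascent. This sketch is a stand-in for L-BFGS-B and rests on two assumptions:

- Gradients come from central finite differences rather than autograd.
- The latent bounds are a simple box.

toy_acq is a hypothetical bimodal acquisition surface, chosen to show why random restarts matter.

```python
import numpy as np


def maximize_multistart(acq, bounds, n_starts=16, steps=200, lr=0.2, h=1e-4, seed=0):
    """Multi-start projected gradient ascent of acq(z) inside a box.

    acq: callable mapping a 1-D latent vector to a scalar acquisition value.
    bounds: array of shape (d, 2) holding [low, high] for each latent dimension.
    """
    rng = np.random.default_rng(seed)
    lo, hi = bounds[:, 0], bounds[:, 1]
    best_z, best_val = None, -np.inf
    for _ in range(n_starts):
        z = rng.uniform(lo, hi)                          # random restart
        for _ in range(steps):
            # Central finite-difference gradient, one coordinate at a time.
            grad = np.array([(acq(z + h * e) - acq(z - h * e)) / (2 * h)
                             for e in np.eye(len(z))])
            z = np.clip(z + lr * grad, lo, hi)           # ascent step, projected onto bounds
        val = acq(z)
        if val > best_val:                               # keep the best local optimum
            best_z, best_val = z, val
    return best_z, best_val


def toy_acq(z):
    """Hypothetical acquisition surface: global peak near (1, 1), weaker local peak near (-2, -2)."""
    return np.exp(-0.5 * np.sum((z - 1.0) ** 2)) + 0.5 * np.exp(-0.5 * np.sum((z + 2.0) ** 2))


bounds = np.array([[-3.0, 3.0], [-3.0, 3.0]])
z_next, a_next = maximize_multistart(toy_acq, bounds)
print(z_next.round(3), round(float(a_next), 4))
```

A single start that lands near (-2, -2) would stop at the local peak. Restarting from several random points and keeping the best result is what protects against this.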

Evaluating (Costly Function Evaluation):

- Input: Candidate molecule M_n.

- Action: Obtain the target property value y_n = f(M_n) + ε, where f is the expensive-to-evaluate objective function and ε is measurement noise.

- Experimental Protocol Examples:

  - Binding Affinity (pIC50): Perform a standardized biochemical assay (e.g., a kinase inhibition assay). Protocol: Prepare the compound in DMSO, serially dilute it, incubate it with the target enzyme and substrate, measure the conversion rate, and fit a dose-response curve to derive pIC50.

  - Solubility (LogS): Use a kinetic turbidimetric solubility assay. Protocol: Dissolve the compound in DMSO, add it to aqueous buffer (pH 7.4), and monitor precipitation via light scattering. Take the solubility as the highest concentration reached before precipitation is detected.

  - ADMET Profiling: Run high-throughput in vitro assay panels (e.g., Caco-2 permeability, microsomal stability, hERG inhibition).
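For the binding-affinity example, pIC50 is -log10 of the IC50 in molar units. The sketch below simulates a dilution series with a four-parameter logistic (4PL) model. It then recovers pIC50 by log-linear interpolation at 50% activity. This is a simplified stand-in for the nonlinear 4PL fit that a real assay pipeline would use, and all concentrations and parameters are illustrative.

```python
import math


def four_pl(conc_m, bottom, top, ic50_m, hill):
    """Four-parameter logistic dose-response: % activity at concentration conc_m (molar)."""
    return bottom + (top - bottom) / (1.0 + (conc_m / ic50_m) ** hill)


def pic50_by_interpolation(concs_m, activity_pct):
    """Estimate pIC50 = -log10(IC50 [M]) by log-linear interpolation at 50% activity.

    Assumes activity decreases with concentration and crosses 50% inside the tested range.
    """
    pts = sorted(zip(concs_m, activity_pct))
    for (c1, a1), (c2, a2) in zip(pts, pts[1:]):
        if a1 >= 50.0 >= a2:
            x1, x2 = math.log10(c1), math.log10(c2)
            frac = (a1 - 50.0) / (a1 - a2) if a1 != a2 else 0.0
            return -(x1 + frac * (x2 - x1))
    raise ValueError("50% activity is not bracketed by the tested concentrations")


# Simulated 10-point, 3-fold dilution series for a compound with a true IC50 of 10 nM.
concs = [1e-5 / 3**i for i in range(10)]
acts = [four_pl(c, bottom=0.0, top=100.0, ic50_m=1e-8, hill=1.0) for c in concs]
print(round(pic50_by_interpolation(concs, acts), 2))
```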

Updating (Augmenting the Dataset and Model):

- Input: New data pair (x_n, y_n).

- Action: Update the training dataset and retrain/refit the surrogate model.

- Protocol:

  a. Augment Data: X_train = X_train ∪ {x_n}; y_train = y_train ∪ {y_n}.

  b. Retrain Surrogate: Refit the Gaussian Process (or other model) hyperparameters (length scales, noise variance) on the augmented dataset by maximizing the log marginal likelihood (maximum likelihood estimation, MLE).

  c. Convergence Check: Determine whether a stopping criterion is met (see Table 2). If not, initiate Cycle n+1.
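The refit in step b maximizes the GP log marginal likelihood over the hyperparameters. The sketch below assumes an RBF kernel with unit signal variance on standardized targets. It uses an exhaustive grid over length scale and noise variance purely for transparency; GP libraries normally optimize the same objective with gradients. The data are synthetic.

```python
import itertools
import numpy as np


def log_marginal_likelihood(X, y, lengthscale, noise_var, signal_var=1.0):
    """GP log marginal likelihood log p(y | X, theta) for an RBF kernel."""
    d2 = np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=-1)
    K = signal_var * np.exp(-0.5 * d2 / lengthscale**2) + noise_var * np.eye(len(X))
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y))
    n = len(X)
    return float(-0.5 * y @ alpha - np.sum(np.log(np.diag(L))) - 0.5 * n * np.log(2 * np.pi))


def refit_hyperparameters(X, y, lengthscales, noise_vars):
    """MLE of (length scale, noise variance) by grid search over the candidate values."""
    y = (y - y.mean()) / y.std()                        # standardize targets before fitting
    scores = {
        (ls, nv): log_marginal_likelihood(X, y, ls, nv)
        for ls, nv in itertools.product(lengthscales, noise_vars)
    }
    return max(scores, key=scores.get)


rng = np.random.default_rng(1)
X_train = rng.uniform(-2, 2, size=(20, 2))
y_train = np.sin(X_train[:, 0]) + 0.05 * rng.standard_normal(20)

# Cycle n: augment with the new pair (x_n, y_n), then refit on the augmented data.
x_n, y_n = np.array([[0.5, 0.5]]), np.array([np.sin(0.5)])
X_train, y_train = np.vstack([X_train, x_n]), np.concatenate([y_train, y_n])
best_ls, best_noise = refit_hyperparameters(X_train, y_train, [0.1, 0.3, 1.0, 3.0], [1e-4, 1e-2, 1e-1])
print(best_ls, best_noise)
```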

Data Presentation & Analysis

Table 1: Representative Optimization Loop Performance on Benchmark Tasks

| Benchmark Target (Molecular Property) | Initial Dataset Size | BO Iterations | Best Value Found | Improvement Over Initial | Key Acquisition Function |
|---|---|---|---|---|---|
| DRD2 Antagonism (pIC50) | 50 | 20 | 8.2 | +1.8 | Expected Improvement |
| JAK2 Inhibition (pIC50) | 100 | 30 | 7.9 | +1.5 | Upper Confidence Bound |
| Aqueous Solubility (LogS) | 200 | 25 | -4.2 | +0.9 (higher is better) | Predictive Entropy Search |

Table 2: Common Stopping Criteria for the Optimization Loop

| Criterion | Calculation | Typical Threshold | Rationale |
|---|---|---|---|
| Iteration Limit | n ≥ N_max | 30-100 cycles | Practical resource constraint. |
| Performance Plateau | max(y_last_k) - max(y_prev_k) < δ | δ = 0.05 (pIC50 units) | Diminishing returns on investment. |
| Acquisition Value Threshold | max(α(x)) < ε | ε = 0.01 | Expected gain from further sampling is negligible. |
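The three criteria in Table 2 can be combined into one check run at the end of each cycle. This is a minimal sketch, assuming that history holds the observed y values in cycle order and that max_acq is the largest acquisition value found in the latest cycle. The defaults mirror the typical thresholds in the table, and the function name is illustrative.

```python
def should_stop(history, n_max=100, k=5, delta=0.05, max_acq=None, eps=0.01):
    """Apply the Table 2 stopping criteria; return the criterion that fired, or None.

    history: observed y values (e.g., pIC50), one per completed cycle, in order.
    max_acq: largest acquisition value found in the latest cycle, if available.
    """
    n = len(history)
    if n >= n_max:                                        # Iteration Limit
        return "iteration_limit"
    if n >= 2 * k:                                        # Performance Plateau
        if max(history[-k:]) - max(history[-2 * k:-k]) < delta:
            return "performance_plateau"
    if max_acq is not None and max_acq < eps:             # Acquisition Value Threshold
        return "acquisition_threshold"
    return None


pic50s = [6.1, 6.8, 7.3, 7.31, 7.29, 7.30, 7.32, 7.28, 7.33, 7.31]
print(should_stop(pic50s, k=5))
```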

Visualization of Workflows

Bayesian Optimization Cycle Flow

Surrogate Model Update Step

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Reagents & Tools for the Evaluation Phase

| Item/Category | Example Product/Kit | Function in the Loop |
|---|---|---|
| Compound Management | DMSO (≥99.9%), Echo 555 Liquid Handler | Stores and dispenses candidate molecules for assay preparation. |
| Biochemical Assay Kits | ADP-Glo Kinase Assay, LANCE Ultra cAMP Assay | Measures target-specific activity (e.g., kinase inhibition) to determine pIC50. |
| Solubility Assay | CheqSol (pION), Nephelometric Solubility Assay Plates | Determines kinetic aqueous solubility (LogS) of synthesized candidates. |
| CYP450 Inhibition Assay | Vivid CYP450 Screening Kits (Thermo Fisher) | Assesses CYP enzyme inhibition as an indicator of drug-drug interaction potential. |
| Cell-Based Viability Assay | CellTiter-Glo Luminescent Cell Viability Assay (Promega) | Evaluates cytotoxicity in relevant cell lines, a key early toxicity metric. |
| High-Fidelity Simulation | Schrödinger Suite (FEP+), GROMACS, AMBER | Computationally evaluates binding free energy or physicochemical properties when wet-lab experiments are not immediately feasible. |

Within the paradigm of Bayesian Optimization (BO) in molecular latent space research, the concurrent optimization of potency and selectivity represents a critical, non-trivial multi-objective challenge. This application note details a framework for navigating chemical latent spaces, defined by generative models like variational autoencoders (VAEs), to efficiently identify compounds balancing high target engagement (potency) with minimal off-target activity (selectivity). BO's strength in balancing exploration with exploitation makes it ideal for this expensive, high-dimensional search problem.

Quantitative Benchmarking of BO Strategies

Recent studies demonstrate the efficacy of BO in latent space for dual-parameter optimization. The table below summarizes key performance metrics from benchmark studies on kinase, protease, GPCR, and epigenetic-reader datasets.

Table 1: Benchmark Performance of BO Strategies in Latent Space for Potency (IC50) & Selectivity (SI) Optimization

| BO Acquisition Function | Surrogate Model | Dataset (Target) | Key Metric: Improvement over Random Search | Pareto Front Quality (Hypervolume) |
|---|---|---|---|---|
| q-Expected Hypervolume Improvement (qEHVI) | Gaussian Process (GP) | JAK2 Kinase Inhibitors | 3.5x faster to identify nM-potent, 10-fold selective leads | 0.78 ± 0.05 |
| Predictive Entropy Search (PES) | Sparse Gaussian Process | Serine Protease Family | Identified 12 selective hits (>100x) in 5 cycles vs. 15 cycles for random search | 0.65 ± 0.07 |
| Thompson Sampling | Deep Kernel Learning (DKL) | GPCR Panel (5-HT2B vs. others) | Achieved <50 nM potency (IC50) and >30-fold selectivity in 40% fewer synthesis cycles | 0.72 ± 0.04 |
| ParEGO (Scalarization) | Random Forest | Epigenetic Readers (BET family) | Improved the BRD4/BRD2 selectivity ratio 15-fold while maintaining <100 nM potency | 0.60 ± 0.08 |

Core Experimental Protocol: A BO-Driven Cycle for Lead Optimization

Protocol Title: Integrated Bayesian Optimization in Latent Space for Potency-Selectivity Profiling.

Objective: To iteratively design, synthesize, and test compound libraries guided by BO to maximize a dual objective function combining binding potency and a selectivity index.

Materials & Pre-requisites:

- A pre-trained molecular generative model (e.g., VAE, JT-VAE) creating a continuous latent space.

- An initial seed dataset of 50-200 molecules with measured Target IC50 and Off-Target IC50 (for a key anti-target).

- Access to rapid synthesis (e.g., parallel medicinal chemistry, DNA-encoded libraries) and screening platforms.

Step-by-Step Workflow:

- Data Encoding & Objective Definition:

- Encode all molecules from the seed set into latent vectors (z).

- Calculate the Selectivity Index (SI) as: SI = Off-Target IC50 / Target IC50.

- Define the dual objective for BO:

- Objective 1: Maximize -log10(Target IC50), i.e., pIC50 (maximize potency).

- Objective 2: Maximize log10(SI) (maximize selectivity).
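Both objectives can be computed directly from the assay readouts. This is a minimal sketch, assuming both IC50 values are given in molar units. Note that log10(SI) equals the on-target pIC50 minus the off-target pIC50, so both objectives are maximized. The function name is illustrative.

```python
import math


def potency_selectivity_objectives(target_ic50_m, off_target_ic50_m):
    """Return (pIC50, log10 SI) for one compound; both objectives are maximized.

    IC50 values are in molar units; SI = off-target IC50 / target IC50.
    """
    p_ic50 = -math.log10(target_ic50_m)
    log_si = math.log10(off_target_ic50_m / target_ic50_m)
    return p_ic50, log_si


# 10 nM on target and 1 uM on the anti-target give pIC50 = 8 and SI = 100 (log10 SI = 2).
print(potency_selectivity_objectives(10e-9, 1e-6))
```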

Surrogate Model Training:

- Train a multi-output Gaussian Process (GP) surrogate model on the latent vectors (z) to predict the mean and uncertainty of both objective functions.

Acquisition & Candidate Selection:

- Using the qEHVI acquisition function, query the surrogate model to identify the set of latent points (z*) expected to most improve the Pareto frontier of potency and selectivity.

- Decode the selected latent vectors (z*) into novel molecular structures using the decoder of the generative model.

- Apply chemical feasibility and synthetic accessibility (SA) filters.
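qEHVI scores candidates by the expected gain in the hypervolume dominated by the Pareto front. The sketch below shows the deterministic quantities underneath, for two maximized objectives (pIC50, log10 SI):

- Pareto filtering.
- 2-D hypervolume against a reference point.
- The hypervolume improvement of one candidate with known outcomes.

The reference point and data are illustrative. Libraries such as BoTorch take the expectation of this improvement over the surrogate posterior.

```python
def pareto_front(points):
    """Non-dominated subset of 2-D objective vectors, both objectives maximized."""
    front = []
    for p in points:
        dominated = any(
            q[0] >= p[0] and q[1] >= p[1] and (q[0] > p[0] or q[1] > p[1]) for q in points
        )
        if not dominated:
            front.append(p)
    return front


def hypervolume_2d(points, ref):
    """Area dominated by the Pareto front of `points` and bounded below by `ref`."""
    pts = sorted((p for p in pareto_front(points) if p[0] > ref[0] and p[1] > ref[1]),
                 key=lambda p: p[0], reverse=True)
    area, prev_y = 0.0, ref[1]
    for x, y in pts:                      # x decreases while y increases along the front
        area += (x - ref[0]) * (y - prev_y)
        prev_y = y
    return area


def hypervolume_improvement(points, candidate, ref):
    """Gain in dominated hypervolume from adding one candidate outcome."""
    return hypervolume_2d(list(points) + [candidate], ref) - hypervolume_2d(points, ref)


observed = [(7.0, 1.0), (8.0, 0.5), (6.5, 1.5), (6.8, 0.8)]   # (pIC50, log10 SI)
ref = (6.0, 0.0)
print(pareto_front(observed))
print(hypervolume_2d(observed, ref))
print(hypervolume_improvement(observed, (7.5, 1.2), ref))
```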

Experimental Testing & Iteration:

- Synthesize and purify the top 5-10 proposed compounds.

- Perform dose-response assays to determine experimental Target IC50 and Off-Target IC50 (for the same anti-target).

- Append the new data (latent vector, experimental results) to the training set.

- Repeat from Step 2 (Surrogate Model Training) for 5-10 optimization cycles.

Visualization of the Workflow and Biological Context

Bayesian Optimization Cycle in Molecular Latent Space

Molecular Selectivity in a Kinase Inhibition Pathway

The Scientist's Toolkit: Key Research Reagent Solutions

Table 2: Essential Materials for Implementation

| Reagent / Material | Supplier Examples | Function in Protocol |
|---|---|---|
| Pre-trained JT-VAE Model | Open-source (GitHub), IBM RXN for Chemistry | Provides the molecular latent space for encoding/decoding; foundation for the BO search domain. |
| GPyTorch or BoTorch Library | PyTorch Ecosystem | Enables building and training of multi-output Gaussian Process surrogate models for the BO loop. |
| qEHVI Acquisition Module | Ax Platform, BoTorch | Computes the expected improvement of the Pareto front, guiding the selection of optimal latent vectors. |
| Parallel Medicinal Chemistry Kit | Sigma-Aldrich, Enamine, Building Blocks | Enables rapid synthesis of the small compound batches proposed by each BO cycle. |
| HTRF Kinase Assay Kit (Target) | Cisbio, PerkinElmer | Provides a homogeneous, high-throughput method for accurately measuring primary target IC50. |
| Selectivity Screening Panel | Eurofins, Reaction Biology | Offers profiling against a standardized panel of anti-targets (e.g., kinome) to calculate selectivity indices. |
| RDKit or ChemAxon Suite | Open-source, ChemAxon | Used for chemical feasibility checking, filtering, and calculating synthetic accessibility (SA) scores. |

Within the broader thesis on Bayesian Optimization (BO) in molecular latent space research, this application note addresses the central challenge of navigating high-dimensional chemical space under multiple, often competing, objectives. Traditional discovery is serial and inefficient. By coupling a deep generative model's latent space with a multi-objective BO loop, we can efficiently sample and optimize molecules for simultaneous constraints like potency, solubility, and synthetic accessibility.

Core Methodology: Multi-Objective Bayesian Optimization in Latent Space

The protocol involves a closed-loop cycle of suggestion, evaluation, and model updating.

Experimental Protocol: The BO-Driven Design Cycle

Initialization:

- Library: Start with a diverse dataset of 10,000-50,000 molecules with associated property data for target properties (e.g., pIC50, LogS, LogP).

- Model Training: Train a variational autoencoder (VAE) or a grammar VAE (GVAE) on the molecular structures (SMILES strings). Validate reconstruction accuracy (>95%).

- Surrogate Model: Define a Gaussian Process (GP) prior over the latent space, initialized with a Matérn kernel.
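The Matérn family is parameterized by a smoothness ν. The text does not fix ν, so the sketch below assumes ν = 5/2, a common choice for latent-space GPs. It evaluates the kernel between two latent vectors with a single isotropic length scale (no per-dimension ARD) and builds a small Gram matrix. The function name and inputs are illustrative.

```python
import math


def matern52(z1, z2, lengthscale=1.0, variance=1.0):
    """Matern kernel with nu = 5/2 between two latent vectors (isotropic length scale)."""
    r = math.dist(z1, z2) / lengthscale
    s = math.sqrt(5.0) * r
    return variance * (1.0 + s + s * s / 3.0) * math.exp(-s)


# Gram matrix for three latent points; entries fall off smoothly with distance.
Z = [(0.0, 0.0), (0.5, 0.0), (2.0, 1.0)]
K = [[matern52(a, b) for b in Z] for a in Z]
for row in K:
    print([round(v, 3) for v in row])
```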

Acquisition & Decoding:

- Using the trained VAE encoder, project the initial dataset into the latent space (z-vectors).

- Fit the GP surrogate model to map latent vectors (z) to experimental property values (y).

- Optimize a multi-objective acquisition function (e.g., Expected Hypervolume Improvement, EHVI) to propose the next latent point (z*) that optimally balances exploration and exploitation across all properties.
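EHVI averages the hypervolume improvement over the surrogate's predictive distribution. The sketch below estimates it by Monte Carlo for a single candidate and two maximized objectives. It makes the simplifying assumption that the posterior is an independent Gaussian per objective; a multi-output GP would generally correlate them. The front, reference point, and posterior moments are illustrative.

```python
import random


def hv2d(points, ref):
    """2-D hypervolume (both objectives maximized) of a point set above reference point ref."""
    pts = sorted((p for p in points if p[0] > ref[0] and p[1] > ref[1]), key=lambda p: -p[0])
    area, best_y = 0.0, ref[1]
    for x, y in pts:
        if y > best_y:                       # points with y <= best_y are dominated
            area += (x - ref[0]) * (y - best_y)
            best_y = y
    return area


def mc_ehvi(front, mean, std, ref, n_samples=4000, seed=0):
    """Monte Carlo EHVI for one candidate with independent Gaussian posteriors per objective."""
    rng = random.Random(seed)
    base = hv2d(front, ref)
    total = 0.0
    for _ in range(n_samples):
        y = (rng.gauss(mean[0], std[0]), rng.gauss(mean[1], std[1]))
        total += hv2d(front + [y], ref) - base
    return total / n_samples


front = [(8.0, 0.5), (7.0, 1.0), (6.5, 1.5)]     # current Pareto front (pIC50, log10 SI)
ref = (6.0, 0.0)
promising = mc_ehvi(front, mean=(7.5, 1.2), std=(0.3, 0.3), ref=ref)
dominated = mc_ehvi(front, mean=(6.2, 0.3), std=(0.3, 0.3), ref=ref)
print(round(promising, 3), round(dominated, 3))
```

A candidate whose predicted outcomes sit deep inside the dominated region scores near zero. Uncertainty can still lift a borderline candidate, which is how EHVI balances exploration and exploitation across objectives.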

Evaluation & Iteration:

- Decode the proposed z* into a novel molecular structure (SMILES) using the VAE decoder.

- Employ rapid in silico property prediction (Steps A-C below) for initial filtering.

- Termination Criteria: Loop continues until either:

- A set number of iterations (e.g., 100) is reached.

- The Pareto hypervolume plateaus (<2% improvement over 20 iterations).
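Both termination rules can be checked after each iteration. The sketch below assumes that hv_history stores the Pareto hypervolume after every iteration. It reads the plateau rule as a relative improvement below 2% between the value 20 iterations ago and the current value. The function names are illustrative.

```python
def hv_plateaued(hv_history, window=20, rel_tol=0.02):
    """True if hypervolume grew by less than rel_tol (relative) over the last `window` iterations."""
    if len(hv_history) <= window:
        return False                          # not enough history yet
    old, new = hv_history[-window - 1], hv_history[-1]
    if old <= 0.0:
        return False                          # relative change is undefined for an empty front
    return (new - old) / old < rel_tol


def should_terminate(iteration, hv_history, max_iter=100, window=20, rel_tol=0.02):
    """Stop at the iteration budget or when the hypervolume has plateaued."""
    return iteration >= max_iter or hv_plateaued(hv_history, window, rel_tol)


growing = [1.0 + 0.05 * i for i in range(30)]
flat = [1.0 + 0.05 * min(i, 5) for i in range(30)]
print(should_terminate(30, growing), should_terminate(30, flat))
```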